Top 10 AI Skills Employers Are Hiring For in 2026 (With Salary Data)

By Irene Holden

Last Updated: January 4th 2026

Too Long; Didn't Read

AI literacy and prompt engineering, followed by AI-focused data engineering, top the list for 2026 because literacy is now the baseline across functions and data engineers build the pipelines that make AI work in production. Roughly half of tech roles now expect AI skills and postings mentioning AI pay about 28% more, while AI-fluent nontechnical roles can see pay uplifts of roughly 35% to 43% and data engineers have midpoint salaries around $153,750.

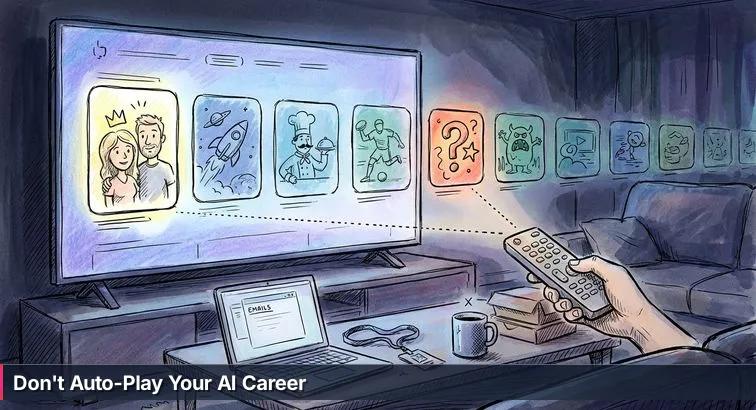

You know that moment when your streaming app shoves a bright “Top 10 in the U.S. Today” row in your face, and your thumb just hovers over the remote? Part of you wants to trust the ranking and hit play on #1. Another part remembers the last time you did that and bailed twenty minutes in. Those tiles are sorted by an algorithm that optimizes for watch time and clicks, not for whether you need comfort, a laugh, or just background noise while you half-watch and half-check email.

Rankings are algorithms, not advice

Most “Top 10 AI skills” lists work the same way. They’re usually ranked by a mix of employer demand, salary levels, and growth - useful signals, but incomplete ones. Recruiter data shows that roughly 50% of tech jobs now require AI skills, and job posts that mention AI pay an average 28% salary premium, about $18,000 more per year than similar roles without AI requirements. PwC’s Global AI Jobs Barometer goes even further: workers who have AI competencies now earn about 56% more than colleagues doing the same jobs without AI. No surprise that every skill anywhere near “AI” shoots to the top of the list.

What the lists ignore: you, your start point, and your values

What those rankings can’t see is you. They don’t know whether you’re coming from customer support, teaching, or traditional IT. They don’t show which skills are realistically accessible from a non-CS background, which ones align with how much risk you’re willing to take, or whether you actually want to spend your day tuning GPU clusters versus coaching teams on responsible AI use. A skill can rank #1 for demand and pay, and still be the wrong “show” for your current season of life.

“Companies that continue treating AI as a niche technical skill will find themselves competing for talent with organizations that have embedded AI literacy across their entire workforce.” - Cole Napper, VP of Research, Lightcast

The four “genres” of AI work

To make sense of the rankings - and avoid just auto-playing whatever’s on top - it helps to think in genres. In this guide, AI skills fall into four broad lanes:

- Builders - people who create models and AI systems (ML engineers, computer vision, NLP, custom LLM developers).

- Integrators - people who connect AI to real company data and infrastructure (data engineers, RAG specialists, MLOps and cloud AI engineers).

- Governors - people who manage risk, ethics, compliance, and policy around AI systems.

- Translators - people in non-technical roles who use AI as a force multiplier in marketing, HR, operations, finance, sales, and more.

The list you’re about to read is ordered by those hard market metrics - demand, salary impact, breadth of roles - but your job isn’t to obey the ranking. It’s to treat it like a “Genres” view on your streaming app, pick the lane that fits who you are and where you’re starting, and then build your own watchlist of one or two AI skills to “hit play” on first.

Table of Contents

- Why the right AI skills matter

- AI Literacy & Prompt Engineering

- Data Engineering for AI-Ready Pipelines

- MLOps & AI Infrastructure

- Custom LLM Development & Fine-Tuning

- Retrieval-Augmented Generation (RAG) & Vector Databases

- Natural Language Processing & Conversational AI

- Computer Vision for Autonomous and Industrial Systems

- AI Ethics, Risk & Governance

- Cloud and Distributed AI Engineering

- Applied Generative AI for Marketing, Sales & RevOps

- Build your own AI skill watchlist

- Frequently Asked Questions

Hiring trends now favor demonstrable AI literacy and project portfolios - this article explains how to build both.

AI Literacy & Prompt Engineering

If you only pick up one AI skill this year, make it the ability to actually use these tools well. Recruiters and hiring managers keep calling out AI literacy and prompt engineering as the new baseline: LinkedIn data shows AI-related competencies as the skill of the moment, and a growing share of job postings now expect you to be comfortable collaborating with tools like ChatGPT, Claude, or Gemini. As Forbes put it in its analysis of rising skills on LinkedIn, AI capabilities are moving from “nice-to-have” to standard expectations for knowledge workers across functions like marketing, HR, and operations, not just engineering (LinkedIn reveals the most in-demand skills on the rise).

What AI literacy and prompt engineering look like day-to-day

In practice, AI literacy means you can turn messy, real-world problems into clear instructions, sanity-check whatever the model gives back, and explain how you got from question to answer. Prompt engineering is just the hands-on side of that. Your day might look like:

- Designing structured prompts that tell an AI what role to play, what data to use, and what format to respond in.

- Chaining AI tools with spreadsheets, docs, and email so a single prompt kicks off a repeatable workflow instead of a one-off answer.

- Adding guardrails - constraints, examples, and checklists - so you reduce hallucinations and catch errors before they reach a customer or your manager.

- Coaching teammates who are nervous about AI so they can use it safely instead of avoiding it or misusing it.
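To make the first and third bullets concrete, here is a minimal sketch of a structured prompt assembled in Python. The section labels (ROLE, CONTEXT, TASK, FORMAT, GUARDRAIL), the `build_prompt` helper, and the sample tickets are all illustrative assumptions, not a standard any particular tool requires.

```python
def build_prompt(role, context, task, output_format):
    """Assemble a structured prompt: who the model is, what data it may use,
    what to do, and how to answer. The section labels are illustrative."""
    sections = [
        f"ROLE: {role}",
        f"CONTEXT (use only this data):\n{context}",
        f"TASK: {task}",
        f"FORMAT: {output_format}",
        # A simple guardrail: give the model an explicit way to decline.
        'GUARDRAIL: If the context does not contain the answer, say "not enough information".',
    ]
    return "\n\n".join(sections)


# Hypothetical example inputs.
prompt = build_prompt(
    role="You are a support operations analyst.",
    context="Ticket 1: login fails after password reset.\nTicket 2: invoice PDF is blank.",
    task="Group the tickets by likely root cause.",
    output_format="A bulleted list, one line per group.",
)
print(prompt)
```

Keeping the parts separate like this makes a prompt easy to reuse: swap the context and task, keep the role, format, and guardrail, and you have the start of a repeatable workflow rather than a one-off question.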

Roles, titles, and salary impact

The reason this skill shows up at the top of so many lists is simple: it travels well. LinkedIn’s own job-market analysis notes that a large share of AI-related postings are now for non-technical roles - marketing, sales, HR, operations - where AI literacy is the differentiator rather than deep coding. Lightcast’s research has found that in fields like HR and other non-tech work, AI literacy alone can drive salary uplifts of around 35%, and Forbes reports that in marketing and sales, applied AI skills can trigger average pay bumps of about 43%, with senior specialists earning up to $250,000 in total compensation. Typical titles include “AI-enabled marketing manager,” “operations analyst (AI tools),” or “AI productivity specialist,” and they increasingly show up in LinkedIn’s Work Change Report as examples of hybrid roles blending domain knowledge with AI fluency.

“The 2026 playbook is AI literacy with restraint: the ability to verify, edit, and explain machine-assisted work... outputs must survive scrutiny when a tool’s confidence exceeds its accuracy.” - Market analysts, AI labor trends commentary

How to start without a CS degree

If you’re switching careers or coming from a non-technical background, this is one of the most accessible on-ramps into AI. Instead of binge-watching random tutorials, treat it like a short, focused “season”: 15-25 weeks of structured practice where you build real workflows you could show to a hiring manager. Nucamp’s 15-week AI Essentials for Work bootcamp focuses on prompts, everyday work apps, and team workflows, while its 25-week Solo AI Tech Entrepreneur track adds LLM integration, AI agents, and monetizing small SaaS products. Both are priced at a fraction of the $10,000+ many traditional bootcamps charge, and Nucamp reports a 78% employment rate and a 4.5/5 Trustpilot rating across hundreds of learner reviews.

| Program | Duration (weeks) | Price (USD) | Primary focus |
|---|---|---|---|
| AI Essentials for Work | 15 | $3,582 | AI literacy, prompt engineering, workplace tools |
| Solo AI Tech Entrepreneur | 25 | $3,980 | Prompting, LLM integration, AI agents, SaaS products |

Data Engineering for AI-Ready Pipelines

Think of AI literacy as learning to drive; data engineering is the team that designs and maintains the roads, bridges, and traffic lights so everyone else can move safely. Recruiter data shows that the single most searched technical role right now is the data engineer who can build AI-ready pipelines, because every serious AI project depends on clean, well-organized data flowing in the right direction.

Demand and salary for AI-focused data engineers

Data roles have quietly become some of the most strategic in tech. Robert Half’s 2026 Technology Salary Trends report notes that data-focused positions saw a 4.1% year-over-year salary increase, and midpoint salaries for experienced data engineers now sit around $153,750 in the U.S. When those roles explicitly support AI initiatives - feeding models, powering analytics, enabling retrieval systems - they typically sit at the top of internal pay bands and are among the hardest for hiring managers to fill. Dice has also reported that roughly half of all tech roles now require some level of AI or data capability, pushing demand for engineers who can own the data layer behind those systems.

“Applied expertise and AI fluency are key factors to career and hiring success. Specialized skills in data engineering, cybersecurity, infrastructure, and applied AI are driving the strongest compensation growth.” - James Vallone, President, Motion Recruitment, via TechRSeries analysis

What you actually do all day

On a typical day, a data engineer focused on AI-ready pipelines is less worried about model architectures and more obsessed with how data moves and how trustworthy it is. Your work might include:

- Designing and maintaining ETL/ELT pipelines from CRMs, ERPs, product logs, and third-party APIs into warehouses or data lakes.

- Building curated datasets and feature stores that ML models and LLM-based systems can consume reliably.

- Implementing data quality checks, validation rules, and monitoring so bad data doesn’t silently poison models.

- Collaborating with ML engineers, analysts, and product teams to make sure the right data is available at the right grain and latency.
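The data quality bullet is the easiest one to picture in code. The sketch below is a simplified illustration: the schema (`customer_id`, `signup_date`, `monthly_spend`) and the three rules are hypothetical, and a production pipeline would more likely express checks like these in its orchestration or transformation tooling than in a hand-rolled script.

```python
from datetime import date

# Hypothetical schema for illustration only.
REQUIRED_FIELDS = ("customer_id", "signup_date", "monthly_spend")


def validate_row(row):
    """Return a list of human-readable problems found in one record."""
    problems = []
    for field in REQUIRED_FIELDS:
        if row.get(field) in (None, ""):
            problems.append(f"missing {field}")
    spend = row.get("monthly_spend")
    if isinstance(spend, (int, float)) and spend < 0:
        problems.append("negative monthly_spend")
    signup = row.get("signup_date")
    if isinstance(signup, date) and signup > date.today():
        problems.append("signup_date in the future")
    return problems


def split_clean_and_rejected(rows):
    """Route valid rows onward and keep rejected rows with their reasons."""
    clean, rejected = [], []
    for row in rows:
        problems = validate_row(row)
        if problems:
            rejected.append((row, problems))
        else:
            clean.append(row)
    return clean, rejected


rows = [
    {"customer_id": 1, "signup_date": date(2024, 5, 1), "monthly_spend": 49.0},
    {"customer_id": 2, "signup_date": date(2024, 6, 1), "monthly_spend": -5.0},
    {"customer_id": None, "signup_date": date(2024, 7, 1), "monthly_spend": 20.0},
]
clean, rejected = split_clean_and_rejected(rows)
for row, problems in rejected:
    print(row["customer_id"], problems)
```

The design choice worth noticing is that bad rows are set aside with a reason instead of being silently dropped, so someone can trace why a record never reached the model.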

Skills and tools for AI-ready pipelines

The toolset here is technical but learnable, even from a non-traditional background, as long as you’re comfortable with logic and problem-solving. Core skills include:

- Languages: SQL for querying and shaping data; Python for scripting, automation, and data manipulation.

- Platforms: Cloud data warehouses and lakes (think Snowflake, BigQuery, Redshift, or Databricks).

- Pipelines: Orchestration and transformation tools like Airflow or dbt, plus event streaming with Kafka or similar.

- AI-specific design: Modeling schemas and APIs so downstream ML and LLM systems can access features efficiently.

How to start from a beginner or adjacent role

If you’re coming from support, QA, business analysis, or another adjacent path, your first milestones are clear: get strong with SQL, comfortable with Python, and familiar with basic cloud and DevOps concepts. A structured path like Nucamp’s Back End, SQL & DevOps with Python bootcamp (16 weeks, $2,124) focuses on exactly those foundations: relational databases, backend APIs, scripting, and deployment basics. From there, you can layer on modern data tools (dbt, Airflow, a cloud warehouse) through self-study or more advanced courses.

| Step | Focus | Example tools | Typical timeframe |
|---|---|---|---|
| 1. Fundamentals | SQL, Python basics | PostgreSQL, Pandas | 8-12 weeks |
| 2. Pipelines | ETL/ELT, orchestration | dbt, Airflow | 8-12 weeks |
| 3. AI-ready design | Feature stores, ML datasets | Data warehouse + ML stack | 8-12 weeks |

MLOps & AI Infrastructure

Once a company gets past the “cool demo” phase of AI, someone has to keep the models alive in the wild: deployed, monitored, secure, and not silently drifting off a cliff. That’s the job of MLOps and AI infrastructure. If data engineering builds the roads, MLOps is traffic control, highway maintenance, and the emergency crew that gets called at 2 a.m. when something breaks.

Why MLOps is suddenly everywhere

Hiring data from specialized AI recruiters shows that MLOps engineers are among the highest-paid AI professionals, with many roles ranging from roughly $160,000 to $350,000+ in total compensation, especially at companies that run AI products at scale. An analysis of in-demand AI engineering skills by Second Talent highlights MLOps and infrastructure as a top technical priority, sitting alongside LLM specialization and data engineering in almost every AI-first organization. Broader AI labor reports also note that mid-level AI engineers have seen some of the fastest salary growth, with year-over-year increases outpacing many other tech specialties as companies race to move from experiments to production systems.

“The tech market is becoming increasingly specialized, and the pace of innovation is widening the skills gap faster than most teams can close internally. The organizations that thrive will be those who secure the right resources to fill critical skill gaps and scale capabilities quickly.” - James Vallone, President, Motion Recruitment

What you actually do all day

Day to day, MLOps feels a lot like modern DevOps, but with models instead of just microservices. You’re the person who takes a notebook experiment and turns it into something customers can reliably hit over an API. Typical responsibilities include:

- Packaging models or LLM pipelines into services using Docker, Kubernetes, or serverless frameworks.

- Owning CI/CD pipelines for training, testing, and deploying models, including rollbacks and canary releases.

- Setting up monitoring for latency, errors, data drift, and model performance, then tuning infrastructure accordingly.

- Managing GPU/TPU clusters, autoscaling policies, and cost optimization in the cloud.

- Implementing security, access control, and compliance controls around AI services.
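Data drift monitoring can sound abstract, so here is one way it is sometimes measured: the population stability index (PSI), which compares how a feature was distributed at training time with how it looks in live traffic. This is a minimal sketch under stated assumptions. The bin edges, the sample values, and the alert threshold are all made up for illustration, and the function expects non-empty inputs.

```python
import math

# Hypothetical alert level for this example; teams pick their own.
DRIFT_ALERT_THRESHOLD = 0.25


def bin_fractions(values, edges):
    """Fraction of values in each bin [edges[i], edges[i+1]); the last bin
    also includes its right edge. Values outside the edges are not counted."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        for i in range(len(counts)):
            is_last_bin = i == len(counts) - 1
            in_bin = edges[i] <= v < edges[i + 1]
            if in_bin or (is_last_bin and v == edges[-1]):
                counts[i] += 1
                break
    # A small floor avoids log(0) and division by zero for empty bins.
    return [max(c / len(values), 1e-6) for c in counts]


def population_stability_index(expected, actual, edges):
    """PSI = sum over bins of (actual - expected) * ln(actual / expected)."""
    e = bin_fractions(expected, edges)
    a = bin_fractions(actual, edges)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


edges = [0.0, 0.5, 1.0]
training = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]
live_shifted = [0.6, 0.7, 0.8, 0.9, 0.6, 0.7, 0.8, 0.1]

psi_same = population_stability_index(training, training, edges)
psi_shifted = population_stability_index(training, live_shifted, edges)
print(psi_same, psi_shifted, psi_shifted > DRIFT_ALERT_THRESHOLD)
```

Identical distributions score zero, and the score grows as live data moves away from what the model was trained on. In a real setup a check like this would run on a schedule and feed an alert.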

How it differs from DevOps and data engineering

If you’re coming from software, DevOps, or analytics, it helps to see where MLOps overlaps and where it diverges. At a high level, you’re still shipping and operating software, but the “artifact” now includes data and models that change over time, not just code.

| Discipline | Main goal | Primary artifacts | Typical tools |
|---|---|---|---|
| DevOps/SRE | Keep apps reliable and fast | Microservices, APIs | Docker, Kubernetes, CI/CD, observability stacks |
| Data Engineering | Move and model data for analytics/AI | Pipelines, warehouses, feature tables | SQL, Python, dbt, Airflow, warehouses/lakes |
| MLOps & AI Infra | Operate models in production | Models, LLM pipelines, model-serving infra | MLflow/Kubeflow, GPU clusters, model registries |

A practical path into MLOps

The cleanest way into MLOps is usually from an adjacent discipline: backend engineering, DevOps/SRE, or data engineering. You don’t have to become a research scientist; you do need to understand model lifecycles and how they interact with infrastructure. A simple progression looks like this: first, get comfortable deploying containerized apps and APIs in the cloud; next, learn the basics of training and evaluating models with Python; then, glue those together by deploying a small model or LLM-based service with CI/CD and monitoring. As AI job growth accelerates - industry reports estimate hundreds of thousands of new AI-related roles being created globally in just a year, according to analyses like Index.dev’s AI job growth statistics - that combination of software, cloud, and model awareness is exactly what hiring managers are scanning for when they open an “MLOps Engineer” or “AI Platform Engineer” requisition.

Custom LLM Development & Fine-Tuning

Generic LLMs are like whatever’s sitting at #1 in your streaming Top 10 row: wildly capable, but aimed at the broadest possible audience. What companies increasingly want instead is something tuned to their own universe - legal documents, medical notes, support tickets, policy manuals. That’s where custom LLM development and fine-tuning come in. Industry analyses, including Second Talent’s breakdown of in-demand AI engineering skills, flag LLM specialization as a top technical priority, and specialists in this lane often command an average salary boost of around 47%, with mid-level roles falling in the $150,000-$220,000 range. That premium typically stacks on top of the broader 28% AI-skill bump many postings already advertise.

What you actually do all day

Day to day, this work feels less like “inventing intelligence” and more like careful tailoring. Instead of building a model from scratch, you’re teaching an existing one to speak a company’s language and respect its constraints. Typical tasks include:

- Collecting and cleaning domain-specific data - contracts, call transcripts, medical notes, internal policies - and turning it into training or fine-tuning sets.

- Fine-tuning base models using methods like LoRA/PEFT so they perform better on narrow tasks such as legal reasoning, clinical summarization, or financial analysis.

- Designing evaluation suites to measure accuracy, hallucination rates, safety, and bias on real-world scenarios the business cares about.

- Working with product and infrastructure teams to deploy these customized models into apps, copilots, or internal tools.
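Why does LoRA come up so often in this work? A quick back-of-the-envelope calculation helps. Instead of updating a full weight matrix with d_out x d_in entries, LoRA trains two small matrices of shapes d_out x r and r x d_in, so the trainable count per adapted matrix is r * (d_out + d_in). The dimensions below are illustrative assumptions, not taken from any specific model.

```python
def full_finetune_params(d_out, d_in):
    """Trainable weights when one d_out x d_in matrix is updated directly."""
    return d_out * d_in


def lora_params(d_out, d_in, rank):
    """Trainable weights for a LoRA adapter on that matrix:
    B is d_out x rank and A is rank x d_in."""
    return rank * (d_out + d_in)


# Illustrative sizes only.
d_out, d_in, rank = 4096, 4096, 8

full = full_finetune_params(d_out, d_in)
lora = lora_params(d_out, d_in, rank)
print(f"full: {full:,}  lora: {lora:,}  share: {lora / full:.2%}")
```

With these example numbers the adapter trains well under 1% of the weights in that matrix, which is the main reason parameter-efficient fine-tuning fits on far more modest hardware than full fine-tuning.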

The skills and tools behind custom LLMs

You don’t have to be a research scientist, but you do need solid Python and a grasp of core ML concepts. The core toolkit usually looks like this:

- Languages & frameworks: Python with libraries like PyTorch, TensorFlow, and Hugging Face Transformers for model loading, training, and evaluation.

- Fine-tuning techniques: Transfer learning, parameter-efficient approaches (LoRA, PEFT), and sometimes reinforcement learning from human feedback (RLHF) basics.

- Evaluation & data work: Building test sets, defining metrics, and running experiments to compare base vs. fine-tuned behavior.

- Infrastructure: GPUs (cloud or on-prem), experiment tracking, and model registries to keep versions organized.

How this differs from just “using” an LLM

At a high level, you can think of three tiers of LLM work, each with different data needs, complexity, and roles attached:

| Approach | Data & infra needed | Difficulty | Typical roles |
|---|---|---|---|
| Off-the-shelf API use | Minimal; prompts + small samples | Low | Product managers, marketers, AI-literate ICs |
| Custom LLM fine-tuning | Curated domain data + GPU access | Medium | LLM engineers, applied ML engineers |
| Training from scratch | Massive datasets + large clusters | Very high | Research scientists, big-lab teams |

“One of the smartest things you can do is document your AI projects publicly on LinkedIn or Twitter... it gets you noticed by hiring managers looking for specialists.” - Career strategists, quoted in Forbes on in-demand AI skills

A practical path into this lane usually starts with building small apps on top of existing LLM APIs, then learning basic ML evaluation, and only then experimenting with parameter-efficient fine-tuning on modest open-source models. Bootcamps that focus on building AI-powered products - rather than pure research - can be a good bridge here: they keep you close to real use cases (like internal copilots or domain-specific chatbots) while giving you just enough exposure to training, data curation, and evaluation to talk about your work confidently in an interview.

Retrieval-Augmented Generation (RAG) & Vector Databases

Large language models are great storytellers, but they’re terrible at remembering your company’s latest policy update or that one obscure edge case buried in a PDF from three years ago. Retrieval-augmented generation (RAG) fixes that by wiring LLMs into real, up-to-date data via vector search. Instead of letting the model guess, you fetch the most relevant documents from a vector database, feed them into the prompt, and have the model answer based on those receipts. It’s one of the most practical patterns for “serious” AI apps, and market analysts estimate that the global vector database and semantic search segment is on track to reach around $671 million in annual revenue, reflecting how central this has become to modern AI systems.

What you actually do all day

In a RAG-focused role, your job is less about inventing new models and more about making sure the model sees the right information at the right time. Typical work looks like:

- Building ingestion pipelines that pull in documents (PDFs, tickets, wiki pages, logs), chunk them intelligently, and generate embeddings.

- Choosing and operating a vector database (like Pinecone, Weaviate, or Qdrant) to store those embeddings and serve fast similarity search.

- Designing retrieval strategies so the system grabs the most relevant context without overwhelming the model or blowing your latency budget.

- Wiring the retrieval layer into LLM prompts for chatbots, internal copilots, or semantic search, and then tuning based on real user queries.

Key building blocks and tools

You can think of a RAG system as three major pieces: document processing, vector storage, and LLM orchestration. Different tools shine at different parts of that pipeline, and you’ll often mix and match depending on the stack your team already uses. Articles that map out the most in-demand AI skills in 2025-2026 consistently highlight this blend of AI and data engineering as a distinct skill set, not just “prompting with extra steps.”

| Component | Main purpose | Example tools | What you’d tweak |
|---|---|---|---|
| Document processing | Clean, chunk, and embed content | Python scripts, LangChain, LlamaIndex | Chunk size, metadata, embedding model |
| Vector database | Store embeddings and serve fast search | Pinecone, Weaviate, Qdrant | Index type, filters, replication, scaling |
| LLM orchestration | Combine retrieved context with prompts | LangChain, custom APIs, app backend | Prompt templates, context window usage |

How to get started from scratch

For beginners or career-switchers, RAG is a sweet spot: technical enough to be valuable, but far more approachable than training your own models. A simple starter project can double as a portfolio piece for interviews and a learning lab for your current job.

- Pick a small, self-contained corpus - for example, your company handbook, a public documentation set, or a collection of blog posts.

- Write a script that splits documents into chunks, calls an embedding model, and stores vectors plus metadata in a lightweight vector database.

- Build a basic Q&A interface where a user asks a question, you retrieve the top-k relevant chunks, and then pass those plus the question into an LLM to generate an answer.

- Iterate: adjust chunking, filters, and prompts based on real questions and failure cases you observe.
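The starter project above can be sketched end to end in plain Python. Everything here is a deliberately toy stand-in: `embed` counts words instead of calling a real embedding model, a Python list plays the role of the vector database, and the final prompt is printed rather than sent to an LLM. The handbook sentences are invented for the example.

```python
import math
import re
from collections import Counter


def chunk_text(text, max_words=40):
    """Split a document into chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text):
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question, index, k=2):
    """Return the text of the k chunks most similar to the question."""
    q = embed(question)
    ranked = sorted(index, key=lambda item: cosine(q, item["vector"]), reverse=True)
    return [item["text"] for item in ranked[:k]]


def build_rag_prompt(question, chunks):
    """Put the retrieved chunks in front of the question."""
    context = "\n".join(f"- {c}" for c in chunks)
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {question}"


# Invented mini-corpus standing in for a company handbook.
documents = [
    "Employees accrue vacation days monthly and may carry over five unused days.",
    "Expense reports must be submitted within thirty days with itemized receipts.",
    "Remote work requires manager approval and a secure home network.",
]
index = [{"text": c, "vector": embed(c)} for doc in documents for c in chunk_text(doc)]

question = "How many vacation days can I carry over?"
top_chunks = retrieve(question, index, k=1)
prompt = build_rag_prompt(question, top_chunks)
print(prompt)
```

Swapping the toy pieces for real ones (an embedding model, a vector database, an LLM call) changes the plumbing but not the shape: ingest, embed, retrieve top-k, then answer from the retrieved context.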

“The World Economic Forum has identified AI and big data as the fastest-growing job cluster, favoring professionals who can connect advanced models to real-world information flows.” - Analysis of future-ready skills, cited in Cogent University’s tech skills outlook

Natural Language Processing & Conversational AI

Whenever you chat with a support bot, ask your phone a question, or type into a search bar and get eerily relevant results, you’re bumping into natural language processing (NLP) and conversational AI. Under the buzzwords, this lane is about one thing: helping machines actually understand and respond to human language well enough that people will keep using them.

Demand and salary: why NLP still punches above its weight

NLP has been a core AI specialization for years, and it hasn’t fallen off just because LLMs are everywhere now. In fact, roles that blend NLP with conversational interfaces are some of the strongest earners in the AI ecosystem. Industry compensation data summarized in Investopedia’s roundup of top-paying AI jobs puts natural language processing specialists at a median salary of about $188,600 in the U.S., with senior roles in large tech companies and well-funded startups often pushing well beyond $220,000 when you factor in bonuses and equity. Institutes like the United States Artificial Intelligence Institute also list NLP and conversational AI among the “non-negotiable” AI skill sets employers now expect to see as they embed AI across products and services.

What you actually do all day

On the ground, NLP and conversational AI work is part linguistics, part product design, and part engineering. Instead of just “making a chatbot,” you’re constantly tuning how well systems understand people and how natural they feel in return. Your day might include:

- Designing and training intent classifiers so a bot can tell the difference between “reset my password” and “I think my account was hacked.”

- Building entity extractors that pull out names, dates, product IDs, or dollar amounts from messy text and call transcripts.

- Mapping and refining dialogue flows for chat or voice so conversations don’t get stuck in loops or dead ends.

- Analyzing chat logs, reviews, or survey responses at scale to surface themes like churn risks, product confusion, or sentiment shifts.
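Before reaching for a trained model, many teams start entity extraction with a rule-based baseline, which also makes a handy first project. The sketch below uses regular expressions only; the patterns, and especially the `product_id` format, are hypothetical and would need adapting to real data. It is a baseline to compare a learned extractor against, not a replacement for one.

```python
import re

# Illustrative patterns; real formats will differ.
PATTERNS = {
    "amount": re.compile(r"\$\d+(?:,\d{3})*(?:\.\d{2})?"),
    "date": re.compile(r"\b\d{4}-\d{2}-\d{2}\b"),
    "product_id": re.compile(r"\b[A-Z]{2,4}-\d{3,6}\b"),  # hypothetical ID format
}


def extract_entities(text):
    """Return every match for each entity type found in the text."""
    return {label: pattern.findall(text) for label, pattern in PATTERNS.items()}


message = "I was charged $1,249.99 on 2025-11-03 for order SKU-48213, please refund $49."
entities = extract_entities(message)
print(entities)
```

Rules like these break quickly on messy transcripts ("twelve hundred bucks", "last Tuesday"), and that failure list is exactly what tells you where a trained sequence-labeling model would earn its keep.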

The skills and tools behind good conversations

To be effective here, you need enough technical depth to train and evaluate models, paired with a strong feel for language and user experience. Common ingredients include:

- Languages: Python for data prep, model training, and evaluation.

- Libraries & APIs: spaCy or NLTK for classic NLP tasks; modern transformer-based libraries and hosted LLM APIs for classification, summarization, and question answering.

- Core tasks: Text classification, sequence labeling (for extracting entities), summarization, retrieval-based question answering, and ranking for search.

- Data work: Setting up annotation workflows, managing labeling quality, and building test sets that reflect real user behavior, not just clean examples.

How to break in from a non-ML background

If you’re coming from content, support, UX writing, or business analysis, this lane can be a very natural pivot because you’re already used to thinking in words and workflows. A practical way in is to start small: build a sentiment classifier for customer reviews, or an FAQ bot backed by an off-the-shelf LLM, then iterate as you see where it fails. As guides like USAII’s overview of non-negotiable AI skills point out, the combination of domain knowledge and language-focused AI is exactly what many companies are missing - so even one or two well-documented projects can carry a lot of weight in conversations with hiring managers.

Computer Vision for Autonomous and Industrial Systems

Text gets all the hype right now, but a huge chunk of high-value AI work is about understanding the physical world: cars on a highway, defects on a factory line, tumors in a scan, shoppers in a store. That’s the realm of computer vision, and when you look past the shiny “Top 10” skill tiles, this is one of the most quietly powerful lanes for long-term careers - especially if you like seeing your work interact with real hardware and real environments.

Where the demand comes from - and what it pays

Computer vision sits at the center of some of the most capital-intensive projects companies are betting on: autonomous vehicles, robotics, industrial inspection, medical imaging, retail analytics, and security. Recruiting data summarized in salary guides like Nexford’s overview of top-paying AI jobs flags vision specialists as consistently in demand across automotive, manufacturing, and healthcare. Senior computer vision engineers typically earn between $165,000 and $226,000 in base salary, with total compensation (bonuses, equity) often pushing that higher when you’re working on autonomous systems or high-margin industrial products.

What you actually do all day

Day to day, vision work is about turning pixels into decisions. Instead of just building models in a vacuum, you’re constantly wrestling with camera angles, lighting, motion, and edge cases. Typical responsibilities include:

- Designing and training models for object detection, segmentation, pose estimation, defect detection, or OCR on images and video.

- Collaborating with hardware and embedded teams to run models on edge devices - drones, factory cameras, in-store sensors, or vehicle rigs.

- Building and maintaining annotation pipelines so large volumes of images and frames are labeled accurately and efficiently.

- Optimizing models for latency and robustness so they work under real-world conditions: glare, occlusion, motion blur, and camera noise.
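One small piece of that detection work shows up in almost every project: scoring how well a predicted bounding box matches a labeled one. The usual measure is intersection over union (IoU). Here is a minimal sketch, assuming boxes are given as `(x_min, y_min, x_max, y_max)` tuples; the sample coordinates are made up.

```python
def iou(box_a, box_b):
    """Intersection over union for two boxes given as
    (x_min, y_min, x_max, y_max)."""
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])
    # Width and height are clamped at zero when the boxes do not overlap.
    intersection = max(0, ix_max - ix_min) * max(0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union else 0.0


predicted = (0, 0, 10, 10)
labeled = (5, 5, 15, 15)
print(iou(predicted, labeled))      # partial overlap
print(iou(predicted, predicted))    # identical boxes
print(iou(predicted, (20, 20, 30, 30)))  # no overlap
```

A score of 1.0 means a perfect match and 0.0 means no overlap. Evaluation pipelines typically count a detection as correct only when its IoU with a labeled box clears a chosen cutoff.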

The skills and tools behind real-world vision systems

To be effective here, you need a mix of ML fundamentals and an appreciation for the messy physics of cameras and sensors. The core toolkit usually includes:

- Languages: Python for research and prototyping; C++ is common when you need performance on edge devices.

- Frameworks: PyTorch or TensorFlow for model development; OpenCV for classic image-processing operations.

- Model families: Convolutional neural networks, Vision Transformers, and specialized detectors like YOLO or Detectron-style architectures.

- Infra: GPUs for training, plus deployment toolchains for edge (NVIDIA Jetson, mobile frameworks) or cloud video pipelines.

How to start if you’re new to AI

If you’re coming from software, engineering, or even a more hands-on domain like manufacturing or healthcare, computer vision can be a very tangible entry point into AI. A practical sequence is to begin with image classification (e.g., identifying product types), then move to simple detection (locating objects in an image), and finally tackle small end-to-end projects - like a quality-check tool that flags damaged items from photos. As skills reports from organizations like the Swiss School of Business and Management note, vision sits among the AI specialties where employers care less about a traditional CS pedigree and more about whether you can show working systems that solve real business problems, whether that’s safer vehicles, fewer defective parts, or faster diagnoses.

AI Ethics, Risk & Governance

When AI starts influencing who gets hired, which loan applications are flagged, or how medical cases are triaged, “move fast and break things” stops being cute. That’s why AI ethics, risk, and governance roles have exploded. Industry analyses cited by professional bodies report that organizations now request over 100,000 professionals with AI ethics expertise every year, and standard salary bands have settled in the $120,000-$180,000 range. Just as important: about 59% of business leaders say they’re willing to pay above those bands for people who can keep them on the right side of regulators and public opinion.

Why demand is spiking

This surge isn’t just about fear of bad PR. It’s driven by new and evolving AI regulations, stricter enforcement of data protection laws, and the very real risk of biased or opaque models making high-stakes decisions. As one overview of future-ready AI skills from the Global Skill Development Council notes, governance, compliance, and responsible use are now considered core capabilities for any organization scaling AI, not optional extras. At the same time, surveys show that around 60% of American companies expect some form of layoffs amid automation and economic uncertainty, according to reporting from Money Talks News. That combination - regulatory pressure plus workforce disruption - makes AI risk management a board-level concern.

What you actually do all day

On the ground, this work looks a lot less like writing algorithms and a lot more like building guardrails and playbooks people will actually follow. Typical responsibilities include:

- Creating and maintaining AI governance frameworks: model inventories, approval workflows, documentation standards, and risk registers.

- Running bias, fairness, and robustness assessments on models before and after deployment, and coordinating remediation with engineering teams.

- Translating regulations and internal policies into concrete requirements for data collection, model training, monitoring, and human oversight.

- Leading incident response when an AI system misbehaves - investigating what went wrong, communicating with stakeholders, and updating controls.

- Training non-technical staff on responsible AI use so “shadow AI” doesn’t grow faster than your ability to manage it.
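
To make the bias and fairness assessments mentioned above a little more concrete, here is a minimal sketch of one common first-pass check: comparing selection rates across groups. The function names, the group labels, and the sample decisions are all made up for illustration. A real assessment would use the metrics and thresholds your legal and compliance teams agree on.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of favorable decisions per group.

    `records` is an iterable of (group, decision) pairs, where decision
    is 1 for a favorable outcome and 0 otherwise.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, decision in records:
        totals[group] += 1
        positives[group] += decision
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0


# Hypothetical decisions for two groups (illustrative data, not real results).
sample = [("A", 1)] * 6 + [("A", 0)] * 4 + [("B", 1)] * 3 + [("B", 0)] * 7
rates = selection_rates(sample)
ratio = disparate_impact_ratio(rates)
print(rates, round(ratio, 2))
```

A ratio well below 1.0 does not prove a model is unfair, but it is the kind of signal that would typically trigger a closer review; a threshold of 0.8 is a commonly cited rule of thumb in hiring contexts.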

Skills, backgrounds, and how to pivot in

The sweet spot in this lane is a blend of AI literacy, risk awareness, and people skills. You need to understand how models are built and where they fail, but you don’t have to be the one coding them. Instead, you pair that understanding with knowledge of privacy and sector rules, plus the ability to negotiate across legal, compliance, engineering, and business teams. People successfully move into these roles from compliance, legal, HR, security, operations, and even social sciences - especially if they’ve already worked with audits, policy, or change management. If you’re the person in the room who naturally asks “what could go wrong, who gets hurt, and how do we prove we did the right thing?”, AI ethics, risk, and governance isn’t a detour from your career story; it’s a way to turn that instinct into a full-time, well-paid job.

Cloud and Distributed AI Engineering

Almost every serious AI system now lives in the cloud. Models are trained on distributed clusters, deployed behind APIs, and scaled up and down in response to traffic and cost pressures. That’s why cloud and distributed AI engineering has become its own lane: the intersection of cloud architecture, distributed systems, and AI workloads. Surveys report that around 88% of tech leaders now say cloud skills are required to fuel their AI adoption, and roles that blend cloud with ML regularly land in the $140,000-$200,000 base salary range, with senior engineers climbing higher. Add in that job posts mentioning AI skills command an average 28% salary premium, and you can see why this specialty shows up near the top of every “most in-demand” list.

What you actually do all day

On a typical day, a cloud or distributed AI engineer is less focused on tweaking model architectures and more on making sure those models can run reliably and affordably at scale. Your work might include:

- Designing cloud architectures for AI workloads: VPCs, subnets, security groups, data stores, and GPU/TPU clusters.

- Standing up and tuning autoscaling policies so model-serving endpoints handle traffic spikes without melting your budget.

- Integrating LLM APIs or hosted models into existing microservice ecosystems, including authentication, rate limiting, and logging.

- Implementing observability for AI services: metrics, tracing, and alerting that capture both system health and model-specific signals like latency or error spikes.

- Collaborating with data engineers and MLOps specialists to coordinate storage, networking, and deployment pipelines across teams.
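
As a rough illustration of the autoscaling work described above, the sketch below applies the proportional scaling rule documented for the Kubernetes Horizontal Pod Autoscaler: scale the replica count by the ratio of the observed metric to its target, then round up. The function name, the replica limits, and the example numbers are assumptions for illustration, and real autoscalers add tolerances and cooldowns on top of this.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=20):
    """Proportional scaling: replicas grow with observed load over target."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp so a traffic spike cannot scale the budget without limit.
    return max(min_replicas, min(max_replicas, raw))


# Hypothetical metric: requests per second handled by each replica.
print(desired_replicas(4, current_metric=90, target_metric=60))    # scale up
print(desired_replicas(4, current_metric=15, target_metric=60))    # scale down
print(desired_replicas(10, current_metric=300, target_metric=60))  # hits the cap
```

The `max_replicas` cap is where the "without melting your budget" part comes in: it trades some latency under extreme load for a predictable ceiling on spend.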

Skills and tools for distributed AI systems

Compared to general cloud engineering, this lane adds a strong awareness of how AI workloads behave: they’re bursty, GPU-hungry, and often latency-sensitive. You’re still working with familiar building blocks, but using them in AI-aware ways.

- Cloud platforms: Deep experience with at least one major provider (AWS, Azure, or GCP), including networking, IAM, and cost controls.

- Compute and orchestration: Managed Kubernetes, container services, and sometimes serverless for glue code around models.

- AI-specific services: Managed ML platforms (like hosted training/serving or feature stores), vector-capable databases, and LLM integration points.

- Distributed systems concepts: Sharding, replication, caching, and consistency models that matter when you’re serving millions of personalized AI responses.

- Infra-as-code and CI/CD: Terraform or CloudFormation plus pipelines that treat model endpoints like any other production service.

How to break in from adjacent roles

If you’re coming from traditional cloud engineering, DevOps, or backend development, this is one of the most natural pivots into AI. A realistic path is to first deepen your cloud fundamentals, then layer on AI-specific services and patterns. According to Dice’s analysis of tech job postings, roughly half of all tech roles now expect some AI familiarity, and certifications like the AWS Machine Learning Specialty can immediately boost salaries by 10-15% for roles that mix cloud and ML. At the same time, experts interviewed by Stanford’s Institute for Human-Centered AI emphasize that the real leverage isn’t just knowing how to click through a managed ML console, but understanding how to design scalable, reliable, and cost-aware distributed systems around those models. One practical way to show that: build a small AI-powered service, deploy it on a cloud provider using infra-as-code, add monitoring and autoscaling, and document the tradeoffs you made. That single project often speaks louder than a long list of buzzwords on a résumé.

| Role focus | Primary concern | Key skills | Typical base range |
|---|---|---|---|
| Cloud Engineer | General app reliability & cost | VPCs, IAM, compute, storage | $120,000-$160,000 |
| Cloud & Distributed AI Engineer | Scalable, efficient AI workloads | GPUs/TPUs, ML services, distributed design | $140,000-$200,000+ |
| AI Platform Engineer | End-to-end AI infrastructure | Kubernetes, MLOps, multi-tenant model serving | $160,000-$220,000+ |

Applied Generative AI for Marketing, Sales & RevOps

Not everyone wants to become an ML engineer, and you don’t have to. For a lot of people in marketing, sales, and revenue operations, the highest-leverage move is learning how to bolt generative AI onto work you already know how to do. Industry data shows that demand for AI skills in revenue roles surged by about 135.8% in a single year, and applied AI capabilities can boost marketing and sales compensation by roughly 43%, with senior specialists in high-performing teams reaching up to $250,000 in total pay. In other words: this is one of the fastest ways for non-engineers to turn AI into real money.

What you actually do all day

Applied gen AI for revenue teams is less about building models and more about wielding them as a force multiplier. Day to day, you might:

- Use generative models to draft and A/B test emails, ads, landing pages, and outreach scripts, then refine prompts based on performance data.

- Automate personalized sequences at scale - prospecting, follow-ups, renewal nudges - without spamming people with generic copy.

- Summarize sales calls, extract key objections and next steps, and push those insights into your CRM or playbooks.

- Analyze funnel metrics and pipeline data with AI to surface patterns: which segments respond to which offers, where deals stall, and what messaging resonates.

- Act as the in-house “AI champion,” creating prompt libraries and mini-playbooks your whole go-to-market team can reuse.
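
A shared prompt library like the one in the last bullet can start very small. The sketch below uses Python’s built-in `string.Template` to store reusable prompts with named blanks. The template names, wording, and fields are hypothetical examples, not a recommended standard.

```python
from string import Template

# Hypothetical prompt library: names and wording are illustrative only.
PROMPT_LIBRARY = {
    "cold_outreach": Template(
        "Write a $tone first-touch email to a $role at a $industry company. "
        "Mention $pain_point and keep it under $max_words words."
    ),
    "call_summary": Template(
        "Summarize this sales call transcript. "
        "List objections and next steps.\n\n$transcript"
    ),
}


def render_prompt(name, **fields):
    """Fill a named template; raises KeyError if a required field is missing."""
    return PROMPT_LIBRARY[name].substitute(**fields)


prompt = render_prompt(
    "cold_outreach",
    tone="friendly",
    role="VP of Sales",
    industry="logistics",
    pain_point="slow lead follow-up",
    max_words=120,
)
print(prompt)
```

Using `substitute` rather than `safe_substitute` is a deliberate choice here: a missing field fails loudly instead of sending a half-filled prompt to the model.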

Skills, tools, and roles this unlocks

You don’t need to write production-grade code to be valuable here, but you do need to understand both your revenue workflows and how to guide AI effectively. That usually looks like:

- AI tools: Daily use of LLMs (for copy, summaries, idea generation) and image/video generators for creative variants.

- Revenue platforms: Hands-on with CRMs, marketing automation systems, email tools, and analytics dashboards, plus light scripting or no-code automation.

- Experimentation mindset: Comfort running A/B tests, reading basic stats, and iterating prompts based on open, click, reply, and conversion rates.

- Communication: The ability to explain what’s AI-generated, what’s been human-edited, and how you validated it before it hit customers.
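
For the experimentation piece, “reading basic stats” often comes down to asking whether the gap between two variants is bigger than noise. Here is a minimal sketch of a two-proportion z-test using only the standard library. The reply counts are invented for illustration, and the test assumes reasonably large samples and independent sends.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Compare conversion rates of variants A and B.

    Returns (rate_a, rate_b, z, two_sided_p_value).
    """
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("no variation in outcomes; test is undefined")
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return rate_a, rate_b, z, p_value


# Hypothetical email test: 1,000 sends per variant, counting replies.
rate_a, rate_b, z, p_value = two_proportion_z_test(40, 1000, 62, 1000)
print(f"A={rate_a:.1%} B={rate_b:.1%} z={z:.2f} p={p_value:.3f}")
```

A small p-value suggests the difference is unlikely to be chance alone, but it says nothing about whether the lift is large enough to matter commercially, so it is worth reporting the raw rates alongside it.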

| Role type | How AI changes it | Applied AI focus | Impact on comp |
|---|---|---|---|
| Marketing specialist | From manual content production to high-volume testing | Ad/email variants, landing pages, creative briefs | Higher bands driven by ~43% uplift in AI-skilled roles |
| Sales / SDR | From hand-built sequences to personalized, AI-assisted outreach | Prospecting, follow-ups, call summaries | Stronger pipeline → higher commissions + role premiums |
| RevOps / GTM ops | From reporting to automated insight generation | Funnel analysis, playbooks, workflow automation | Movement into “AI ops” specialist and leadership tracks |

How to start without becoming an engineer

The cleanest on-ramp is to treat your current job as a sandbox. List your most repetitive or analytical tasks, then design small AI experiments around them: prompt templates for cold outreach, AI-assisted campaign briefs, or automated call summaries feeding into your CRM. Document what you tried, how you measured it, and the business results. As one analysis on AI skills everyone will need puts it, the real value comes from professionals who can “manage the machines,” not just stare at their outputs. That is exactly what applied generative AI for marketing, sales, and RevOps is about: you keep your domain expertise, add a powerful new toolset, and turn yourself into the person who can move the revenue needle faster than your peers.

“The ability to design, supervise, and interpret AI-assisted workflows will define the most resilient careers, especially in roles where revenue impact can be clearly measured.” - AI workforce strategists, eLearning Industry

Build your own AI skill watchlist

By now you’ve seen enough Top 10 lists to know the pattern: bright tiles, big promises, and very little help with the real question - “But is this the right thing for me, right now?” The same thing happens with AI skills. Rankings are built from signals like demand, salary, and growth, not from your starting point, risk tolerance, or what you actually enjoy doing all day. Building your own AI skill watchlist is about flipping that around: use the rankings as input, not as auto-play.

Step 1: Pick your genre, not the #1 tile

Instead of asking “What’s the top-paying AI skill?”, start with “What kind of work do I want to be doing?” The four genres from earlier - Builders (coding and models), Integrators (data and systems), Governors (risk and policy), and Translators (business roles using AI) - are your categories on the streaming home screen. Within each genre, multiple skills are in demand; a report on AI job trends from SignalHire highlights everything from LLM engineering and data pipelines to AI product roles and governance as distinct, high-opportunity paths. The trick is to pick the one or two that match your temperament: deep tech vs. cross-functional, individual contributor vs. stakeholder-heavy, experimental vs. highly regulated.

Step 2: Choose 1-2 headliners and 1-2 supporting skills

Once you know your genre, pick a couple of “must-watch” skills instead of trying to binge everything. For a Builder, that might be data engineering plus MLOps; for a Translator, AI literacy plus applied gen AI for marketing or sales. Then add one or two supporting skills that make those headliners more valuable - think RAG for someone focused on LLMs, or ethics and governance for an NLP specialist working in finance or healthcare. Lists that aggregate “high-income skills” for modern careers, like Coursera’s breakdown of high-income skills to learn, consistently stress depth-plus-complementarity over shallow familiarity with a dozen buzzwords.

Step 3: Plan a single learning season

Instead of vaguely “learning AI,” scope a specific season: 3-6 months where you commit to a lane, a couple of skills, and 2-3 concrete projects you can show. That might be standing up a small RAG-based internal helpdesk, deploying a simple model with monitoring, or building a prompt library and reporting system that measurably lifts campaign performance. The goal isn’t to become “done” with AI - none of us will - but to move from zero to “I can talk through real work I’ve shipped” in a focused block of time.

Step 4: Measure, then iterate as the algorithm changes

Finally, treat your AI skill stack like a living watchlist, not a tattoo. Experts tracking AI labor trends warn that roughly 37% of companies plan to replace certain roles with AI by the end of this cycle, which makes “managing the machines” a vital survival skill rather than a nice bonus. Every few months, check in on three things: which skills you’re actually using at work, what the market is rewarding (titles, postings, salary bands), and how well your current path fits your values and energy levels. If something’s off - too much risk, not enough human interaction, or you’re bored out of your mind - swap a skill out and bring a new one in. The point isn’t to chase every new trend; it’s to make sure your career feels more like a carefully curated lineup than whatever happens to auto-play next.

Frequently Asked Questions

Which AI skill will give me the biggest salary bump in 2026?

Specialized infrastructure and operations roles - MLOps and AI platform engineering - tend to pay the most, with many positions reporting total compensation from roughly $160,000 to $350,000+. Custom LLM specialization also commands large premiums (about a 47% boost on average), and job posts that mention AI offer a 28% salary premium on average.

How did you rank these top AI skills?

The list was ordered by measurable market signals: employer demand, salary impact, growth trajectory, and breadth of roles a skill unlocks. Those signals are grounded in recruiter and labor-data trends - for example, roughly 50% of tech jobs now require AI skills and AI-related job posts show an average 28% pay premium.

Which AI skills are most realistic if I don’t have a CS degree?

Start with AI literacy and prompt engineering, which travel across roles and can drive 35-43% uplifts in non-technical fields, then add practical patterns like RAG (retrieval-augmented generation). Structured bootcamps can get you there quickly (e.g., Nucamp’s 15-week AI Essentials or 25-week entrepreneurial track), and RAG or applied gen-AI projects make great portfolio pieces without a research background.

How should I choose which AI skill to focus on first?

Pick a “genre” that fits how you like to work - Builders (models), Integrators (data/systems), Governors (policy/risk), or Translators (business users) - then choose 1-2 headliner skills plus 1-2 supportive ones. Aim for depth over breadth: employers reward complementary skills (remember the 28% AI pay premium) and a focused 3-6 month learning season with 2-3 projects.

How long until I can be job-ready in these AI skills?

Timelines vary by lane: AI literacy and prompt engineering can be learned in about 15-25 weeks with focused practice, while data-engineering fundamentals often break into 8-12 week milestones per step and MLOps or custom LLM work typically requires additional months of hands-on projects. Practical, project-based training plus public documentation of your work is the fastest route - Nucamp reports program durations like 15-25 weeks and employment outcomes that help beginners make that transition.

Irene Holden

Operations Manager

Former Microsoft Education and Learning Futures Group team member, Irene now oversees instructors at Nucamp while writing about everything tech - from careers to coding bootcamps.