How to Become an AI Engineer in the US in 2026 (Step-by-Step Path)

By Irene Holden

Last Updated: January 4th 2026

Quick Summary

Yes - you can become an AI engineer in the U.S. in 2026 by following a systems-first, step-by-step path: lock down Python and practical math, master core ML and PyTorch/transformers, learn MLOps and cloud deployment, and ship 3-5 end-to-end projects; a realistic timeline for career-switchers studying part-time is about 12-18 months. Demand is strong - AI/ML roles grew roughly 143% year-over-year - and entry-level total compensation typically falls between $100,000 and $150,000, so focus on deployable systems, monitoring, and measurable impact in your portfolio.

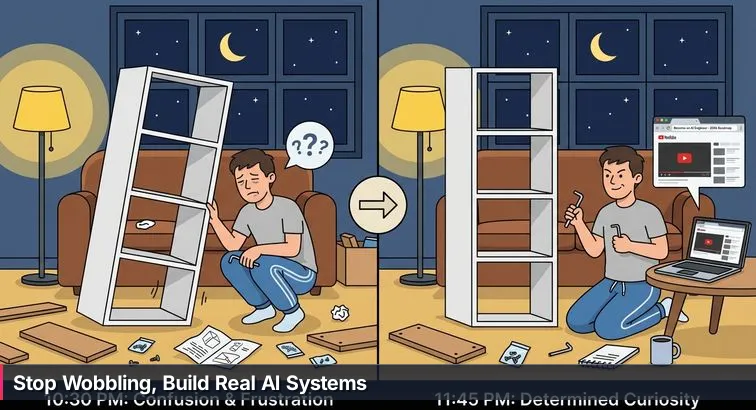

Picture it: it’s 11:47 p.m., you’re on the floor surrounded by boards and tiny screws, and your new bookcase is somehow wobbling in three different directions. The instruction sheet insists there are only 18 steps, but you’ve clearly hit step 19: you did everything “right”…and it still isn’t working. That’s how a lot of people feel after following a glossy “Become an AI engineer in 6 months” roadmap, bingeing tutorials, and then freezing when they try to ship a real system or pass an interview.

Most roadmaps are like those diagrams that assume a perfectly flat floor and correctly labeled boards. They walk you through Python, linear algebra, “then” neural networks, “then” LLMs, as if your background, time, and energy are perfectly standardized. They rarely account for extra screws and mislabeled boards - maybe you’re missing some math, your coding is rusty, or you’re working full-time and can only study at night. They also skip the part where you stop every few steps, press on the shelf, and check for wobble: code reviews, metrics, small deployments, and honest feedback from users or mentors.

Why most AI roadmaps wobble

The usual AI path assumes you’ll go in a straight line: course → project → job. In reality, the gap is between “I trained a model in a notebook” and “I own a system that real users depend on.” As mentors in systems-focused guides like Machine Learning Mastery’s future-proofing roadmap keep pointing out, the world already has plenty of people who can copy-paste a TensorFlow example. What’s missing are engineers who can think in terms of systems: data pipelines, deployment, monitoring, costs, and failure modes. Traditional roadmaps barely mention that shift, so learners stack more and more “shelves” of theory on a frame that was never anchored.

This is also where mindset gets distorted. When the roadmap doesn’t match reality, people blame themselves instead of the plan: “Maybe I’m just not smart enough for AI,” or “I guess you really do need a PhD.” But industry-focused breakdowns like the University of San Diego’s AI careers overview stress behaviors over genius: consistent practice, shipping, and learning to integrate AI into existing software and business workflows. The missing piece isn’t raw IQ; it’s a habit of building, checking, and adjusting as you go.

The stakes in AI engineering right now

None of this would matter as much if AI were just another nice-to-have skill. It isn’t. AI/ML roles are exploding; one analysis reports 143% year-over-year growth in AI/ML engineer positions, outpacing most other tech jobs. Compensation has climbed accordingly. Across U.S. markets, aggregated data from Glassdoor, Built In, and Levels.fyi shows that entry-level AI engineers typically earn around $100,000-$150,000 in total compensation, mid-level engineers around $150,000-$250,000, and senior or highly specialized roles can reach $250,000-$500,000+.

| Level | Typical Experience | Typical Total Compensation (U.S.) |
|---|---|---|
| Entry-level AI Engineer | 0-2 years | $100,000-$150,000 |
| Mid-level AI Engineer | 3-7 years | $150,000-$250,000 |
| Senior/Specialized AI Engineer | 7+ years | $250,000-$500,000+ |

That kind of upside is why so many people grab the nearest “instruction sheet” and start building. But it’s also why a wobbly roadmap hurts: months of effort, thousands of dollars in courses, and still no stable, production-ready skill set. As Salesforce CEO Marc Benioff put it when describing how companies are changing, “We are in an AI and data revolution.” In that kind of market, employers aren’t just looking for model tinkerers; they’re looking for people who can design, assemble, and anchor AI systems that support real workflows.

How this guide keeps your “bookcase” steady

This guide is designed less like a poster of pretty diagrams and more like a build-along with constant stability checks. Each step ties specific skills - Python, core ML, deep learning, transformers, RAG, MLOps - back to concrete actions: tightening a joint, checking for wobble, or finally bolting the bookcase to the wall. Instead of rushing you from “Hello, world” to “Build a chatbot,” it forces you to pause, ship something small, measure whether it holds weight, and only then add another shelf.

Most importantly, it treats you as a working adult, not a blank-slate undergrad. It assumes your background is a bit messy, that you’ll hit missing screws and mislabeled boards, and that what gets you hired as an AI engineer is not a perfectly followed checklist but a pattern of behaviors: seeking feedback, integrating theory with practice, and taking responsibility for systems in production. If you’re willing to build that way - deliberately, with regular “is this level?” checks - this roadmap can help you turn a pile of AI parts into a solid, six-figure career instead of another late-night wobble.

Steps Overview

- Why most AI roadmaps wobble and how this guide steadies you

- Before you start: prerequisites, tools, and the right mindset

- Choose your AI engineer target and set a realistic timeline

- Build strong Python and software engineering foundations

- Learn the practical math that actually matters

- Master core machine learning with scikit-learn

- Move into deep learning, transformers, and LLMs

- Learn MLOps, deployment, and production monitoring

- Build 3-5 impactful, end-to-end portfolio projects

- Choose an education path that fits your budget and background

- Build public credibility and run a focused job search

- Verify your skills and troubleshoot common wobbles

- Common Questions


Before you start: prerequisites, tools, and the right mindset

Before you stack another tutorial on your plate, it’s worth pausing to check that your “floor” is level: the baseline skills, tools, and mindset that will keep everything from wobbling later. This isn’t about being a genius; it’s about making sure you have just enough math, coding comfort, and time carved out so the roadmap ahead is challenging but realistic.

Minimum skills to bring with you

You do not need a PhD to become an AI engineer, but you’ll move faster and suffer less if a few basics are in place. Roles highlighted in Coursera’s overview of AI jobs show people coming from software, data, and even non-tech backgrounds, but they almost all share a core foundation in math, logical thinking, and reading technical English.

- Comfort with basic algebra and high-school geometry (solving for x, manipulating equations, understanding angles and areas)

- Ability to learn independently and stick with a plan for 12-18 months (especially if you’re working or caregiving at the same time)

- Intermediate English reading ability so you can digest docs, blog posts, and research summaries

If your math is rusty, you don’t need to halt everything. Instead, plan to add 2-3 hours a week for review while you learn to code, and focus on what feeds directly into models: functions, graphs, vectors, and probability. Think of this as checking that the wall you’re about to drill into is solid, not obsessively repainting the whole room.

Your starter toolkit (hardware + software)

Once the basics are in place, you need a small but solid toolkit so you can actually build and test things without fighting your own machine. Most entry AI roles listed in practical guides like NetCom Learning’s AI engineer career guide assume you’re comfortable in a modern Python environment and at least one major cloud.

- A laptop with at least 16 GB RAM (8 GB can work, but you’ll hit limits quickly with local experiments)

- Python 3.10+ installed (check with python --version or python3 --version)

- A code editor like VS Code

- Git and GitHub for version control and portfolio

- A free or low-cost cloud account (AWS, Azure, or GCP all work fine)

- Access to modern AI tools and APIs (OpenAI, Anthropic, or open-source models via Hugging Face)

Then run three quick setup checks:

- Verify you can run a simple Python script from the terminal.

- Create a GitHub account and push a “hello-world” repo.

- Sign up for a cloud free tier and deploy anything small (even a static page) so the dashboard feels familiar.

Pro tip: don’t overspend on hardware. A mid-range laptop plus cloud credits will carry you through almost all beginner and intermediate projects; the point is to learn how to use GPUs in the cloud, not to turn your apartment into a data center.

The mindset shift: from step-follower to system-owner

The biggest prerequisite isn’t technical; it’s how you think about the work. Many AI learners treat roadmaps like IKEA diagrams: follow the boxes, don’t ask questions. But modern AI engineering, as described in system-focused paths like NetCom Learning’s guide, expects you to think like an engineer who owns a system end-to-end, not a student completing assignments. That means asking “How will this behave in production?” as early as “How do I import this library?”

That mindset is showing up even in places you might not expect. When the U.S. Army formalized a dedicated AI/ML career path, they framed it as a long-term, deliberate build rather than a quick skill add-on:

“This is a deliberate and crucial step in keeping pace with present and future operational requirements. We're building a dedicated cadre of in-house experts who will be at the forefront of integrating AI and machine learning across our warfighting functions.” - Lt. Col. Orlandon Howard, U.S. Army spokesperson

Bring that same attitude to your own journey. You’re not just trying to “learn some AI.” You’re committing to become the person who can design, debug, and improve systems other people rely on. If you can accept that this will take months of steady effort, regular feedback, and a lot of small shipped projects, you’ve got the right mindset to start tightening the first screws.

Choose your AI engineer target and set a realistic timeline

Choosing an AI path is a bit like deciding whether you’re building a small corner shelf or a floor-to-ceiling bookcase that dominates the room. “I just want an AI job” sounds flexible, but in practice it leads to a jumble of boards: a bit of NLP here, some computer vision there, and nothing sturdy enough to impress a hiring manager. You’ll move faster if you decide early what kind of AI engineer you’re aiming to become and then set a timeline that matches your reality, not someone else’s highlight reel.

Pick a target flavor of AI engineer

Career maps like the AI engineer roadmap from Turing College and similar guides break “AI engineer” into several overlapping but distinct roles. Each uses many of the same tools, but the day-to-day work - and the kinds of projects you should build - look different.

| Role Focus | What You Mostly Do | Key Tools & Skills |
|---|---|---|
| LLM App / AI Product Engineer | Build apps powered by LLMs, RAG, and agents | Python, APIs, LangChain/LlamaIndex, vector DBs, frontend basics |
| Core ML Engineer | Train and optimize models on structured data | Scikit-learn, XGBoost, PyTorch, statistics, feature engineering |
| MLOps / AI Infrastructure Engineer | Deploy, scale, and monitor models in production | Docker, Kubernetes, cloud (AWS/GCP/Azure), CI/CD, monitoring |
| CV / NLP Specialist | Go deep on images, video, or language | Transformers, CNNs, Hugging Face, domain-specific datasets |

As a quick rule of thumb: if you love UX and talking to users, aim for LLM app / product engineering. If systems, infra, and observability make you happy, lean toward MLOps. If you’re drawn to math-heavy modeling, optimization, or a specific domain like vision, core ML or specialization can be a better fit. Industry breakdowns such as IntuitionLabs’ AI engineer job market analysis show demand across all of these, but hiring managers expect focus: a portfolio built around one narrative beats a scattered set of unrelated experiments.

Turn that target into a 12-18 month plan

Once you’ve picked a direction, you can turn “someday I’ll work in AI” into a timeline that fits your life. For most beginners and career-switchers studying part-time, a realistic range is 12-18 months. Faster is possible if you already write software professionally or have a strong math/CS background; slower is fine if you’re balancing intense work or family commitments.

- Months 0-3: Python + software fundamentals, Git/GitHub, basic terminal and cloud comfort.

- Months 3-6: Core ML with scikit-learn, small tabular projects, one deployed API.

- Months 6-12+: Specialization aligned with your target role (LLMs/RAG, deep learning, or MLOps), plus 2-3 portfolio-grade projects.

Think of each phase as adding another shelf only after the previous one holds weight: you don’t move on from Python until you’ve shipped a small tool, and you don’t call yourself “LLM-focused” until you’ve built at least one real RAG or agentic system that someone else can use. The wrong move here is to keep resetting the plan every month (“Now I’m doing trading bots… now it’s computer vision… now it’s reinforcement learning”). The right move is to write a single guiding sentence and let it steer your choices:

“By December 2026, I want to be an AI engineer focused on LLM-powered applications and RAG systems at a U.S. startup or mid-size company.”

Build strong Python and software engineering foundations

Python is still the heartbeat of AI. Almost every AI engineer job listing in 2026 expects solid Python plus basic software engineering, and roadmaps like the AI/ML engineer roadmap for beginners put “strong Python” as the first non-negotiable step. This is the part where you’re not just opening the box and admiring the parts; you’re learning how to use the drill, the level, and the screwdriver so every shelf you add later doesn’t sag.

What “strong Python” actually means

At this stage, you’re aiming far beyond copy-pasting scripts. Guides like Turing College’s AI engineer roadmap (and similar industry breakdowns) assume you can move comfortably through everything from basic syntax to more advanced language features. Concretely, that looks like:

- Python basics to advanced: data types and control flow, functions, modules and packages, OOP (classes, inheritance, composition), decorators, generators, list comprehensions, and asynchronous programming with asyncio and libraries like aiohttp for non-blocking API calls.

- Core libraries: pandas and numpy for data manipulation, plus requests for HTTP calls.

- Core CS/engineering: data structures (lists, dicts, sets, queues), an intuitive sense of time/space complexity, writing and running unit tests with pytest, and clean code practices (meaningful names, small focused functions).
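The asyncio point is easier to see in code. Below is a minimal sketch of running several I/O-bound calls concurrently. It uses a hypothetical fake_fetch coroutine that only sleeps in place of a real aiohttp request, so it runs offline. In a real project you would replace the sleep with a call through an aiohttp.ClientSession.

```python
import asyncio
import time


async def fake_fetch(name: str, delay: float) -> str:
    """Hypothetical stand-in for an HTTP call (e.g. via aiohttp); it only sleeps."""
    await asyncio.sleep(delay)  # simulated network latency
    return f"{name}: done"


async def fetch_all(calls: dict[str, float]) -> list[str]:
    # gather() runs the coroutines concurrently and returns results in input order.
    return await asyncio.gather(*(fake_fetch(name, delay) for name, delay in calls.items()))


if __name__ == "__main__":
    start = time.perf_counter()
    results = asyncio.run(fetch_all({"weather": 0.3, "news": 0.3, "stocks": 0.3}))
    print(results)
    print(f"elapsed: {time.perf_counter() - start:.2f}s")  # roughly 0.3s, not 0.9s
```

asyncio.gather overlaps the waits, so three 0.3-second calls should finish in about 0.3 seconds rather than 0.9. That is the main appeal for LLM apps that fan out to several APIs at once.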

Turn concepts into tiny, testable projects

To keep the “bookcase” from wobbling later, you need to press on each shelf as you install it. That means turning topics into small, shippable projects instead of endless tutorials. After you’re comfortable with basic syntax and functions, set up a simple environment (python -m venv .venv, source .venv/bin/activate or .venv\Scripts\activate on Windows, then pip install requests pandas pytest) and build:

- A CLI app that calls an LLM API and logs responses to a file (great practice for HTTP requests and error handling).

- A simple data pipeline: read a CSV → clean data with pandas → write cleaned output to a SQLite database.

- A small REST API using FastAPI that returns random quotes, then write at least one test with pytest and version it with Git (git init, git add ., git commit -m "initial api").
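The data-pipeline project can start as small as the sketch below. It uses a made-up customer CSV, embedded as a string so the example is self-contained. The cleaning rules are illustrative:

- drop exact duplicates
- normalize category labels
- fill missing numbers with the column median

Your real rules will depend on your data.

```python
import io
import sqlite3

import pandas as pd

# Hypothetical raw export; in practice: df = pd.read_csv("customers.csv")
RAW_CSV = """customer_id,age,plan,monthly_spend
1,34,basic,20.5
2,,premium,
3,45,Premium,80.0
3,45,Premium,80.0
4,29, basic ,25.0
"""


def clean(df: pd.DataFrame) -> pd.DataFrame:
    df = df.drop_duplicates().copy()  # remove exact duplicate rows
    df["plan"] = df["plan"].str.strip().str.lower()  # " basic " / "Premium" -> "basic" / "premium"
    for col in ["age", "monthly_spend"]:
        df[col] = df[col].fillna(df[col].median())  # illustrative rule: median imputation
    return df


def run_pipeline(raw_csv: str, db_path: str = ":memory:") -> sqlite3.Connection:
    df = pd.read_csv(io.StringIO(raw_csv))
    conn = sqlite3.connect(db_path)
    # pandas can write straight to a sqlite3 connection; replace the table on each run.
    clean(df).to_sql("customers", conn, if_exists="replace", index=False)
    return conn


if __name__ == "__main__":
    conn = run_pipeline(RAW_CSV)
    for row in conn.execute("SELECT * FROM customers"):
        print(row)
```

Keeping clean() as a separate function makes it easy to cover with a pytest test before you wire the pipeline to real files.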

Pro tip: treat Git and testing as “must use,” not “nice to have.” Guides like the Ideas2IT AI engineer roadmap emphasize that almost all real AI teams rely on Git workflows and automated tests; if you skip them now, you’ll feel it hard when you start deploying models.

Where Nucamp fits into this foundation

If you want structure instead of piecing everything together alone, Nucamp’s Back End, SQL & DevOps with Python bootcamp (16 weeks, $2,124) is designed to line up directly with this stage. It covers Python programming and scripting, SQL databases, and DevOps and cloud deployment basics, so you’re not just learning the language in isolation but also how to run it in realistic environments. That’s a very different experience from many $10k+ bootcamps that race you into “AI” without tightening the software joints first.

- Common mistakes: staying in “tutorial hell” instead of shipping small projects, avoiding Git because it’s “confusing” (then struggling later with collaboration), and skipping testing and logging, which are crucial once you deploy models.

Learn the practical math that actually matters

Those walls of symbols and Greek letters you see in AI courses can feel like a secret language. It’s tempting to either run straight at them (and drown in proofs) or run away and hope PyTorch will hide the math for you. Neither works for long. You don’t need to become a mathematician, but you do need math fluency so that when a model misbehaves, you can reason about why. Career guides like the AI engineer job outlook from 365 Data Science consistently list linear algebra, calculus, and probability as core skills, right alongside Python and ML frameworks.

Focus on the math that shows up in your code

Instead of trying to “learn all the math,” zoom in on the pieces that are directly wired into modern ML and deep learning. Roadmaps such as the “Machine Learning Roadmap 2025 - 18 skills you need” put these three pillars front and center because they’re what you see inside optimizers, loss functions, and evaluation metrics every day:

- Linear Algebra: vectors, matrices, matrix multiplication, and eigenvalues/eigenvectors. These underpin embeddings, attention mechanisms, and the way networks transform data layer by layer.

- Calculus: derivatives, gradients, and gradient descent. This is the math of “how do we nudge parameters to make the loss smaller?” and shows up anytime you train a neural network.

- Probability & Statistics: random variables, common distributions, conditional probability and Bayes’ rule, confidence intervals, and hypothesis testing. These power your intuition for uncertainty, overfitting, and whether an improvement is real or just noise.
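A quick simulation ties the probability pillar back to evaluation noise. This sketch assumes fair coins and uses NumPy. It flips 10 coins many times and compares the empirical head counts to the binomial formula. It then reads heads/n as if it were accuracy on a 10-example test set.

```python
import math

import numpy as np


def binomial_pmf(k: int, n: int, p: float) -> float:
    """P(X = k) for X ~ Binomial(n, p)."""
    return math.comb(n, k) * p**k * (1 - p) ** (n - k)


def simulate_heads(n_flips: int, n_trials: int, p: float = 0.5, seed: int = 0) -> np.ndarray:
    rng = np.random.default_rng(seed)
    flips = rng.random((n_trials, n_flips)) < p  # True = heads
    return flips.sum(axis=1)                     # heads per trial


if __name__ == "__main__":
    n, trials = 10, 100_000
    heads = simulate_heads(n, trials)
    empirical = np.bincount(heads, minlength=n + 1) / trials
    for k in range(n + 1):
        print(f"k={k:2d}  simulated={empirical[k]:.4f}  binomial={binomial_pmf(k, n, 0.5):.4f}")
    # Read heads/n as "accuracy on a 10-example test set" and see how much it varies.
    print(f"std of heads/n: {(heads / n).std():.3f}")
```

In theory the spread of heads/n is about 0.16 for n = 10 and p = 0.5. That is a reminder of why a metric measured on a tiny test set can swing a lot between runs.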

Turn ideas into code and pictures

To keep this from turning into another abstract textbook, treat every concept as something you’ll implement and visualize. A simple loop works well:

- Learn the idea intuitively (videos, diagrams, or a short write-up).

- Implement a tiny example in Python or NumPy.

- Connect it back to a model you care about (how it affects training, evaluation, or debugging).

For example, you can treat each topic as a mini “joint” in your AI bookcase and stress-test it with a small experiment:

- Gradients: implement linear regression “from scratch” with gradient descent and plot how the loss changes over iterations.

- Learning rate: visualize how different learning rates affect convergence (too small = painfully slow; too big = divergence).

- Probability: simulate coin flips and compare the empirical distribution to the binomial distribution, then relate that to model evaluation variance.
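The gradient and learning-rate experiments can share one script. This minimal sketch uses synthetic data generated from y = 3x + 2 plus noise. It fits a line with plain gradient descent on mean squared error and compares three learning rates.

```python
import numpy as np


def make_data(n: int = 200, seed: int = 0):
    rng = np.random.default_rng(seed)
    x = rng.uniform(-1, 1, size=n)
    y = 3.0 * x + 2.0 + rng.normal(0, 0.1, size=n)  # assumed ground truth: w=3, b=2
    return x, y


def fit_gd(x: np.ndarray, y: np.ndarray, lr: float, steps: int = 200):
    """Fit y = w*x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    losses = []
    for _ in range(steps):
        err = (w * x + b) - y
        losses.append(float(np.mean(err**2)))
        grad_w = 2.0 * np.mean(err * x)  # dMSE/dw
        grad_b = 2.0 * np.mean(err)      # dMSE/db
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b, losses


if __name__ == "__main__":
    x, y = make_data()
    for lr in (0.01, 0.1, 1.5):  # too small, reasonable, too big
        w, b, losses = fit_gd(x, y, lr)
        print(f"lr={lr:<4} w={w:.3g} b={b:.3g} loss: {losses[0]:.3g} -> {losses[-1]:.3g}")
```

On this data you should see three behaviors:

- the smallest rate still short of w = 3 after 200 steps
- the middle rate converging
- the largest rate blowing up

To get the plot, pass each losses list to matplotlib's plt.plot.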

Avoid the two classic traps

Most people who stall on math fall into one of two holes: trying to master years of pure math before touching a model, or avoiding math entirely and hoping libraries are magic. Both slow you down. Common patterns to watch for:

- Over-math mode: spending months on real analysis or advanced proofs with no coding, so nothing connects to actual ML problems.

- Formula memorization: cramming equations for activations or losses without ever implementing or graphing them.

- Math avoidance: skipping every derivation and then freezing when a model diverges because you don’t know what a gradient or variance actually means.

A more sustainable approach is to budget an extra 2-3 hours per week for targeted math, in parallel with your coding. Think “learn just enough for the next model,” not “become perfect at math first.” That way, each new shelf you add to your AI skillset is supported by joints you actually understand, not just symbols you once saw on a slide.

Master core machine learning with scikit-learn

Jumping straight into LLMs without understanding basic machine learning is like hanging your heaviest shelf on two cheap screws. It might hold for a bit, but the first real load (an interview question, a messy dataset at work) will rip it out of the wall. Guides like the AI engineer skills overview from DataTeams still put classic ML - regression, classification, clustering, feature engineering - at the core of what AI engineers are expected to know. This is where you learn how models actually learn, fail, and generalize before you start wrapping them in fancy APIs.

The core ML topics to cover

Your goal in this phase is to be comfortable taking a tabular dataset, cleaning it, choosing a reasonable model, training it, and evaluating it without hand-holding. In practice, that means using scikit-learn as your main playground:

- Supervised learning: Linear Regression, Ridge, Lasso for regression; Logistic Regression, Random Forests, and Gradient Boosted Trees for classification.

- Unsupervised learning: K-Means and DBSCAN for clustering; PCA for dimensionality reduction and visualization.

- Data prep: handling missing values, scaling/normalizing features, encoding categories, basic feature engineering.

- Evaluation: train/test splits and cross-validation with train_test_split and cross_val_score; metrics like accuracy, precision, recall, F1, ROC-AUC for classification and RMSE/MAE for regression.
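Here is one way to wire data prep and evaluation together in scikit-learn. Preprocessing is learned inside each cross-validation fold rather than on the full dataset. The column names, label rule, and missing-data rate are all hypothetical; the point is the Pipeline/ColumnTransformer structure.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.impute import SimpleImputer
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score, train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler


def make_toy_data(n: int = 500, seed: int = 0) -> tuple[pd.DataFrame, np.ndarray]:
    rng = np.random.default_rng(seed)
    df = pd.DataFrame({
        "age": rng.integers(18, 70, size=n).astype(float),
        "income": rng.normal(50_000, 15_000, size=n),
        "plan": rng.choice(["basic", "plus", "premium"], size=n),
    })
    df.loc[rng.random(n) < 0.1, "income"] = np.nan  # simulate missing values
    # Hypothetical label rule so the model has some signal to find.
    y = ((df["age"] > 40) & (df["plan"] != "basic")).astype(int).to_numpy()
    return df, y


preprocess = ColumnTransformer([
    ("num", Pipeline([("impute", SimpleImputer(strategy="median")),
                      ("scale", StandardScaler())]), ["age", "income"]),
    ("cat", OneHotEncoder(handle_unknown="ignore"), ["plan"]),
])
model = Pipeline([("prep", preprocess), ("clf", LogisticRegression(max_iter=1000))])

if __name__ == "__main__":
    X, y = make_toy_data()
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.2, random_state=42, stratify=y)
    # Cross-validate on the training split only; the test set stays untouched until the end.
    scores = cross_val_score(model, X_train, y_train, cv=5, scoring="f1")
    print(f"CV F1: {scores.mean():.3f} +/- {scores.std():.3f}")
    model.fit(X_train, y_train)
    print("held-out accuracy:", round(model.score(X_test, y_test), 3))
```

Keeping imputation and scaling inside the pipeline prevents a subtle leak. Statistics such as the median are computed from each training fold only, never from the data used to score it.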

Turn algorithms into scikit-learn projects

Install your toolkit (pip install scikit-learn pandas matplotlib) and treat each algorithm as a mini project, not just a notebook cell. The idea is to tighten one joint at a time and then lean on it:

- House price prediction: load a housing dataset into pandas, split with train_test_split, train a Linear Regression and a Random Forest, and compare RMSE/MAE on the test set.

- Churn or default classifier: pick a binary classification dataset and compare Logistic Regression vs. Gradient Boosted Trees using precision, recall, F1, and ROC curves, especially under class imbalance.

- Customer segmentation: use K-Means on a customer dataset, pick the number of clusters with the elbow method or silhouette score, and visualize clusters with PCA.
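For the segmentation project, you can automate the choice of cluster count with silhouette scores. This sketch uses make_blobs as a synthetic stand-in for customer features; real customer data will rarely separate this cleanly. It then projects the result to two dimensions with PCA for plotting.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.decomposition import PCA
from sklearn.metrics import silhouette_score
from sklearn.preprocessing import StandardScaler


def choose_k(X, k_values=range(2, 9), seed: int = 0):
    """Return the k with the highest silhouette score, plus all scores."""
    scores = {}
    for k in k_values:
        labels = KMeans(n_clusters=k, n_init=10, random_state=seed).fit_predict(X)
        scores[k] = silhouette_score(X, labels)
    return max(scores, key=scores.get), scores


if __name__ == "__main__":
    # Synthetic stand-in for customer features (spend, visits, tenure, ...).
    X, _ = make_blobs(n_samples=600, centers=4, n_features=5, cluster_std=0.5, random_state=7)
    X = StandardScaler().fit_transform(X)
    best_k, scores = choose_k(X)
    print({k: round(s, 3) for k, s in scores.items()}, "-> chosen k =", best_k)
    labels = KMeans(n_clusters=best_k, n_init=10, random_state=0).fit_predict(X)
    coords = PCA(n_components=2).fit_transform(X)  # scatter coords[:, 0] vs coords[:, 1], colored by labels
    print(coords.shape)  # (600, 2)
```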

For each project, create a clean GitHub repo and a short README that explains the problem, the data, the model choice, and the key metrics. As AI adoption grows across industries - summarized in reports like Netguru’s AI adoption statistics - hiring managers are looking for people who can talk about impact (“reduced error by X%”) not just code snippets.

Avoid the classic ML “wobbles”

This is where many learners quietly sabotage themselves. They either obsess over model choice and ignore the data, or quote a single metric without understanding what it hides. To keep your foundation solid:

- Warning: don’t skip data cleaning and feature engineering; in many real problems, they matter more than picking XGBoost over Random Forest.

- Never report only accuracy on imbalanced data; always look at precision, recall, F1, and confusion matrices.

- Use a proper test set and avoid “peeking” at it repeatedly - tune on validation or cross-validation and treat the test set as sacred.

- Add basic baselines (mean predictor for regression, majority-class classifier) so you can prove your model actually beats “dumb” approaches.
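The baseline and imbalance warnings are easy to demonstrate. This sketch uses a synthetic dataset with roughly 5% positives (make_classification with weights=[0.95, 0.05]). It compares a majority-class DummyClassifier to a class-weighted logistic regression.

```python
from sklearn.datasets import make_classification
from sklearn.dummy import DummyClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score, confusion_matrix, f1_score, precision_score, recall_score
from sklearn.model_selection import train_test_split


def evaluate(seed: int = 0) -> dict:
    # Synthetic, roughly 95/5 imbalanced binary problem (think churn or fraud).
    X, y = make_classification(n_samples=5000, n_features=20, weights=[0.95, 0.05], random_state=seed)
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.25, stratify=y, random_state=seed)
    models = {
        "majority baseline": DummyClassifier(strategy="most_frequent"),
        "logistic regression": LogisticRegression(max_iter=1000, class_weight="balanced"),
    }
    results = {}
    for name, model in models.items():
        pred = model.fit(X_train, y_train).predict(X_test)
        results[name] = {
            "accuracy": accuracy_score(y_test, pred),
            "precision": precision_score(y_test, pred, zero_division=0),
            "recall": recall_score(y_test, pred, zero_division=0),
            "f1": f1_score(y_test, pred, zero_division=0),
            "confusion": confusion_matrix(y_test, pred),
        }
    return results


if __name__ == "__main__":
    for name, r in evaluate().items():
        print(f"{name}: acc={r['accuracy']:.3f} precision={r['precision']:.3f} "
              f"recall={r['recall']:.3f} F1={r['f1']:.3f}")
        print(r["confusion"])
```

Expect the dummy model to post high accuracy (around 95%) with an F1 of zero. That is exactly why accuracy alone is misleading on imbalanced data.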

Once you can reliably take a new dataset, build a reasonable model in scikit-learn, and explain your decisions and metrics, you’ve got a sturdy middle section of the bookcase. From there, deeper neural networks and transformers become upgrades to a solid frame, not decorations hiding weak joints.

Move into deep learning, transformers, and LLMs

Once your classic ML shelves are solid, it’s time to add the heavy hardware: deep learning, transformers, and LLMs. This is the phase (roughly months 6-10 if you’re following a 12-18 month plan) where you stop treating models as black boxes and start understanding how modern systems like GPT-style models, multimodal architectures, and RAG pipelines actually work. Skills lists like “12 AI Skills in 2026 for Tech & Non-Tech Careers” from Final Round AI highlight PyTorch, transformers, and LLM orchestration as default expectations now, not nice extras, especially for roles that touch product or generative AI.

Learn deep learning basics in PyTorch

Pick PyTorch as your main framework and treat it as your new workbench. Install it and supporting tools (pip install torch torchvision torchaudio) and work through a sequence where each concept becomes a tiny, testable project: tensors and tensor operations; building simple feedforward networks; writing your own training loop with optimizers like SGD and Adam; and adding regularization such as dropout and weight decay. A classic mini-project here is MNIST digit classification from scratch in PyTorch, followed by repeating the same pipeline on a small custom dataset you collect yourself (even basic image folders). This is where you first feel what training actually means: losses decreasing, gradients flowing, and models overfitting if you don’t control them.
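The sketch below shows a training loop with the pieces named above: a feedforward network, Adam, dropout, and weight decay. To stay runnable without downloads, it uses synthetic 20-feature data with labels from a hidden linear rule instead of MNIST. For MNIST you would swap in torchvision.datasets.MNIST and flatten the 28x28 images.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset


def make_data(n: int = 1000, n_features: int = 20, n_classes: int = 3, seed: int = 0):
    g = torch.Generator().manual_seed(seed)
    X = torch.randn(n, n_features, generator=g)
    w = torch.randn(n_features, n_classes, generator=g)
    return X, (X @ w).argmax(dim=1)  # labels from a hidden linear rule


def accuracy(model: nn.Module, X: torch.Tensor, y: torch.Tensor) -> float:
    model.eval()  # disables dropout for evaluation
    with torch.no_grad():
        return (model(X).argmax(dim=1) == y).float().mean().item()


def train(epochs: int = 20, seed: int = 0):
    torch.manual_seed(seed)
    X, y = make_data(seed=seed)
    X_train, y_train, X_val, y_val = X[:800], y[:800], X[800:], y[800:]
    loader = DataLoader(TensorDataset(X_train, y_train), batch_size=64, shuffle=True)
    model = nn.Sequential(
        nn.Linear(20, 64), nn.ReLU(),
        nn.Dropout(p=0.1),          # regularization
        nn.Linear(64, 3),
    )
    loss_fn = nn.CrossEntropyLoss()
    # weight_decay adds an L2 penalty; swap in torch.optim.SGD to compare optimizers.
    optimizer = torch.optim.Adam(model.parameters(), lr=1e-2, weight_decay=1e-4)
    for epoch in range(epochs):
        model.train()
        running = 0.0
        for xb, yb in loader:
            optimizer.zero_grad()
            loss = loss_fn(model(xb), yb)
            loss.backward()             # compute gradients
            optimizer.step()            # update parameters
            running += loss.item() * len(xb)
        if epoch % 5 == 0 or epoch == epochs - 1:
            print(f"epoch {epoch:2d} train loss {running / len(X_train):.3f} "
                  f"val acc {accuracy(model, X_val, y_val):.3f}")
    return model, accuracy(model, X_val, y_val)


if __name__ == "__main__":
    train()
```

Replacing torch.optim.Adam with torch.optim.SGD (plus a momentum value) is a quick way to feel the optimizer differences described above.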

Understand transformers and LLM workflows

With basic deep learning under your belt, you can move into transformers and LLMs without drowning. Focus on the core ideas first: self-attention and multi-head attention, positional encodings, and the distinction between encoder, decoder, and encoder-decoder architectures. Then move to practice using the Hugging Face Transformers ecosystem: load a pre-trained text model, fine-tune it for sentiment classification, and compare that to using the same model via prompting only. Learn the difference between full fine-tuning, prompting, and parameter-efficient methods like LoRA, and pay attention to how each affects training time, cost, and performance. This is where you start thinking in terms of trade-offs, not just “bigger model = better.”
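Self-attention is compact enough to write in a few lines of NumPy. This is a minimal single-head sketch of scaled dot-product attention, softmax(QK^T / sqrt(d_k))V. It includes an optional causal mask of the kind decoder models use. The random projection matrices stand in for learned weights, and positional encodings are omitted.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)  # numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def scaled_dot_product_attention(Q, K, V, causal: bool = False):
    # Q: (seq_q, d_k), K: (seq_k, d_k), V: (seq_k, d_v)
    d_k = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)  # similarity of each query to each key
    if causal:
        # Decoder-style mask: position i may only attend to positions <= i.
        mask = np.triu(np.ones_like(scores, dtype=bool), k=1)
        scores = np.where(mask, -np.inf, scores)
    weights = softmax(scores, axis=-1)  # each row sums to 1
    return weights @ V, weights


def self_attention(X, Wq, Wk, Wv, causal: bool = False):
    # "Self" attention: queries, keys, and values are all projections of the same input.
    return scaled_dot_product_attention(X @ Wq, X @ Wk, X @ Wv, causal=causal)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    X = rng.normal(size=(4, 8))  # 4 token embeddings of size 8
    Wq, Wk, Wv = (rng.normal(size=(8, 8)) for _ in range(3))
    out, w = self_attention(X, Wq, Wk, Wv, causal=True)
    print(out.shape)       # (4, 8)
    print(np.round(w, 2))  # lower-triangular attention weights
```

Multi-head attention runs several of these in parallel, each with its own Q/K/V projections. It then concatenates the outputs before a final linear projection.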

Build RAG systems and simple agents

By now, you’re ready for the part most job descriptions call out explicitly: RAG (Retrieval-Augmented Generation) and early agentic patterns. Using orchestration libraries like LangChain or LlamaIndex together with vector databases (Pinecone, Weaviate), you can build a portfolio-grade project such as a RAG-based Q&A assistant for a real domain: ingest PDFs or website content, chunk and embed documents, store them in a vector DB, and wire up an LLM that answers questions using retrieved context. One widely shared success story from 2025 describes a candidate who landed an entry-level AI role by framing their work as “I built a RAG pipeline to reduce customer support response time by 40%,” instead of just “I built a chatbot.” From there, extend the idea into a simple multi-agent system inspired by agentic AI predictions in outlets like Forbes: one agent plans tasks, another calls tools (web search, code execution, database queries), and a final agent summarizes results for the user. These projects show you can do more than talk to a single model - you can design and orchestrate an entire intelligent system.
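The retrieval half of a RAG pipeline can be sketched with the standard library alone: chunk, embed, store, search, and assemble a prompt. Everything here is a stand-in:

- bag-of-words counts replace a real embedding model
- an in-memory list replaces Pinecone or Weaviate
- the final LLM call is left out

The class and function names are hypothetical, not LangChain or LlamaIndex APIs.

```python
import math
import re
from collections import Counter


def chunk_text(text: str, chunk_size: int = 40, overlap: int = 10) -> list[str]:
    """Split text into overlapping word windows so facts are less likely to be cut in half."""
    words = text.split()
    step = chunk_size - overlap
    return [" ".join(words[i:i + chunk_size]) for i in range(0, max(len(words) - overlap, 1), step)]


def embed(text: str) -> Counter:
    # Toy embedding: bag-of-words counts. A real pipeline would call an embedding model here.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class InMemoryVectorStore:
    """Hypothetical stand-in for a vector DB such as Pinecone or Weaviate."""

    def __init__(self) -> None:
        self.items: list[tuple[str, Counter]] = []

    def add(self, chunks: list[str]) -> None:
        self.items.extend((chunk, embed(chunk)) for chunk in chunks)

    def search(self, query: str, k: int = 3) -> list[str]:
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:k]]


def build_prompt(question: str, context: list[str]) -> str:
    joined = "\n---\n".join(context)
    return ("Answer using only the context below. If the answer is not there, say so.\n\n"
            f"Context:\n{joined}\n\nQuestion: {question}\nAnswer:")


if __name__ == "__main__":
    docs = [
        "Refunds are processed within 5 business days after the return is approved.",
        "Passwords can be reset from the login page using the email on your account.",
        "Support is available Monday to Friday, 9am to 5pm Eastern.",
    ]
    store = InMemoryVectorStore()
    for doc in docs:
        store.add(chunk_text(doc, chunk_size=12, overlap=3))
    question = "How long do refunds take?"
    print(build_prompt(question, store.search(question, k=2)))  # send this to your LLM client
```

Swapping embed() for a real embedding model and the store for a vector DB leaves the overall flow unchanged. That separation is what makes RAG systems easy to evaluate piece by piece.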

Learn MLOps, deployment, and production monitoring

Up to this point, you’ve been assembling shelves and making sure they don’t wobble. MLOps is the part where you anchor the whole bookcase to the wall: packaging models, deploying them to the cloud, wiring up monitoring, and owning what happens when real users lean on your system. Career paths like the AWS-focused guide on Whizlabs’ developer-to-AI-engineer roadmap make it clear that cloud, deployment, and operations are now core skills for AI engineers, not just for “DevOps people.”

Know what MLOps actually includes

MLOps is more than “put the model behind an API.” It’s a set of practices and tools that cover the whole lifecycle: data ingestion, training, packaging, deployment, monitoring, and retraining. In practical terms, you’re aiming to get comfortable with:

- Containerization & orchestration: Docker for images and containers; Kubernetes concepts like pods, deployments, and services.

- Model serving: wrapping models in APIs with FastAPI or Flask, then serving them via Docker containers.

- CI/CD: automated tests and deployments with tools like GitHub Actions or GitLab CI whenever you push to main.

- Experiment tracking & versioning: MLflow or Weights & Biases for logging runs, parameters, and metrics.

Analyses of AI career pathways, such as those summarized by AI Staffing Ninja’s AI career pathways guide, consistently highlight that the engineers who advance fastest are the ones who can move smoothly between modeling and infrastructure, treating deployment and monitoring as part of the job, not an afterthought.

Pick one cloud and learn its ML “happy path”

You don’t need to master every cloud. Choose one (often the one your current or target employer uses) and learn the basic workflow for training and deploying models there. At this stage you’re aiming for breadth over depth: know how to get a Dockerized FastAPI model into a managed service, set environment variables securely, and view logs and metrics in the console.

| Cloud | Flagship ML Service | Good First Use Case |
|---|---|---|
| AWS | Amazon SageMaker | Train a scikit-learn model and deploy as a managed endpoint |
| GCP | Vertex AI | Deploy a custom Docker image for prediction to an endpoint |
| Azure | Azure Machine Learning | Register a model and deploy it as a web service |

Warning: don’t try to learn all three clouds at once. That’s like trying to install three different bookcases in the same corner; you’ll just run out of space. Go deep enough on one to ship at least one real project end-to-end.

Build a simple end-to-end MLOps project

To make this concrete, design one “practice production” system where you own the whole pipeline. It doesn’t need to be fancy; it needs to be complete:

- Pick a small ML model (e.g., a scikit-learn classifier or a lightweight PyTorch model).

- Write a training script that saves the model artifact and logs metrics to MLflow or W&B.

- Wrap the trained model in a FastAPI app (pip install fastapi uvicorn) with a /predict endpoint.

- Create a Dockerfile, build an image (docker build -t my-model-api .), and run it locally.

- Push your image to a registry and deploy it to your chosen cloud (ECS, Cloud Run, or similar).

- Set up a basic CI pipeline that runs tests and builds the image on every push to main.

Make monitoring and feedback loops non-negotiable

Finally, treat monitoring as part of “done,” not a bonus. For each deployed model, log and watch at least:

- Operational metrics: latency, error rates, request volumes, and uptime.

- Model health: input feature distributions over time (for data drift), output score distributions, and performance on a rolling validation set.

- Business impact: simple counters that track how often predictions lead to desired actions (clicks, conversions, resolved tickets, etc.).
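For the data-drift item, one common heuristic is the population stability index (PSI). It compares a feature's live distribution to its training-time distribution. This minimal NumPy sketch builds its bins from quantiles of the reference data. The thresholds in the comment are a widely used rule of thumb, not a standard.

```python
import numpy as np


def population_stability_index(reference: np.ndarray, current: np.ndarray,
                               n_bins: int = 10, eps: float = 1e-6) -> float:
    # Interior bin edges at reference quantiles; digitize puts values outside
    # the reference range into the first or last bin.
    inner_edges = np.quantile(reference, np.linspace(0, 1, n_bins + 1)[1:-1])
    ref_frac = np.bincount(np.digitize(reference, inner_edges), minlength=n_bins) / len(reference)
    cur_frac = np.bincount(np.digitize(current, inner_edges), minlength=n_bins) / len(current)
    # Avoid log(0) for empty bins.
    ref_frac = np.clip(ref_frac, eps, None)
    cur_frac = np.clip(cur_frac, eps, None)
    return float(np.sum((cur_frac - ref_frac) * np.log(cur_frac / ref_frac)))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    train_feature = rng.normal(50, 10, size=10_000)  # hypothetical training-time values
    live_ok = rng.normal(50, 10, size=2_000)
    live_drifted = rng.normal(58, 12, size=2_000)
    # Rule of thumb: < 0.1 stable, 0.1-0.25 worth watching, > 0.25 investigate.
    print("stable :", round(population_stability_index(train_feature, live_ok), 3))
    print("drifted:", round(population_stability_index(train_feature, live_drifted), 3))
```

In a deployed service you would log the raw inputs (or a sample of them) and run this per feature on a schedule. You would then alert when the value crosses your chosen threshold.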

This is how you move from “I can run a model in a notebook” to “I can own a system that real people rely on.” In the bookcase metaphor, this is the moment you stop admiring how tall it is and finally bolt it to the studs, confident that it won’t come crashing down the first time someone loads it with real weight.

Build 3-5 impactful, end-to-end portfolio projects

Certificates and course badges are like the glossy diagrams that come with your bookcase; they show what things should look like. Your portfolio is the actual piece of furniture hiring managers can kick, shake, and test. In AI roles, people reviewing candidates care far more about 3-5 real, end-to-end systems you’ve built than a long list of tutorials completed, and project-focused guides like Aman’s AI project suggestions push exactly this: fewer, deeper builds that prove you can go from idea to deployed solution.

What a strong AI portfolio looks like

Think of each project as its own small bookcase: it needs a clear purpose, solid joints, and a way to show it’s actually holding weight. From breakdowns of successful ML portfolios and the kinds of projects highlighted in hiring guides, some consistent patterns emerge for AI engineers:

- End-to-end systems: data → model → API or app → basic monitoring, not just a notebook that stops at `model.fit()`.

- Real-ish problems: use cases that a business might plausibly care about (support, forecasting, personalization, ops), not only toy Kaggle competitions.

- Measurable outcomes: clear metrics (latency, accuracy, error reduction, response time) that show your system improves something.

- Readable code and docs: clean repository structure, clear README, and simple instructions to run the project.

- Deployed artifact: a live demo, public endpoint, or at least a Docker image + deployment walkthrough.

Because AI roles now command serious compensation - Built In’s AI engineer salary reports put the average U.S. base salary well into six figures in many markets - the bar for portfolios has risen. Hiring managers are no longer impressed by “I made a chatbot once”; they expect to see evidence you can design and own systems.

Four high-impact project templates

If you’re aiming for 3-5 projects, you don’t need to reinvent everything from scratch. You can adapt a handful of proven templates that show different parts of the AI stack and align with common job descriptions. Each one should live in its own GitHub repo with a short demo video or screenshots.

| Project | What It Demonstrates | Typical Stack |
|---|---|---|
| Real-Time RAG Assistant for a Niche Domain | LLM integration, retrieval, embeddings, prompt design, latency/cost trade-offs | Python, LangChain/LlamaIndex, vector DB (Pinecone/Weaviate), LLM API, FastAPI |
| End-to-End MLOps Pipeline | Training, experiment tracking, containerization, CI/CD, cloud deployment, monitoring | scikit-learn or PyTorch, MLflow/W&B, Docker, GitHub Actions, AWS/GCP/Azure |
| Multi-Agent Planner | Agent orchestration, tool calling (web, code, DB), planning vs. execution, error handling | Python, LLM agents, tool APIs (search, code exec), lightweight task DB, web UI or CLI |
| Computer Vision on Edge or Mobile | Model compression, performance constraints, hardware-aware design | YOLOv8 or similar, ONNX/TensorRT, webcam or mobile app wrapper, simple dashboard |

- Aim to cover at least one LLM/RAG project, one classic ML + MLOps pipeline, and one “special” angle (agents, CV, or another domain you care about).

- Reuse pieces where it makes sense (e.g., the same monitoring pattern across projects) so you deepen skills instead of rebuilding everything from zero.
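If the RAG assistant is your first LLM project, it helps to see the retrieval step in isolation before adding a framework. The sketch below is a deliberately crude stand-in, not how LangChain, LlamaIndex, or a vector DB work internally: bag-of-words cosine similarity replaces a real embedding model, an in-memory list replaces the vector DB, and the LLM API call is omitted, so `build_prompt` only shows what would be sent.

```python
"""Toy retrieval step for a RAG assistant, standard library only.

Assumptions: word-count vectors stand in for real embeddings, a Python list
stands in for a vector DB, and no LLM is called; build_prompt returns the text
you would send to one.
"""
import math
import re
from collections import Counter


def embed(text):
    """Lowercased word counts: a crude stand-in for an embedding vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, docs, k=2):
    """Return the k documents most similar to the query, best first."""
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def build_prompt(query, docs, k=2):
    """Ground the model in retrieved context and tell it not to guess."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return (
        "Answer using only the context below. If the answer is not there, say so.\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


faq = [
    "Returns are accepted within 30 days of delivery.",
    "Shipping is free on orders over $50.",
    "Gift cards cannot be refunded.",
]
print(build_prompt("How many days do I have for returns?", faq, k=1))
```

Swapping `embed` for a real embedding model and the list for a vector DB changes retrieval quality and scale, but the shape of the pipeline (embed, rank, assemble a grounded prompt) stays the same, which is what interviewers usually probe.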

How to tell the story around each project

A solid build still wobbles in interviews if you can’t explain it. For every project, your README and your verbal story should answer three questions: What problem did you tackle, what did you build, and how did you know it worked?

- Problem: one or two sentences in plain language (“Small e-commerce stores struggle to answer repetitive support questions quickly.”).

- Solution: a concise system summary (“Built a retrieval-augmented assistant that ingests their product FAQ and policy docs, then serves answers via an internal web app.”).

- Stack: list core tools and why you chose them (e.g., “chose a vector DB over fine-tuning to keep updates simple and cheap”).

- Impact & validation: describe how you tested it (manual evaluation, test set, user feedback) and what success looked like.

If you can do this for 3-5 diverse, end-to-end projects, you’re no longer just someone who followed an AI roadmap. You’re someone who’s designed, built, and stress-tested real systems - and your portfolio becomes a sturdy, well-documented manual that hiring managers can trust.

Choose an education path that fits your budget and background

At some point, you have to decide how you’re actually going to learn all this - not in abstract, but in terms of money, time, and the rest of your life. Are you going back to school? Piecing everything together from YouTube and papers? Enrolling in a bootcamp? The wrong choice doesn’t just waste cash; it can leave you with another half-assembled “bookcase” and no one to tell you why it keeps wobbling. The right choice depends on your background, budget, and how much structure you need to keep moving for 12-18 months.

Option 1: University degree

A traditional degree is still the most recognized path, especially if you want to compete for research-heavy or visa-sensitive roles. Many AI engineer job listings prefer at least a Bachelor’s in Computer Science, Engineering, or Math, and senior or research roles often tilt toward a master’s or PhD. Schools that show up in the U.S. News ranking of top AI graduate programs (Carnegie Mellon, MIT, Stanford, and others) offer strong theory, faculty networks, and recruiting pipelines. The trade-offs: 2-4+ years of study, high tuition that can easily hit tens of thousands per year, and curricula that may lag behind fast-moving stacks like production LLMs and modern MLOps. University can be a great fit if you’re earlier in your career, can afford the cost, and want the breadth and prestige that come with a formal degree.

Option 2: Self-study and open resources

Self-study gives you maximum flexibility and minimum direct cost. With high-quality blogs, MOOCs, and open-source repos, it’s entirely possible to build a credible AI skillset on your own. This route often works well for people who already write software professionally and just need to add ML and MLOps to an existing engineering foundation. The downside is that you’re also taking on project selection, pacing, and accountability by yourself. It’s easy to drift - spending months on theory with no projects, or hopping from LLMs to reinforcement learning to computer vision without ever finishing anything end-to-end. If you choose this path, you’ll need to be deliberate about following a roadmap, scheduling regular “checkpoints,” and seeking feedback through code reviews, open-source contributions, or mentorship.

Option 3: Structured bootcamps (including Nucamp)

Bootcamps sit in the middle: more structure and support than pure self-study, far less time and money than a full degree. Many AI-focused bootcamps now cost well over $10,000, which can be a serious barrier for career switchers. Nucamp was built to attack exactly that problem: its AI-relevant programs range from about $2,124 to $3,980, with monthly payment plans and schedules designed for working adults. The 16-week Back End, SQL and DevOps with Python bootcamp focuses on Python, databases, and deployment - perfect for your foundation and early MLOps skills. The 25-week Solo AI Tech Entrepreneur Bootcamp dives into LLM integration, prompt engineering, agents, and shipping AI-powered products, while the 15-week AI Essentials for Work program targets practical AI use in non-technical roles. Across programs, Nucamp reports roughly a 78% employment rate, a 75% graduation rate, and a 4.5/5 Trustpilot rating with about 80% five-star reviews, which is unusually strong for the price point.

| Path | Typical Duration | Typical Cost | Best For |
|---|---|---|---|
| University degree | 2-4+ years | Tens of thousands per year | Those wanting deep theory, prestige, or academic/research roles |
| Self-study | Highly variable (often 12-24+ months) | Lowest direct cost | Disciplined learners with strong existing coding skills |
| Structured bootcamp (e.g., Nucamp) | 15-25 weeks per program | Roughly $2,124-$3,980 | Career-switchers needing structure, projects, and support on a budget |

There’s no single “correct” choice. The key is to be honest about your constraints and working style: if you know you stall without deadlines and feedback, a structured bootcamp might save you months of wandering. If you already have a solid CS degree, self-study plus targeted courses might be enough. And if you’re earlier in your academic journey and can invest the time and money, a university program can give you a broad, durable base. What matters most is that whichever path you choose, it plugs cleanly into the roadmap you’ve laid out - so every course, project, and tuition payment moves you closer to owning real AI systems, not just collecting more instruction sheets.

Build public credibility and run a focused job search

Once your skills and projects are coming together, the next challenge is getting other people to see them - and trust them. At this point, you’re not adding new shelves so much as putting the bookcase in the living room, letting people lean on it, and showing that it doesn’t tip. That means building public credibility (GitHub, writing, networking) and running a job search that’s precise instead of desperate.

Treat GitHub and writing as your public workshop

Your GitHub should read like a clear build log, not a junk drawer. Put all 3-5 flagship projects there, each in its own repo with a solid README that explains the problem, stack, and results. Use branches, pull requests, and issues - even on solo projects - to show you understand real-world workflows. Then layer in short writeups on LinkedIn or a blog where you walk through how you designed your RAG assistant, debugged a flaky deployment, or compared two architectures. Career breakdowns like SalaryCube’s ML engineer salary and skills analysis repeatedly emphasize that engineers who can explain their work clearly tend to move faster into higher-paying, higher-responsibility roles.

Network like an engineer, not a spam bot

Networking isn’t about blasting “open to work” posts; it’s about building a small circle of people who’ve actually seen you build. Start simple: join a couple of AI Discords or Slack communities, attend local meetups or virtual events once a month, and aim for one meaningful conversation per week - commenting thoughtfully on someone’s project, asking a specific question, or sharing a small win. Guides to emerging AI roles, such as the “13 AI jobs that will explode” breakdown on The Cloud Girl’s career guide, underline how diverse the ecosystem has become; thoughtful networking is often how you hear about niche roles (LLM app engineer, AI ops, internal tools) before they hit the big job boards.

Run a targeted, evidence-backed job search

Instead of applying to every posting with “AI” in the title, pick a lane (LLM app engineer, core ML, MLOps) and build your materials around it. For each role:

- Mirror the language of the job description with your actual projects (“Built a LangChain-based RAG system with FastAPI and Docker, deployed to cloud, monitored latency and error rates”).

- Lead with impact in your resume bullets (“Improved demand forecasting error by 18% in backtests,” “Cut manual support response time by 40% in pilot”).

- Prepare for three interview tracks: practical coding (Python + data structures), ML/LLM fundamentals, and system design for ML or RAG services.
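An impact bullet like “improved forecasting error by X% in backtests” should come from a calculation you can reproduce on request. Here is a minimal sketch, assuming mean absolute percentage error (MAPE) as the metric; the numbers are made-up illustrations, not real results, and in a real project `actual` would come from held-out historical periods.

```python
"""Sketch: deriving a resume-ready "error reduced by X%" figure from a backtest.

Assumptions: MAPE is the error metric, and all numbers below are illustrative.
"""


def mape(actual, predicted):
    """Mean absolute percentage error; assumes no actual value is zero."""
    return sum(abs(a - p) / abs(a) for a, p in zip(actual, predicted)) / len(actual)


def relative_reduction(baseline_error, model_error):
    """Fraction by which model_error is lower than baseline_error."""
    return (baseline_error - model_error) / baseline_error


actual = [100, 120, 80, 110]     # observed demand in held-out periods (illustrative)
baseline = [90, 100, 100, 100]   # a simple baseline forecast (illustrative)
model = [95, 115, 85, 105]       # your model's forecast (illustrative)

reduction = relative_reduction(mape(actual, baseline), mape(actual, model))
print(f"Forecast error reduced by {reduction:.0%} in backtest")  # prints 67%
```

Stating the baseline and the metric alongside the percentage ("vs. a naive forecast, measured by MAPE") makes the claim much easier for an interviewer to trust.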

Remember that you’re competing in a market where mid-level ML/AI engineers often earn well into six figures, and even early-career roles can be highly selective. A focused search - backed by visible code, clear explanations, and a small network that knows what you can do - beats sending out hundreds of indistinguishable applications. At that point, you’re not just someone who followed an AI roadmap; you look like an engineer who already works the way production teams expect.

Verify your skills and troubleshoot common wobbles

Reaching the end of a roadmap can feel a bit like tightening the last screw and stepping back: is this thing actually solid, or is it going to tip the moment someone loads it with real books? At this point, more courses won’t help unless you can verify that you’re able to design, ship, and own AI systems end-to-end. You need a clear way to check your skills against what teams are really using, and a plan for what to do if something still wobbles.

Run a simple self-check on your skills

Instead of asking “Do I know enough?”, test yourself against a few concrete capabilities that line up with how modern AI work actually looks. Analyses like Stanford HAI’s expert predictions on AI in 2026 emphasize system-level thinking, cross-functional collaboration, and continuous deployment as the new normal, not rare edge cases. Use that as your bar:

- End-to-end build: Given a real-ish problem, can you choose a sensible approach (classic ML vs. LLM/RAG), collect or clean data, train and evaluate a model, wrap it in an API or app, deploy it to the cloud, and add basic logging and monitoring?

- Portfolio depth: Do you have 3-5 polished projects that each tell a clear story (problem, solution, metrics, stack), not ten half-finished notebooks?

- Communication: Can you explain a project to a non-technical stakeholder without jargon, then dive deep into embeddings, retrieval strategies, or regularization when talking to another engineer?

- Market traction: Are you getting some positive signals - recruiter messages for AI/ML roles, passing initial screens, or good feedback in mock interviews that focuses on refinement, not “you’re missing the basics”?

- Adaptability: When a new model, API, or requirement appears, can you integrate it in days rather than months, using the same fundamentals and patterns you already know?

Spot the most common “wobbles”

If you’re not checking most of those boxes yet, it’s almost always because of a few recurring gaps - not because you “don’t have the brain for AI.” The fix is to diagnose the wobble and add targeted reinforcement, not to start the entire roadmap over from scratch.

- Wobble 1 - Notebook-only projects: You can train models but haven’t deployed anything. Remedy: pick one existing project and upgrade it into a service - FastAPI + Docker + a single cloud deployment - before you start anything new.

- Wobble 2 - Shallow LLM demos: You’ve built chatbots that only work on toy prompts. Remedy: turn one into a RAG system with your own docs, add evaluation (manual grading or small test sets), and track latency and cost per request.

- Wobble 3 - Weak coding or CS fundamentals: You freeze on data structures or basic algorithms in interviews. Remedy: schedule regular Python + DSA practice (even 3-5 problems a week) and refactor one project to use cleaner abstractions and tests.

- Wobble 4 - No business framing: You can describe what you built, but not why it matters. Remedy: rewrite every project README to lead with the problem, who cares about it, and what changed when your system worked.
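For Wobble 2, the evaluation does not need to be elaborate to be useful. The sketch below runs a small test set through your system and reports pass rate, average latency, and average cost per request. Everything specific here is a labeled assumption: `answer_fn` is a hypothetical entry point that returns the answer plus prompt and completion token counts, grading is a simple "expected phrase appears in the answer" check, and the per-1K-token prices are placeholders rather than any provider's real rates.

```python
"""Sketch of a tiny evaluation harness for an LLM/RAG system.

Assumptions (hypothetical): answer_fn(question) returns
(answer_text, prompt_tokens, completion_tokens); grading is a keyword check;
prices are placeholders, not real provider rates.
"""
import time

PRICE_PER_1K_PROMPT = 0.0005      # placeholder USD per 1K prompt tokens
PRICE_PER_1K_COMPLETION = 0.0015  # placeholder USD per 1K completion tokens


def evaluate(answer_fn, test_set):
    """Run each (question, expected_phrase) case; report pass rate, latency, cost."""
    passed, latencies, costs = 0, [], []
    for question, expected in test_set:
        start = time.perf_counter()
        answer, prompt_toks, completion_toks = answer_fn(question)
        latencies.append(time.perf_counter() - start)
        costs.append(prompt_toks / 1000 * PRICE_PER_1K_PROMPT
                     + completion_toks / 1000 * PRICE_PER_1K_COMPLETION)
        if expected.lower() in answer.lower():
            passed += 1
    n = len(test_set)
    return {
        "accuracy": passed / n,
        "avg_latency_s": sum(latencies) / n,
        "avg_cost_usd": sum(costs) / n,
    }


def fake_answer_fn(question):
    """Hypothetical stand-in for a real RAG pipeline, for demonstration only."""
    if "return" in question.lower():
        return "You can return items within 30 days.", 200, 20
    return "I'm not sure.", 150, 5


report = evaluate(fake_answer_fn, [
    ("What is the return window?", "30 days"),
    ("Do you ship abroad?", "international"),
])
print(report)
```

Rerunning the same small test set after every prompt or retrieval change turns "it seems better" into numbers you can put in a README.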

Know when to double down vs. when to ship yourself

There’s a moment where “one more course” stops helping and “more applications and conversations” becomes the real lever. Tech compensation reports such as Birchwood University’s overview of high-paying tech jobs in the U.S. show AI and ML roles clustered near the top of the market, which means competition is real - but it also means you don’t need to be perfect to be hireable, just solid where it counts. As a rule, if you can build and deploy an end-to-end system, defend your design choices, and show a handful of projects with clear impact, it’s time to run a serious job search while continuing to shore up weak spots in parallel.

From here on out, your growth looks less like following a linear checklist and more like maintaining and expanding a living system: add a new project when you see a gap, refactor old code as you learn better patterns, and keep tightening the bolts on deployment, monitoring, and communication. If your “bookcase” can handle real-world weight - users, traffic, changing requirements - you’re not just someone who studied AI. You’re behaving like an AI engineer, and that’s what hiring managers are trying to detect.

Common Questions

Can I become an AI engineer in 12-18 months while working full-time?

Yes - many career-switchers studying part-time finish a practical track in about 12-18 months; if you already write software you can often move faster, while heavy caregiving or other major commitments may stretch the timeline. The roadmap in this article breaks that into phases (0-3 months for Python and tooling, 3-6 months for core ML, and 6-12+ months for specialization and projects).

What minimum skills and hardware should I have before I start?

Bring basic algebra, intermediate English reading, and comfort learning independently. On the software side, install Python 3.10+, set up Git and a GitHub account, and create a free cloud account. Hardware-wise, a laptop with ~16 GB RAM is recommended (8 GB can work but will limit experiments).

Which portfolio projects actually convince hiring managers?

Aim for 3-5 end-to-end systems that each show data → model → API/app → basic monitoring: at least one LLM/RAG project, one ML+MLOps pipeline, and one specialization (agents, CV, or edge). Include measurable outcomes (for example, “reduced support response time by 40%”) and a deployed demo or clear deployment instructions.

Should I do a degree, a bootcamp, or self-study to break into AI in the US?

It depends on your constraints: a university degree (2-4+ years, often tens of thousands in tuition) suits research or early-career students, self-study is lowest cost but takes discipline (often 12-24+ months), and structured bootcamps (15-25 weeks, roughly $2,124-$3,980 in the ranges cited here) give fast, project-focused support for career-switchers. Choose the path that matches your budget, need for accountability, and hiring goals.

I can train models in notebooks but I’m not getting interviews - what do I fix first?

Stop building new notebooks and upgrade one existing project end-to-end: wrap the model in a FastAPI endpoint, Dockerize it, deploy it to a cloud free tier, and add simple monitoring; this single change often turns a notebook into a portfolio asset. If other gaps exist, prioritize converting an LLM demo into a RAG system with evaluation, practicing coding/DSA (3-5 problems/week), and rewriting READMEs to lead with business impact.

Irene Holden

Operations Manager

Former Microsoft Education and Learning Futures Group team member, Irene now oversees instructors at Nucamp while writing about everything tech - from careers to coding bootcamps.