Social Engineering in 2026: The Real-World Scams and How to Defend Against Them

By Irene Holden

Last Updated: January 9th 2026

Key Takeaways

Social engineering in 2026 is driven by AI-enhanced, hyper-personalized scams - from deepfake executive calls to smishing, quishing, and sophisticated BEC - and remains the primary entry point for attackers: roughly 60 to 68 percent of incidents involve the human element, and U.S. losses reached about $16.6 billion in 2024. Defend by pausing and verifying out-of-band, enforcing phishing-resistant MFA (like FIDO2), adding payment guardrails and dual approvals, and running realistic training, which can drop click rates from about 33.1 percent to roughly 4.1 percent. The stakes are rising because AI-generated phishing has pushed click rates as high as 54 percent in some tests.

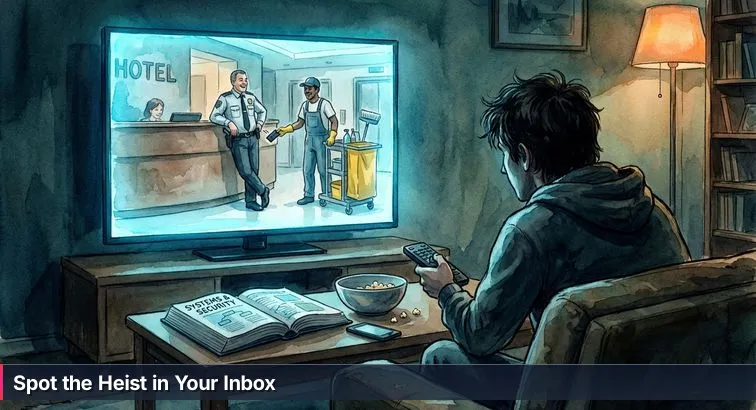

The second time you watch the heist movie, the lobby scene doesn’t feel like background noise anymore. You hit pause. The “bored” guard isn’t just bored; he’s looking the other way on purpose. The janitor’s joke as he wheels the mop bucket past reception is really a distraction. A hand brushing a dangling keycard for half a second is the moment the whole break-in becomes possible. On your first viewing, it was just set dressing; on the rewatch, you see the script underneath the scene.

Now imagine hitting pause on your own day. At 7:42 a.m., you skim an email “from IT” while half awake. At 12:18 p.m., you tap a text about an “unpaid toll” in line for coffee. At 4:03 p.m., your boss “messages” you on Teams asking for a quick favor. Those feel like background moments, but for an attacker, they’re the lobby shot: the tiny window where they try to get you to open the door. In the U.S. alone, victims lose around $16.6 billion to social engineering scams in a single year, with losses growing about 33% year over year, according to a compiled cost of cybercrime analysis from Old Republic Title. And when generative AI writes the script, phishing emails become far more convincing - tests show AI-generated phishing can get people to click up to 54% of the time, compared to about 12% for old-school attempts, as documented in DeepStrike’s AI cyber attack statistics.

Baseline security tests still find that roughly 33.1% of employees fall for social engineering during initial assessments, even though almost everyone has “heard of” phishing. So the real divide isn’t between people who know scams exist and people who don’t. It’s between first-time viewers - who only notice the twist after it hits - and second-time viewers, who can feel the con building from the first casual “just checking in” email. This guide is about making that shift: learning to see the role you’re being cast in, the pressure beats (“urgent,” “don’t tell anyone”), and the moment the attacker needs you to break procedure so their heist can move from planning to execution.

Over the next sections, you’ll move from background extra to script doctor. We’ll walk through how modern social engineering actually works - email, text, calls, QR codes, deepfakes, even in-person encounters - and we’ll only dissect these tactics for one reason: so you can spot and stop them ethically and legally, long before any money moves or data leaves. By the end, you’ll be able to:

- Recognize the core beats of modern social engineering and where you fit in the script

- Spot real-world scams like swipe-up mobile exploits, deepfake executive calls, quishing, and synthetic identities

- Use practical checklists and verification steps at home and at work, without needing to be “technical”

- Understand the technical and human defenses organizations rely on - and how you can strengthen them

- See how this skill set maps directly into cybersecurity careers, from SOC analyst to ethical hacker and security awareness specialist

Think of it as turning on the director’s commentary for your inbox, your notifications, and your daily conversations. Instead of racing through scenes and hoping the filters catch the villains, you’ll learn to pause, rewind, and examine what’s really happening in the background - so when the next “urgent favor” or “unpaid toll” pops up, you’re not just reacting. You’re reading the script, staying within ethical and legal lines, and quietly rewriting the ending.

In This Guide

- Introduction - learning to spot the scam

- Social engineering today: what it means in 2026

- The numbers behind the scams and why they matter

- The modern playbook: scripts, roles, and psychological beats

- Phishing, spear phishing, and whaling explained

- Business Email Compromise and pretexting defenses

- Vishing and AI voice cloning: stop, verify, and call back

- Smishing, quishing, and mobile swipe-up exploits

- Deepfake video, synthetic identities, and executive impersonation

- Physical social engineering: tailgating, baiting, and hybrids

- The AI twist: why old red flags don’t work anymore

- Defense in depth: technical controls that break the script

- Human firewalls: training, culture, and everyday habits

- Red flags checklist and verification playbooks

- Turning awareness into a cybersecurity career

- Director’s cut: changing how you see every message

- Frequently Asked Questions


Social engineering today: what it means in 2026

Think of social engineering not as a random scam, but as the opening act of almost every modern heist. The attacker doesn’t start by “hacking a firewall”; they start by getting a person to hold the door, share a code, or click a link. In security terms, social engineering is any attack that targets people instead of just systems - using stories, urgency, and trust to trick someone into giving up access, money, or information. It’s the moment the keycard gets quietly lifted in the lobby, long before anyone touches the vault.

What social engineering really looks like now

Across real breach investigations, somewhere between 60% and 68% of incidents involve the so-called human element - social engineering, misdirected messages, or plain error - according to dmarcian’s analysis of recent Verizon Data Breach Investigations Report (DBIR) findings. That means the first successful move often isn’t malware or an exotic exploit; it’s a believable email to a payroll clerk, a fake login prompt for a cloud admin, or a phone call to the help desk. When you picture “the attack,” imagine an attacker in the writer’s room planning which employee to cast, what pretext to use, and which process they want that person to bend.

Why people are the primary target

Security teams have spent years hardening networks, encrypting data, and rolling out single sign-on, so attackers follow the path of least resistance: everyday workflows. If you send invoices, approve expenses, reset passwords, or manage HR data, you’re part of the plot whether you like it or not. In surveys summarized by Infosecurity Magazine, about 63% of cybersecurity professionals now rank AI-driven social engineering as their top concern, ahead of even ransomware, because it exploits human judgment at exactly these touchpoints. The platform doesn’t matter - email, Teams, Slack, SMS, LinkedIn - all of them are just different stages where the same human story plays out.

AI and the “death of bad grammar”

Attackers also have new co-writers. Analyses from multiple threat-intelligence firms show that over 80% of phishing campaigns now use AI for at least one step: drafting text, personalizing details, or generating look-alike websites. What used to be a clumsy mass email is now a fluent, on-brand message that references your actual role or recent activity. Experts at Bitdefender even describe this shift as the “death of bad grammar”, noting in their recent cybersecurity predictions that generative models have made phishing, vishing, and Business Email Compromise both linguistically flawless and highly contextual.

For you, that changes the game. You can’t rely on crooked logos and awkward phrasing as your main tripwires anymore. Instead of asking, “Does this message look sketchy?”, you start asking, “If this were a heist scene, what role am I being pushed into, and what shortcut am I being nudged to take?” Once you see social engineering that way - scripted, targeted, and increasingly AI-polished - you’re much closer to being the person who spots the con in the lobby instead of the one wondering how the vault somehow ended up open.

The numbers behind the scams and why they matter

Before we go any deeper into the movie, it helps to know how often the “lobby scene” actually decides the plot. Social engineering isn’t a side character; by the time most incidents are investigated, the trail usually leads back to a person who clicked, approved, or shared something they shouldn’t have. The numbers are uncomfortable, but once you see them, it becomes a lot easier to understand why attackers put so much energy into writing better scripts for people, not just code for machines.

Across recent studies from Verizon, DeepStrike, KnowBe4, and others, a consistent pattern emerges. Around 60%-68% of breaches involve the human element - everything from social engineering to misdelivery and error. Baseline testing shows that about 33.1% of employees fail initial phishing simulations, but with focused security awareness training that can drop to roughly 4.1% within a year, as detailed in the KnowBe4 Phishing By Industry Benchmark report. Financially, U.S. losses from social engineering grew about 33% year over year, reaching roughly $16.6 billion in 2024, and Business Email Compromise incidents alone see median losses around $50,000 per case, according to breakdowns in recent cyberattack statistics from ZeroThreat. Layer on AI and it gets sharper still: AI-generated phishing emails in some tests achieve click-through rates up to 54%, compared to about 12% for manually crafted phishing, and analyses from DeepStrike and others estimate that over 80% of phishing campaigns now use AI in some form.
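
To make those percentages easier to compare, here is a minimal Python sketch of the arithmetic. It uses only figures quoted above; the function names `relative_reduction` and `lift` are illustrative and do not come from any of the cited reports.

```python
def relative_reduction(before_pct: float, after_pct: float) -> float:
    """Fraction by which a rate fell, relative to where it started."""
    return (before_pct - after_pct) / before_pct


def lift(new_pct: float, baseline_pct: float) -> float:
    """How many times larger one rate is than another."""
    return new_pct / baseline_pct


# Figures quoted in the paragraph above.
training_drop = relative_reduction(33.1, 4.1)  # simulation failure rate, before vs. after training
ai_lift = lift(54.0, 12.0)                     # AI-generated vs. manually crafted phishing clicks

print(f"Training cut the failure rate by about {training_drop:.0%}")
print(f"AI-written phishing drew about {ai_lift:.1f}x the clicks")
```

Read this way, moving from 33.1% to 4.1% is a relative reduction of roughly 88%, while 54% against 12% is a 4.5x difference in click rate.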

There’s another subplot unfolding in the background: deepfake and fraud claims. Research cited by Aon and summarized in recent industry reports shows a 53% increase in social-engineering incidents year-over-year and a staggering 233% growth in fraud claims, with deepfake-enabled scams playing a major role. At the same time, some incident-response data indicates that as many as 79% of observed intrusions are now “malware-free” - attackers don’t always drop obvious malicious files; they log in with stolen credentials and move like any other user. Put together, the statistics say the quiet part out loud: the first successful move is usually psychological, not technical, and it often looks like a normal work task done in a slightly abnormal way.

Why does this matter if you’re “just” an end user, a beginner, or someone switching into cybersecurity? Because the people authoring invoices, approving payments, resetting passwords, or granting access are the ones standing on the critical marks. If you regularly authorize payments, touch customer data, manage shared inboxes, or administer cloud tools, you are exactly the kind of character attackers try to cast. Treating social engineering defense as a core professional skill - not a side dish of “be careful out there” - is how you move from being a background extra to someone who can see, in real time, when a request doesn’t fit the script.

A quick personal risk scan makes this concrete. Ask yourself: Do I ever change vendor bank details or approve wires? Do I have admin or HR access to systems others don’t? Is my role and workplace visible on LinkedIn or other social media? If you answered yes to any of those, the statistics aren’t just abstract; they describe the environment you’re already working in. The good news buried in the same data is that targeted training and better verification habits measurably bend the curve. Once you understand how often the heist starts with “Can you do me a quick favor?” and how dramatically failure rates drop with practice, it stops feeling like a horror story and starts feeling like a skill you can build.

The modern playbook: scripts, roles, and psychological beats

Once you start seeing attacks as scripted heists instead of random chaos, patterns jump out. Social engineers don’t just wake up, fire off a sloppy email, and hope you wire them money. They run a playbook: research their mark, choose a disguise, open with something disarming, then build pressure until you do the one thing that unlocks the rest of the plot. You’re not just “getting scammed” in that moment; you’re being cast in a role the attacker has already written for you.

The attacker’s process: from writer’s room to first contact

Most modern social engineering campaigns follow the same spine, whether the channel is email, SMS, LinkedIn, or a phone call. StrongestLayer’s team showed that, with the help of generative AI, an attacker can now assemble in minutes a highly targeted phishing campaign that used to take human operators many hours, turning a bespoke con into something they can run at industrial scale. A typical sequence looks like this:

- Reconnaissance - Scraping LinkedIn, corporate bios, press releases, and data-broker records to learn who does what, who approves money, and what tools you use.

- Casting - Deciding who to impersonate (CEO, vendor, IT, HR) and who to target (AP clerk, recruiter, engineer, executive assistant) based on leverage.

- The Approach - A low-risk opener: a friendly check-in, a calendar invite, a routine security notice, or a simple “Is this still your number?” text.

- The Turn - Introducing urgency, secrecy, or emotional pressure: a last-minute payment window, a security scare, a deal that will “fall through” if you wait.

- The Ask - The real objective: move money, change bank details, share credentials or MFA codes, upload a file, or install a “required” tool.

- The Cover-Up - Encouraging you to keep it quiet, deleting evidence, or using one-time “tokenized” pages that vanish once the job is done.

The psychological beats underneath the script

Under all the technical gloss, the engine that makes this work is psychology. A recent preprint on social engineering research notes that attackers systematically lean on cognitive shortcuts like authority, urgency, and reciprocity to bypass careful thinking, describing social engineering as “one of the most effective ways of circumventing technical measures” in real-world attacks. In that study of social engineering trends and psychological triggers, published on Preprints.org, the most common levers line up almost perfectly with what you see in real incidents.

- Authority: “I’m your CEO / bank / vendor; this is already decided.”

- Urgency: “Right now, before close of business, or something breaks.”

- Scarcity: “Last chance, one-time link, limited window.”

- Reciprocity: “We did X for you; now you just need to do Y for us.”

- Fear or greed: “Account locked, legal action coming” versus “Bonus, prize, refund waiting.”

- Isolation: “Don’t loop anyone else in yet; this needs to be discreet.”

“Social engineering attacks remain one of the most effective ways of circumventing technical measures, precisely because they exploit predictable psychological triggers rather than technical vulnerabilities.” - Authors, Social Engineering Attacks: Trends, Psychological Triggers, and Defense Strategies, Preprints.org

Breaking the story by naming the scene

The practical move for you is to start labeling what scene you’re in. When a strange email or text shows up, ask yourself: Is this recon (they’re probing), the turn (pressure is rising), or the ask (they want money, data, or access)? Simply giving it a name creates a tiny pause between stimulus and response. That pause is where you can check context, verify through another channel, or push the interaction back into a safe, documented process. Industry figures compiled in Sprinto’s review of social engineering statistics show just how often those small pauses change outcomes: organizations that train people to recognize these beats see dramatically fewer successful attacks, even when the emails and calls look perfect. You don’t have to memorize jargon; you just have to get good at spotting the moment the script needs you to break your usual rules - and choose not to.
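
One way to practice naming the scene is to tag a message with the levers it pulls. The sketch below is a deliberately naive keyword matcher. The phrase list `LEVERS` and the function `name_the_levers` are hypothetical, invented here for illustration. Treat it as a training aid for building the pause, not as a detection tool: a fluent AI-written message could avoid every phrase in it.

```python
import re

# Hypothetical phrase lists keyed by the levers described in this section.
# A real awareness program would tune these to its own incident history.
LEVERS = {
    "authority": ["ceo", "cfo", "your bank", "it department"],
    "urgency": ["urgent", "right now", "immediately", "close of business"],
    "scarcity": ["last chance", "one-time link", "limited window"],
    "reciprocity": ["in return", "as a thank you"],
    "fear_or_greed": ["account locked", "legal action", "bonus", "prize", "refund"],
    "isolation": ["keep this between us", "discreet", "confidential"],
}


def name_the_levers(message: str) -> list[str]:
    """Return the levers whose phrases appear as whole words in the message."""
    text = message.lower()
    found = []
    for lever, phrases in LEVERS.items():
        # Word boundaries avoid matching a phrase inside a longer word.
        if any(re.search(r"\b" + re.escape(p) + r"\b", text) for p in phrases):
            found.append(lever)
    return found


example = "This is the CEO. I need the wire sent right now, and keep this between us."
print(name_the_levers(example))
```

For the example message, the matcher reports authority, urgency, and isolation together. That combination is the cue to slow down and verify through another channel.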

Phishing, spear phishing, and whaling explained

On the surface, phishing looks simple: a fake email shows up, you either click or you don’t. But in the heist script, this is where most plots actually begin. Phishing is the broad, mass-emailed version of the con. Spear phishing narrows the cast list to a specific person or role. Whaling goes after “big fish” like executives and high-value decision-makers. Underneath all three is the same goal: get you to do something that hands the attacker a key - usually by imitating a service (Microsoft, Google, your bank) or a person (your boss, a vendor, IT).

How it shows up in your inbox

Imagine you get a “security alert” from what looks like Microsoft. The language is polished, it references your time zone, and there’s a big button to “Secure Your Account.” Behind the scenes, campaigns like this are increasingly AI-assisted: recent analyses show that over 65% of phishing campaigns now use AI-generated content, and about 51.7% of malicious emails impersonate well-known brands such as Microsoft, Google, and Amazon, according to a detailed breakdown in Keepnet Labs’ phishing statistics and trends report. The fake login page you land on might be nearly pixel-perfect, but the domain will be just off - something like micros0ft-login-secure.com instead of the real one. In spear-phishing and whaling attacks, the copy goes even further, referencing real projects, coworkers, or travel plans; some studies now estimate that roughly 1 in 12 targeted spear-phishing emails successfully compromise credentials.

Why these lures work so well

Part of the power here is that the message blends into the normal flow of your workday. You’re used to password reset emails, document share notifications, and invoice reminders. The attacker’s script leans on that muscle memory. They add a touch of urgency (“We’ve detected suspicious activity, act within 24 hours”), then offer the easiest possible path: a single button in the email. For defenders, the challenge is that traditional “tells” like bad spelling or awkward phrasing are fading; threat coverage on sites like The Hacker News’ social engineering channel highlights how generative AI has raised the quality bar for phishing, making content fluent, on-brand, and often localized to the target’s language and region.

A simple verification routine you can run every time

The safest move isn’t to become a human spam filter; it’s to change how you react to anything that asks for credentials, payments, or sensitive data. When an email asks you to log in, pay, or approve something, hit pause. Hover over the sender and links: does the domain exactly match what you expect, down to the last letter? If it’s from a company or colleague, can you confirm via another channel - your corporate chat, a known phone number, or by navigating to the official website yourself instead of using the email button? And remember the ethical line: as you learn how these attacks work, use that knowledge only for defense - spotting, reporting, and helping improve simulations and training inside organizations, never for crafting real-world scams. In the heist movie, this is the moment you refuse to follow the script and instead call the bank, IT, or your manager to check the story before the vault door quietly swings open.
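
The “exactly match, down to the last letter” check can be sketched in a few lines of Python. This is a minimal illustration, not a complete link scanner: the allowlist `TRUSTED_HOSTS` and the function name `is_trusted_link` are assumptions made for the example, and a real organization would maintain its own list of approved sign-in hosts.

```python
from urllib.parse import urlsplit

# Hypothetical allowlist of sign-in hosts this user actually expects to see.
TRUSTED_HOSTS = {"login.microsoftonline.com", "accounts.google.com"}


def is_trusted_link(url: str) -> bool:
    """True only for HTTPS links whose hostname exactly matches the allowlist."""
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()
    # Exact comparison: no substring or "starts with" matching, so look-alike
    # and padded hostnames fail even when they contain a trusted name.
    return parts.scheme == "https" and host in TRUSTED_HOSTS


for link in [
    "https://login.microsoftonline.com/common",
    "https://micros0ft-login-secure.com/reset",
    "https://login.microsoftonline.com.evil.example/reset",
    "https://accounts.google.com@evil.example/",
]:
    print(link, "->", is_trusted_link(link))
```

Only the first link passes. The other three fail because the parsed hostname is a look-alike, a trusted name used as a subdomain of another site, or a trusted name placed before an `@` so that the real host is whatever follows it.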

Business Email Compromise and pretexting defenses

In most corporate heist stories, the big score isn’t a mysterious “hack” - it’s an email that looks boringly normal. That’s Business Email Compromise (BEC): attackers slipping into everyday conversations about invoices, payroll, or vendor updates and steering the money somewhere it was never meant to go. The technique that powers it is pretexting - building a believable story (“we changed banks,” “the CEO is traveling,” “this is a confidential deal”) that makes an unusual request feel routine.

How BEC and pretexting actually play out

Instead of blasting out random phishing, BEC actors study who talks to whom about money. They may first compromise a mailbox or spoof a domain, then quietly watch real threads about invoices and contracts. When the timing is right, they reply in-line: “Here are our new banking details, please use this account from now on.” Recent analyses show pretexting incidents have nearly doubled and now account for over 50% of all social engineering cases, and BEC itself makes up about 21% of phishing-related losses, with an average loss of $150,000 per incident in 2024, according to industry figures summarized in the Identity Theft Resource Center’s Business Impact Report. Because the message appears in an existing thread, from a familiar name, many targets never even think of it as “a security decision” - it feels like just another Tuesday task.

Red flags in the middle of a “normal” email

The trick is that the danger rarely looks dramatic. It’s subtle shifts: a vendor asking you to update bank details via email instead of your usual portal; a supplier suddenly insisting a payment must go out today or a shipment will be delayed; someone in leadership pushing you to “keep this between us” while bypassing standard approvals. Attack write-ups from firms like Memcyco describe how modern social engineers blend these process changes with technical tricks, such as short-lived “tokenized” pages that disappear when investigators try to revisit them, to erase evidence as soon as the transfer clears. That is why so much rides on the decisions individual employees make in the moment, not just on the tools running in the background.

“The most damaging business email compromises rarely hinge on a single technical failure; they succeed because a plausible pretext convinces someone to override the very processes meant to protect the organization.” - 2025 Business Impact Report, Identity Theft Resource Center

Out-of-band verification: your simplest plot breaker

The strongest defense against BEC isn’t a complicated gadget; it’s a boring, repeatable habit: never trust a change to payment instructions based solely on the channel where the request arrived. Any time you see new bank details, urgent transfer requests, or unusual instructions, treat it as a high-risk event. Build a ritual like this into your workflow:

- Pause immediately. Do not act from the email, even if it’s in a real thread.

- Verify out-of-band. Call a known contact using a phone number from your internal records or contract, not from the message itself.

- Require dual control. For significant transfers or banking changes, ensure at least two people review and approve.

- Document the check. Note who you spoke with, when, and how they confirmed.
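
The four steps above can be sketched as a simple guardrail in code. This is a minimal illustration under stated assumptions: the class `BankChangeRequest`, its fields, and the two-approver threshold are hypothetical, and a real finance system would enforce the same rules inside its own approval workflow.

```python
from dataclasses import dataclass, field


@dataclass
class BankChangeRequest:
    """Hypothetical record of a request to change a vendor's bank details."""
    vendor: str
    requested_by: str                  # employee who entered the change
    verification_note: str = ""        # who was called, when, on which number
    approvers: set[str] = field(default_factory=set)

    def record_callback(self, note: str) -> None:
        """Document an out-of-band check made on a number from internal records."""
        if not note.strip():
            raise ValueError("a callback must be documented")
        self.verification_note = note

    def approve(self, person: str) -> None:
        """Add an approver; the requester cannot approve their own change."""
        if person == self.requested_by:
            raise ValueError("requester cannot approve their own change")
        self.approvers.add(person)

    def can_apply(self) -> bool:
        """Apply only after a documented callback and two distinct approvers."""
        return bool(self.verification_note) and len(self.approvers) >= 2


request = BankChangeRequest(vendor="Acme Supplies", requested_by="alice")
request.approve("bob")
request.approve("carol")
print(request.can_apply())  # still blocked: nobody has documented a callback
request.record_callback("Called vendor contact on the number in the contract")
print(request.can_apply())
```

The design choice worth noticing is that approvals alone are not enough. Until someone documents a callback on a known number, the change stays blocked, which mirrors the rule that the arrival channel is never trusted on its own.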

For beginners and career-switchers moving into cybersecurity, this is a perfect example of how understanding human workflows beats memorizing buzzwords. You don’t need admin access to protect your company from BEC; you just need to recognize when the script is trying to rush you past your own rules, and then slow the scene down long enough to confirm the story through a channel the attacker can’t control.

Vishing and AI voice cloning: stop, verify, and call back

The first time your “CEO” calls you in a panic, it doesn’t feel like a cyberattack. It feels like a stressful workday: bad connection, rushed voice, “we’re about to miss this deal if you don’t push this wire now.” That’s vishing - voice phishing - where the entire con runs through a phone line instead of an email. The twist now is that attackers don’t even need to sound kind of like your boss; with seconds of stolen audio, they can sound exactly like them, down to the cadence and favorite phrases.

When the phone becomes the main attack surface

Modern vishing campaigns rarely show up alone. You might get a short email first (“I’ll call you about an urgent matter”), then a phone call from a spoofed number that matches your executive’s contact, then a follow-up message confirming the “transaction.” Threat-intel roundups show why this channel is exploding: some datasets report vishing volumes surging by roughly 440% year-over-year, often tied to requests for bank detail changes or “emergency” transfers, as highlighted in Spacelift’s review of social engineering statistics. Because we’re conditioned to trust a familiar voice more than text, many people will override written policies when a “real person” is asking - especially if that person appears to outrank them.

AI voice cloning raises the stakes

Attackers no longer need to be gifted impersonators. With publicly available audio - from earnings calls, YouTube talks, podcasts, or even leaked voicemail - AI can clone a voice with eerie accuracy. In one widely cited case study, a finance employee was tricked into authorizing roughly $25 million after joining what they believed was a legitimate video call with their CFO, whose likeness and voice were both deepfaked. Articles like Microtime’s analysis of AI-powered social engineering threats warn that employees have “almost no prior experience with AI-generated voices,” making them particularly vulnerable the first time they encounter one.

“In an era of synthetic voices and deepfakes, ‘I heard it from my boss’ is no longer sufficient proof. Verification has to move beyond sound alone.” - AI-Powered Social Engineering: Protecting Yourself in 2026, Microtime Computers

The hang up, verify, and call back ritual

The defensive move here is simple, legal, and powerful: refuse to complete sensitive actions while you are still on the incoming call. If someone - no matter how familiar they sound - asks you to move money, change bank details, share MFA codes, or bypass normal approvals, treat that as your cue to pause the movie. Politely say you must follow policy, hang up, then call back using a number from your corporate directory or official records, not from the caller ID or email signature. If the request is real, the person will understand. If it’s not, you’ve just broken the script the attacker needs to keep you on. As you build a cybersecurity career, this “stop, verify, and call back” habit is exactly the kind of human control that pairs with technical tools; understanding it deeply means you’re not just learning acronyms, you’re learning how to change the outcome of the scene.
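
The call-back rule has one detail that is easy to get wrong: the number you dial must come from your own records, never from the incoming call. A minimal sketch, assuming a hypothetical in-memory `CORPORATE_DIRECTORY` and function name `callback_number` (the addresses and 555 numbers are placeholders):

```python
from typing import Optional

# Hypothetical directory; in practice this would be the official corporate
# directory or contract records, maintained independently of any message.
CORPORATE_DIRECTORY = {
    "cfo@example.com": "+1-555-0100",
    "ceo@example.com": "+1-555-0101",
}


def callback_number(claimed_identity: str, caller_id: str) -> Optional[str]:
    """Return the number to call back, taken only from the directory.

    caller_id is accepted but deliberately ignored: caller ID can be spoofed,
    so it must never influence which number gets dialed.
    """
    return CORPORATE_DIRECTORY.get(claimed_identity.strip().lower())


print(callback_number("CFO@example.com", caller_id="+1-555-0199"))
print(callback_number("unknown@example.com", caller_id="+1-555-0199"))
```

When the lookup returns nothing, the safe outcome is the same as when the request looks suspicious: no sensitive action happens until identity is confirmed some other official way.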

Smishing, quishing, and mobile swipe-up exploits

Your inbox isn’t the only stage anymore. The heist has moved into your pocket: a buzz from an unknown number about an “unpaid toll,” a text saying your package is stuck in customs, a QR code taped over a parking meter or printed on a café table. Each one is a tiny lobby scene on your phone, inviting you to tap, scan, or swipe before you’ve even had time to read the fine print.

Smishing: when text messages run the con

Smishing (SMS phishing) leans on how casually we treat texts. One of the fastest-growing lures is the tiny “overdue fee”: fake road-toll messages demanding just a few dollars to avoid penalties. Campaigns like this have exploded, with some analyses showing fake toll smishing up by nearly 2,900% in a single year. And it rarely stops at one channel. Roughly 40% of phishing campaigns now spill beyond email into SMS, chat apps, or collaboration tools, building trust across multiple touchpoints, according to a recent overview of mobile-focused cyber threats. The script is simple: a small, believable debt, a short link, and a fake payment page that quietly harvests your card details while promising a digital receipt.

Quishing and swipe-up tricks: when the URL disappears

Quishing pushes the same idea into QR codes. Instead of a blue button in an email, the attacker gives you a black-and-white square in an attachment, a flyer, or a sticker near a checkout terminal. Email filters often can’t see the URL hiding inside the image, so the malicious link sails through. On mobile, things get even trickier. Researchers at Memcyco have documented a “swipe-up” pattern, described in detail in Memcyco’s analysis of modern social engineering tactics. A legitimate-looking short link (like a branded Bitly) leads to a page that prompts you to swipe up; the browser then hides the address bar and loads a perfect clone of your bank or email login, with no visible URL to inspect.

“Traditional scanners are failing because attackers now use mobile UI exploits and tokenized pages that disappear when security researchers try to analyze them.” - Tzoor Cohen, CTI Lead, Memcyco

The defensive habits here are straightforward but powerful. Treat any unexpected text about money, penalties, or accounts as a potential cold open, not a to-do item. Don’t tap short links from strangers; instead, type the official site or use the official app you already have installed. In the physical world, avoid scanning QR codes for payments unless they’re part of a trusted system you recognize, and if a page hides your browser’s address bar or makes it hard to see where you are, back out immediately. As you learn these patterns, remember the ethical line: the goal is to recognize and report these setups, not to recreate them. Your job, whether as a savvy user or a future security pro, is to be the person who notices when the URL slips into the shadows and hits pause before the story moves to the vault.
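
The “don’t tap short links” habit can also be expressed as a small triage helper. The sketch below is illustrative only: the `SHORTENER_HOSTS` set is a short, incomplete sample, the function name `links_needing_caution` is hypothetical, and the pattern only finds links that include `http://` or `https://`, so bare links like `bit.ly/abc` would need extra handling.

```python
import re
from urllib.parse import urlsplit

# Small illustrative sample of link-shortener hosts; not a complete list.
SHORTENER_HOSTS = {"bit.ly", "tinyurl.com", "t.co"}

# Only matches links written with an explicit scheme.
URL_PATTERN = re.compile(r"https?://\S+")


def links_needing_caution(message: str) -> list[str]:
    """Return links in a message whose real destination is hidden by a shortener."""
    flagged = []
    for url in URL_PATTERN.findall(message):
        host = (urlsplit(url).hostname or "").lower()
        if host in SHORTENER_HOSTS:
            flagged.append(url)
    return flagged


toll_text = "Unpaid toll of $4.15. Pay now at https://bit.ly/abc123 to avoid penalties"
print(links_needing_caution(toll_text))
```

A flagged link is not proof of a scam, since legitimate senders use shorteners too. It simply marks a message where the destination cannot be inspected, which is the cue to open the official app or type the official address instead.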

Deepfake video, synthetic identities, and executive impersonation

The newest twist in the social engineering heist doesn’t arrive as an email at all; it appears in a calendar invite. You join a “quick video check-in” with the CISO and CFO about a security drill or urgent transaction. Their faces look right, their voices sound right, they reference real projects and deadlines you recognize. Then they ask you to log into a special portal with your corporate credentials or approve an unusual transfer on the spot. What you don’t see is that the attackers are running a real-time deepfake over the call, using AI to puppeteer executives you trust.

Deepfake meetings as upgraded executive impersonation

Classic “CEO fraud” emails are already damaging, but a video call feels like proof. That’s exactly why attackers are moving there. Case studies compiled in analyses like Dark Reading’s coverage of real-life social engineering attacks show how voice and video deepfakes are being used to authorize large transfers, request confidential reports, or push employees into “emergency drills” that are really credential-harvesting exercises. The script is familiar - authority plus urgency plus secrecy - but the medium makes it much harder for untrained employees to say no. In these scenes, the red flags shift from spelling and formatting to context and process:

- Senior leaders joining from unusual accounts or platforms you’ve never used with them before

- Requests to bypass normal approvals or use a new portal “just for this drill”

- Insistence on acting during the call with no time to verify via another channel

Synthetic identities: when the “new hire” is a ghost

On a different part of the stage, attackers are using AI not to mimic known people, but to invent entirely new ones. Synthetic identities combine AI-generated headshots, fabricated government IDs, and stitched-together credit histories into personas realistic enough to pass many automated checks. Banks have already seen these ghosts opening accounts and applying for loans tailored to slip past their specific KYC (Know Your Customer) rules, a trend explored in depth by The Financial Brand’s analysis of AI-driven identity fraud. The same techniques can seep into hiring: a remote “candidate” with spotless documents and a convincing LinkedIn profile is onboarded into a sensitive role, then quietly abuses their legitimate access from day one. Warning signs here include:

- Resumes and IDs that look perfect but tie back to very recent or shallow online histories

- References that can only be reached on numbers or emails provided in the CV, with no independent trail

- Reluctance to complete any live, verified video or in-person identity checks, especially for high-risk roles

Verification controls that pull the mask off

Defenses against these higher-budget cons still come down to slowing the scene and checking what’s just off-camera. For sensitive video calls, confirm that the meeting was scheduled through official calendars, require corporate accounts and waiting rooms, and don’t execute unusual payments or credential steps without a separate, out-of-band confirmation through known channels. For hiring and onboarding, pair automated checks with at least one strong in-person or securely verified live video step, and validate references using contact details you look up yourself, not just what appears on a resume. As you study these techniques, keep the ethical line bright: understanding deepfakes and synthetic IDs is about helping organizations design safer processes and training, not about creating new disguises. Your role in the story is to be the one who notices when a familiar face or a perfect candidate doesn’t quite fit the script - and then pauses long enough to verify before granting the keys.

Physical social engineering: tailgating, baiting, and hybrids

The quietest part of the heist is often the most important: someone holds a door for the wrong person, props open a stairwell, or ignores a stranger plugging a laptop into an empty desk. That’s physical social engineering. Instead of tricking your browser, the attacker is tricking your instincts about politeness, helpfulness, or routine. Tailgating, baiting, and their hybrids are the lobby scenes of the real world, where a smile, a box of pastries, or a “forgot my badge” line becomes the keycard that bypasses every firewall you’ve paid for.

Tailgating: the “forgot my badge” classic

Tailgating works because it feels rude to challenge someone who looks like they belong. An attacker might stand just outside a side entrance, arms full of boxes, and ask you to “grab the door real quick.” Once inside, they can wander through floors, glance at whiteboards, snap photos of network diagrams, or slide into an empty conference room and connect a device to the wired network. In many breaches, that first unsupervised foothold is all they need to start scanning internal systems or planting hardware that will stay long after they leave. The defensive habit here is simple but uncomfortable: treat every badge-protected door like a single-occupancy checkpoint. If someone doesn’t have visible credentials, walk them to reception instead of waving them through, no matter how much of a rush they’re in.

Baiting: curiosity and convenience as lockpicks

Baiting flips the script: instead of the attacker asking you for access, they leave something tempting where you’ll find it. The bait might be a USB drive labeled “Employee Bonuses Q4.xlsx” on the break room table, an SD card near the printer, a laptop power adapter “forgotten” in a conference room, or even a printed QR code offering “free coffee for employees” at a nearby café. The hope is that someone will plug the unknown device into a work machine “just to see what’s on it” or scan the code to claim the perk, unknowingly running malware or landing on a credential-harvesting site. Security teams increasingly point to these small moments of curiosity as the missing link between policy and practice; discussions of the modern threat landscape, like Security Boulevard’s look at how SOC roles are evolving, stress that defending organizations now means watching the human layer as closely as the network.

Hybrids: when physical and digital cons team up

The most effective scripts blend physical and digital moves. An attacker might tailgate in as a “third-party technician,” drop a few infected USB drives, snap photos of your badge format and floor map, then follow up days later with highly tailored phishing emails that reference real room numbers, internal jargon, or even the exact model of your printers. To you, those details make the message feel legitimate; to them, it’s just the payoff from a short site visit. Defenses have to match that blend: clear visitor policies and escort rules, locked-down ports on workstations, staff trained to report strange devices immediately, and a culture where it’s normal - not paranoid - to ask, “Who are you here with?” None of that requires deep technical skills. It does require seeing the hallways, meeting rooms, and shared desks the way an attacker would, and choosing, in those quick social moments, not to play the part they’ve written for you.

The AI twist: why old red flags don’t work anymore

Remember when “spot the typo” felt like enough? If the logo was fuzzy and the greeting was “Dear Sir/Madam,” you could safely drag it to trash. That was the first viewing. AI has rewritten the script. Today’s scams look like clean corporate emails, smooth Slack messages, and perfectly local bank alerts, all tuned to your language, your role, even your recent activity. The old red flags haven’t vanished completely, but they’ve moved from center stage to a quick cameo in the background.

How AI rewrote the attacker toolkit

Generative models gave attackers exactly what they needed: a co-writer that never gets tired. Tools can now scrape public data about your company, analyze how you and your peers communicate, and then generate messages that feel hand-crafted but were actually produced in bulk. One industry analysis notes that polymorphic phishing - attacks where each email is slightly different to evade filters - now shows up in about 76.4% of observed campaigns, making traditional signature-based defenses far less effective, according to a report highlighted in StrongestLayer’s deep dive into AI-generated phishing. At the same time, some global snapshots estimate that up to 98% of successful cyberattacks involve a social engineering component somewhere in the chain, as attackers lean on AI-enhanced messaging to open the first door rather than brute-forcing their way in.

Security writers sometimes call this the era of “hyper-personalized social engineering” because the model isn’t just fixing grammar; it’s weaving in context. A job title scraped from LinkedIn, a conference talk you gave, a vendor your team just onboarded - those details make the email, text, or DM feel like it could only have been written for you. As one forecast on hyper-personalized social engineering puts it, attackers are now industrializing what used to be bespoke cons, letting them “scale trust abuse across thousands of inboxes at once” instead of targeting a handful of people manually.

“AI is fueling a golden age of scammers, giving threat actors near-limitless creative power to outthink traditional security measures.” - CISO quoted in AI-Generated Phishing: The Top Enterprise Threat, StrongestLayer

Why context, not cosmetics, has to be your new red flag

All of this is why staring at fonts and grammar isn’t enough anymore. The message will often look right. The sender name will often look right. The tone will often sound exactly like your boss or your bank. What still stands out is whether the scene makes sense: Is this the kind of request this person usually sends you? Is this the normal channel for this kind of approval or payment? Does the timing line up with your processes, or is it weirdly last-minute and secretive? Instead of asking “Does this look fake?”, you start asking “Is this the right request, through the right channel, following our usual process?” If any one of those is off, that’s your cue to pause, step out of the script, and verify before acting.

For beginners and career-switchers, this is good news disguised as bad. You don’t need to memorize every new AI trick; you need to get very comfortable with context and process. That’s a learnable skill. It means slowing the scene for a beat - checking URLs, calling back on known numbers, confirming changes in person or through official tools - especially any time money, access, or sensitive data is involved. Ethically and legally, your role is to use this understanding to defend: to spot, report, and help your organization adapt training and controls, not to experiment with these techniques in the wild. Once you shift from cosmetic checks to contextual ones, you’re no longer just hoping the old red flags will show up - you’re actively looking for the moment the story stops matching how your world really works.

Defense in depth: technical controls that break the script

Technical controls are the set designers and prop masters of your security story: you might not notice them when everything goes right, but they shape what attackers can realistically pull off. Defense in depth means layering these controls so even if one lock fails or one person follows the wrong script, the plot still stalls before the big loss. You’re not trying to build a perfect wall; you’re trying to make every step of the attacker’s plan harder, noisier, and more likely to be caught.

Stronger authentication: from easy-to-phish to phishing-resistant

Multi-factor authentication (MFA) is one of the most important brakes you can add, but not all MFA works the same way. Simple push notifications or SMS codes can still be abused through tactics like “MFA fatigue,” where attackers bombard a victim with prompts until they approve one by mistake; some industry reports tie this kind of prompt bombing to roughly 14% of social engineering incidents. Phishing-resistant options like FIDO2/WebAuthn security keys and platform authenticators bind the login to the real domain, so even if a user clicks a fake link, the key simply won’t complete the login on the attacker’s site. As you move into cybersecurity roles, understanding these differences is critical: you’re not just “turning on MFA,” you’re choosing which version of MFA to cast in the role of gatekeeper.

| MFA Method | Phishing Resistance | Typical Use | Common Risk |
|---|---|---|---|
| SMS codes | Low | Consumer accounts, legacy apps | SIM swapping, code relays via fake sites |
| Authenticator app codes | Medium | Cloud services, VPNs | Code theft on phishing pages |
| Push notifications | Medium | SSO portals, mobile apps | MFA fatigue (push bombing) |
| FIDO2/WebAuthn security keys | High | Admins, executives, high-risk users | Loss if not backed up or enrolled properly |
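To make "binds the login to the real domain" concrete, here is a minimal Python sketch of the context checks a server might run on a WebAuthn login. It is an illustration under stated assumptions, not a full implementation: the origin and RP ID are hypothetical, the helper names are invented for this example, and the cryptographic signature check that a real deployment needs is left out on purpose.

```python
import hashlib
import json

# Hypothetical values for illustration; a real service would use its own.
EXPECTED_ORIGIN = "https://login.example.com"
EXPECTED_RP_ID = "login.example.com"


def make_client_data(origin: str, challenge: str) -> bytes:
    """Simulate the clientDataJSON a browser produces for a login attempt."""
    payload = {"type": "webauthn.get", "challenge": challenge, "origin": origin}
    return json.dumps(payload).encode()


def check_assertion_context(
    client_data_json: bytes, rp_id_hash: bytes, expected_challenge: str
) -> bool:
    """Return True only if the login happened on the real site.

    Signature verification is intentionally omitted from this sketch.
    """
    client_data = json.loads(client_data_json)
    expected_hash = hashlib.sha256(EXPECTED_RP_ID.encode()).digest()
    return (
        client_data.get("type") == "webauthn.get"
        and client_data.get("challenge") == expected_challenge
        and client_data.get("origin") == EXPECTED_ORIGIN
        and rp_id_hash == expected_hash
    )


challenge = "abc123"  # in practice, a fresh random value per login
rp_hash = hashlib.sha256(EXPECTED_RP_ID.encode()).digest()

real_site = make_client_data("https://login.example.com", challenge)
fake_site = make_client_data("https://login.examp1e.com", challenge)

print(check_assertion_context(real_site, rp_hash, challenge))
print(check_assertion_context(fake_site, rp_hash, challenge))
```

The browser, not the user, reports the origin, so a lookalike domain fails the check even when the page looks perfect to a human. That is the property SMS and app codes lack.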

Email and domain protections: making spoofing harder

Because so many social engineering attacks still arrive by email, controls like SPF, DKIM, and DMARC are crucial for keeping obvious impersonations off the stage. These standards let receiving servers check whether a message claiming to be from yourcompany.com is actually authorized to use that domain. Summaries of recent Verizon Data Breach Investigations Reports note that misused or spoofed domains are a recurring theme in credential phishing and BEC, and highlight DMARC as one of the core defenses organizations should implement to cut down on direct domain spoofing, as seen in the Verizon DBIR SMB snapshot. Layered on top of that, modern secure email gateways increasingly use AI to spot suspicious language, unusual sending patterns, and embedded QR codes, catching more lures before they ever reach a person’s screen.
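As a rough illustration of what a DMARC policy looks like in practice, the sketch below parses the tag-value text of a DMARC DNS record and summarizes how strictly it asks receivers to treat failing mail. The sample records, the reporting address, and the function names are assumptions made for this example; fetching the record from DNS is left out.

```python
def parse_dmarc(record: str) -> dict[str, str]:
    """Split a DMARC TXT record such as 'v=DMARC1; p=reject' into tags."""
    tags: dict[str, str] = {}
    for part in record.split(";"):
        part = part.strip()
        if not part or "=" not in part:
            continue
        key, value = part.split("=", 1)
        tags[key.strip().lower()] = value.strip()
    return tags


def spoofing_posture(record: str) -> str:
    """Summarize the requested handling for mail that fails DMARC checks."""
    tags = parse_dmarc(record)
    if tags.get("v") != "DMARC1":
        return "invalid"
    policy = tags.get("p", "").lower()
    if policy == "reject":
        return "enforcing"
    if policy == "quarantine":
        return "quarantine"
    if policy == "none":
        return "monitor-only"
    return "invalid"


# Hypothetical records for illustration.
strict = "v=DMARC1; p=reject; rua=mailto:dmarc-reports@example.com"
relaxed = "v=DMARC1; p=none"

print(spoofing_posture(strict))
print(spoofing_posture(relaxed))
```

A domain that stays at `p=none` is only collecting reports; direct spoofing of that domain is not being blocked until the policy moves to `quarantine` or `reject`.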

Out-of-band checks and payment guardrails

Some of the most effective “technical” defenses are actually process rules backed by systems. For high-value actions like changing vendor bank details, approving wires, or elevating user privileges, organizations are codifying requirements for dual approval, enforced waiting periods, and mandatory out-of-band verification. In practice, that can look like workflow tools that simply won’t let a single person both request and approve a transfer, or finance platforms that flag and hold first-time payments to new accounts until someone confirms them via a verified phone number. Reports on building cyber resilience, such as Security Boulevard’s discussion of how SOCs are evolving, stress that these kinds of guardrails are increasingly being automated so that “doing the safe thing” is the default path, not an extra step people have to remember under pressure.
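The two guardrails described above, separation of requester and approver plus a hold on first-time payees, can be sketched in a few lines of Python. Everything here is hypothetical: the field names, the account identifiers, and the return strings are invented for illustration and do not reflect any particular finance platform.

```python
from dataclasses import dataclass


@dataclass
class PaymentRequest:
    requester: str
    approver: str
    payee_account: str
    amount: float
    verified_by_callback: bool = False  # confirmed via a known phone number


def evaluate(request: PaymentRequest, known_payees: set[str]) -> str:
    """Apply dual approval first, then the first-time-payee hold."""
    if request.requester == request.approver:
        return "reject: requester cannot approve their own transfer"
    if request.payee_account not in known_payees and not request.verified_by_callback:
        return "hold: first-time payee needs out-of-band verification"
    return "release"


known = {"ACME-001"}  # hypothetical list of previously paid accounts

self_approved = PaymentRequest("dana", "dana", "ACME-001", 5_000.0)
new_payee = PaymentRequest("dana", "lee", "NEW-777", 48_000.0)
confirmed = PaymentRequest("dana", "lee", "NEW-777", 48_000.0, verified_by_callback=True)

for req in (self_approved, new_payee, confirmed):
    print(evaluate(req, known))
```

The point of encoding the rule in the workflow is that a pressured employee cannot skip it: the system holds the payment regardless of how convincing the request sounded.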

Visibility and behavior analytics: catching odd moves mid-heist

Finally, tools like User and Entity Behavior Analytics (UEBA) and modern Security Operations Centers (SOCs) watch for scenes that don’t fit the usual pattern: logins from impossible locations, a salesperson suddenly downloading gigabytes of data, or a finance user initiating wires in the middle of the night. As data volumes grow, AI is taking on more of this first-line triage. Analysts quoted in industry pieces predict that AI-driven systems will autonomously resolve over 90% of routine alerts, leaving human defenders to focus on complex, ambiguous cases where judgment and context really matter.

“By automating the bulk of low-level alerts, AI will transform Tier 1 SOC analysts into supervisors and investigators, fundamentally redefining what a modern security operations center looks like.” - 5 Predictions That Will Redefine Your SOC, Security Boulevard

For you as a future cyber pro, that means learning to read not just individual alerts, but the story they tell together: where the attacker entered, which controls slowed them down, and where a better lock or a better habit could have broken the script entirely.
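One of the patterns mentioned in this section, logins from impossible locations, is simple enough to sketch. The Python below flags two logins whose implied travel speed exceeds a threshold. The 900 km/h speed limit and the 100 km noise floor are assumed values chosen for illustration, and the `Login` structure is hypothetical; real UEBA products weigh many more signals.

```python
from dataclasses import dataclass
from datetime import datetime
from math import asin, cos, radians, sin, sqrt


@dataclass
class Login:
    user: str
    when: datetime
    lat: float
    lon: float


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points in kilometers."""
    earth_radius_km = 6371.0
    dlat = radians(lat2 - lat1)
    dlon = radians(lon2 - lon1)
    a = sin(dlat / 2) ** 2 + cos(radians(lat1)) * cos(radians(lat2)) * sin(dlon / 2) ** 2
    return 2 * earth_radius_km * asin(sqrt(a))


def is_impossible_travel(
    prev: Login, curr: Login, max_kmh: float = 900.0, min_km: float = 100.0
) -> bool:
    """Flag login pairs that would require faster-than-airliner travel."""
    distance = haversine_km(prev.lat, prev.lon, curr.lat, curr.lon)
    if distance < min_km:  # ignore small jumps from geolocation noise
        return False
    hours = abs((curr.when - prev.when).total_seconds()) / 3600
    if hours == 0:
        return True
    return distance / hours > max_kmh


new_york = Login("sam", datetime(2026, 1, 9, 9, 0), 40.71, -74.01)
london_1h = Login("sam", datetime(2026, 1, 9, 10, 0), 51.51, -0.13)
london_10h = Login("sam", datetime(2026, 1, 9, 19, 0), 51.51, -0.13)

print(is_impossible_travel(new_york, london_1h))
print(is_impossible_travel(new_york, london_10h))
```

A flag like this is a prompt for investigation, not proof of compromise: VPNs and mobile networks can make a legitimate user appear to jump between locations.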

Human firewalls: training, culture, and everyday habits

All the tech in the world can’t save a company if the people in it play straight into the attacker’s script. That’s why professionals talk about “human firewalls”: not superhumans who never make mistakes, but regular employees who’ve been trained, supported, and equipped to spot the con early and hit pause. For beginners and career-switchers, this is good news - defending against social engineering is at least as much about habits and culture as it is about hardcore technical skills.

Modern awareness training that actually changes behavior

Real-world numbers show that training works when it reflects the attacks people actually face. Baseline phishing tests often find around 33.1% of employees will click or interact with a malicious message the first time they’re tested. With consistent, realistic security awareness training and simulations, that “phish-prone” rate can drop to roughly 4.1% within a year. Other studies of security awareness programs report about a 40% risk reduction after 90 days and up to an 86% reduction in successful phishing incidents after one year when training is ongoing and tailored. Newer tools even use generative AI to create adaptive simulations that mirror current attacker tactics; according to research discussed by Breacher’s work on AI-powered social engineering simulations, such platforms can push phishing detection rates to over 90% by repeatedly giving employees safe chances to spot and report increasingly sophisticated lures.
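To put the click-rate figures above in concrete terms, this short Python sketch works out the relative reduction and the expected number of clickers. The 1,000-person headcount is a hypothetical chosen only to make the numbers easy to read.

```python
def relative_reduction(before_pct: float, after_pct: float) -> float:
    """Fraction by which the rate fell, relative to the starting rate."""
    return (before_pct - after_pct) / before_pct


def expected_clickers(headcount: int, rate_pct: float) -> int:
    """Rough number of people expected to click at a given rate."""
    return round(headcount * rate_pct / 100)


baseline_pct = 33.1  # from the figures cited above
trained_pct = 4.1
headcount = 1_000    # hypothetical organization size

reduction = relative_reduction(baseline_pct, trained_pct)
print(f"Relative reduction: {reduction:.1%}")
print(f"Clickers before training: {expected_clickers(headcount, baseline_pct)}")
print(f"Clickers after training: {expected_clickers(headcount, trained_pct)}")
```

In other words, a drop from 33.1% to 4.1% is roughly an 88% relative reduction: in a 1,000-person organization, about 331 likely clickers become about 41.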

Building a no-stigma verification culture

Training alone isn’t enough if the culture tells people that slowing down is “being difficult.” The organizations that handle social engineering best treat verification as a professional reflex, not a lack of trust. Leaders openly say things like “If something feels off, call me back on the main line,” and they back that up by praising employees who question odd requests - even when those requests turn out to be legitimate. Analyses of resilient companies, such as those explored in Eye Security’s discussion of the evolving cyber threat landscape, consistently highlight this cultural piece: teams that normalize double-checking, documenting approvals, and escalating suspicious messages suffer far fewer successful social-engineering incidents than those that treat “just getting it done” as the highest virtue.

Everyday habits that make you harder to cast

At an individual level, a handful of boring-sounding habits make you a much tougher target to write into a script. Using a password manager so every site has a unique, strong password means one stolen credential doesn’t unlock ten other systems. Turning on MFA everywhere - preferably with app-based codes or hardware keys - forces attackers to clear an extra hurdle even if they guess or steal a password. Minimizing the personal and professional details you share publicly makes it harder for someone to tailor a pretext just for you. Keeping your phone, browser, and laptop updated closes off entire categories of quick drive-by exploits. None of these steps are glamorous, but together they turn you from an easy mark into a character who’s surprisingly difficult to manipulate.

The throughline is that none of this requires you to be a genius or memorize endless jargon. It means treating odd emails, texts, calls, or office encounters the way a seasoned moviegoer treats a suspicious side character: noting the inconsistency, asking what role you’re being pushed into, and taking a moment to verify before you act. As you build these habits - and help others around you build them too - you’re not just protecting yourself and your employer. You’re practicing the core of many security careers: understanding how people actually work, where they’re likely to be pressured, and how to redesign the scene so the heist never quite comes together.

Red flags checklist and verification playbooks

Checklists and playbooks are your pause button. When a sketchy email lands, a text pings, or someone rushes you at the door, you don’t have time to reinvent your response from scratch. You need a short list of red flags and a rehearsed set of moves you can run almost on autopilot. That’s what turns you from a first-time viewer caught off guard by the twist into someone who’s seen this scene before and knows exactly when to slow things down.

The stakes for getting these moments right are high. Industry analyses show that social engineering-related breaches can cost organizations an average of $4.77 million when you add together incident response, downtime, and long-tail damage to trust, as highlighted in broad cybersecurity statistics compilations like VikingCloud’s roundup of cyber risk data. Under pressure, people fall back on habits; checklists and simple playbooks give you better habits to fall back on. They don’t require deep technical knowledge, just the willingness to pause, follow a short script of your own, and verify before you act.

Universal red flags checklist

When any message or request comes in - email, SMS, DM, phone, or in person - treat it as suspicious if you notice two or more of these patterns:

- Unsolicited contact - You didn’t start the conversation, and the sender isn’t someone you usually deal with.

- Urgency - “Right now,” “within the next hour,” “before close of business” is baked into the ask.

- Secrecy or isolation - You’re told not to loop in your manager, finance, or IT “to avoid delays” or “keep this discreet.”

- Channel mismatch - Money, HR, or security requests show up via SMS, social DMs, or personal email instead of official systems.

- Process bypass - You’re pushed to skip normal approvals, ticketing, or contract steps “just this once.”

- New payment details - Bank accounts, payout methods, or billing info are being changed over email or text.

- Credentials or MFA codes requested - Anyone asking for passwords, one-time codes, or reset links is a giant warning sign.

- Unusual payment methods - Gift cards, crypto, or personal accounts for what should be routine business transactions.

- Inability to verify the URL - Hidden address bar, shortened link you can’t expand, or a domain that doesn’t quite match.

- Emotional manipulation - Threats of account closure or legal trouble, or, on the flip side, surprise prizes and refunds.
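The "two or more" rule above can be expressed as a tiny Python helper, which is handy if you want to build the checklist into a reporting form or training exercise. The flag identifiers are shorthand invented for this sketch, one per checklist item.

```python
# One shorthand label per item in the checklist above (names are illustrative).
RED_FLAGS = {
    "unsolicited_contact",
    "urgency",
    "secrecy_or_isolation",
    "channel_mismatch",
    "process_bypass",
    "new_payment_details",
    "credentials_or_mfa_requested",
    "unusual_payment_method",
    "unverifiable_url",
    "emotional_manipulation",
}


def is_suspicious(observed: set[str], threshold: int = 2) -> bool:
    """Return True when at least `threshold` known red flags are present."""
    unknown = observed - RED_FLAGS
    if unknown:
        raise ValueError(f"Unrecognized flags: {sorted(unknown)}")
    return len(observed) >= threshold


toll_text = {"unsolicited_contact", "urgency", "unverifiable_url"}
routine_invoice = {"urgency"}

print(is_suspicious(toll_text))
print(is_suspicious(routine_invoice))
```

The threshold is a floor, not a ceiling: a single flag such as a request for MFA codes is reason enough to stop and verify on its own.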

“Prepared users who know the warning signs and have a simple response plan can stop the majority of phishing and social engineering attacks before any technical control even comes into play.” - Cybersecurity Ventures, Cybersecurity Almanac

Email verification playbook

When an email feels off - or touches money, access, or sensitive data - run this short sequence:

- Pause. Don’t click links, open attachments, or reply yet.

- Inspect the sender. Check the full address, not just the display name; does the domain exactly match what you expect?

- Check links. Hover to preview URLs; look for subtle misspellings or extra words around the real brand name.

- Consider context. Ask yourself if this is the kind of request this person normally sends you, in this way, at this time.

- Verify out-of-band. Contact the supposed sender via a known phone number, chat, or portal - not by replying to the suspicious email.

- Report. Use your organization’s “Report Phishing” button or forward the message to your security team’s contact address once you’ve stepped away from it.
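The "inspect the sender" and "check links" steps above come down to comparing exact domains, which the following Python sketch illustrates with the standard library. The domains and addresses are made up for the example, and the helper names are hypothetical.

```python
from email.utils import parseaddr
from urllib.parse import urlsplit


def sender_domain(from_header: str) -> str:
    """Extract the domain from a From header, ignoring the display name."""
    _, address = parseaddr(from_header)
    return address.rpartition("@")[2].lower()


def link_host(url: str) -> str:
    """Return the hostname a link actually points to."""
    return (urlsplit(url).hostname or "").lower()


def host_matches(host: str, expected_domain: str) -> bool:
    """True if host is the expected domain or one of its subdomains."""
    return host == expected_domain or host.endswith("." + expected_domain)


expected = "example.com"  # the domain you would normally expect

spoofed_from = '"IT Support" <helpdesk@examp1e.com>'
tricky_link = "https://example.com.login-check.net/reset"
genuine_link = "https://portal.example.com/reset"

print(host_matches(sender_domain(spoofed_from), expected))
print(host_matches(link_host(tricky_link), expected))
print(host_matches(link_host(genuine_link), expected))
```

Note how the tricky link puts the real brand name at the front of the hostname; what matters is the end of the hostname, which is why a plain "does it contain the brand?" check is not enough.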

Phone/video and in-person playbooks

For calls and meetings, your goal is to break the attacker’s control of the channel. If someone on the phone or in a video call asks you to move money, share codes, or ignore policy, do this:

- Refuse to act live. Say you must follow policy and will handle it after you verify details.

- End the call. Don’t stay in the pressured conversation.

- Call back on a trusted number. Use your corporate directory or official website, not the caller ID or a number they give you.

- Confirm the request. Make sure the person and the action both check out before you proceed.

In person, apply the same mindset: don’t let anyone tailgate through secure doors without a badge, walk unknown visitors to reception, and never plug in found USB drives or devices. Many broad security studies, including multi-industry surveys like Cybersecurity Ventures’ Cybersecurity Almanac, point out that human decisions are at the center of most incidents. Treating these playbooks as part of your normal routine - rather than something “extra” you do when you remember - turns that reality into an advantage. Used ethically and consistently, they let you rewrite the scene so the scam never gets past the first line.

Turning awareness into a cybersecurity career

At some point, “I think this email is weird” stops being just self-preservation and starts looking a lot like a marketable skill. Once you can see the beats of a social engineering attack - the recon, the turn, the ask - you’re already thinking the way many security teams need their analysts, trainers, and responders to think. For career-switchers, that’s the bridge: you’re not starting from zero, you’re taking instincts you already have and turning them into a job where reading the script under the surface is part of the role description.

From noticing red flags to professional skill

Security work is no longer just “hackers in hoodies” and deep command-line magic. A huge slice of day-to-day cybersecurity involves exactly what you’ve been practicing in this guide: spotting risky patterns in email, chat, calls, and workflows, then helping people and systems respond better next time. Global industry snapshots, like those compiled in Cybersecurity Ventures’ Cybersecurity Almanac, point to roughly 3.5 million unfilled cybersecurity jobs worldwide and emphasize that demand is especially strong for roles focused on protecting data, monitoring alerts, and training users. Those roles don’t require you to be a mathematical prodigy; they do require you to be curious about how attacks unfold and comfortable explaining risk in plain language.

“Every IT position is also a cybersecurity position now. Every employee needs to be aware of their role in protecting the organization.” - Steve Morgan, Founder, Cybersecurity Ventures

Where social engineering defense shows up in entry-level roles

If you zoom in on the entry points into the field, you’ll see social engineering defense everywhere. A Security Operations Center (SOC) analyst spends their day triaging alerts that often start with a phishing click or a suspicious login. A security awareness specialist designs the training and phishing simulations that help employees recognize those tells earlier. A junior penetration tester (ethical hacker) might run authorized phishing tests to help an organization measure and improve its resilience. Even risk and compliance analysts map out how money and data move through the business, then recommend controls to keep a single rushed approval from turning into a six-figure BEC loss. In other words, understanding the “human side” of attacks isn’t a side quest; it’s central to how these jobs deliver value.

| Role | Day-to-day Focus | Social Engineering Angle | Common Entry Path |
|---|---|---|---|
| SOC Analyst (Tier 1) | Monitor alerts, investigate suspicious logins and emails | Recognize patterns that start with phishing or account misuse | Bootcamps, Security+ prep, IT help desk experience |
| Security Awareness Specialist | Design training, phishing simulations, and policy rollouts | Turn real attacker scripts into safe teaching scenarios | Communication background plus cybersecurity fundamentals |
| Junior Ethical Hacker | Run authorized tests of systems and processes | Legally probe how people respond to simulated lures | Ethical hacking courses, lab work, CEH or similar prep |
| Risk / Compliance Analyst | Map processes, assess controls, support audits | Identify where human error or pretexting could bypass safeguards | Business/IT background plus security and governance training |

Using Nucamp as your training montage (and staying ethical)

Structured learning gives you a way to turn this awareness into a resume. A program like Nucamp’s Cybersecurity Fundamentals bootcamp is intentionally built around that transition: over 15 weeks, you move through three focused courses - Cybersecurity Foundations, Network Defense and Security, and Ethical Hacking - that together demand about 12 hours per week and stay friendly to people with jobs and families by being 100% online with weekly live workshops capped at 15 students. The tuition, around $2,124 when paid in full (plus a modest registration fee), is deliberately set well below the $10,000+ price tags of many other bootcamps, which matters if you’re changing careers. Along the way you can earn Nucamp’s CySecurity, CyDefSec, and CyHacker certificates and prepare for industry-recognized exams like CompTIA Security+, GIAC GSEC, or EC-Council CEH. More importantly, you get practice thinking like both attacker and defender in authorized labs, where every phishing simulation, scan, or exploit is tightly scoped and legal.

That last part is key. Everything you’ve learned about social engineering in this guide is meant for defense, training, and better design - not for trying stunts on real people or systems without permission. As you move toward a cybersecurity role, the ethical rules are as important as the technical ones: only test systems you own or are explicitly authorized to assess; never run your own “phishing experiments” on coworkers; treat sensitive information you see on the job with strict confidentiality. Within those boundaries, your ability to read the script, spot the beats, and help rewrite risky scenes is exactly what makes you valuable. You’re not just the character who survives the twist; you’re stepping into the crew that keeps the heist from working in the first place.

Director’s cut: changing how you see every message

By now, the lobby scene looks different. That bored guard, the janitor’s small talk, the quick brush of a keycard - they don’t feel like filler anymore. Your inbox, your texts, your chat notifications are the same way. Once you’ve seen how social engineering really works, it’s hard to go back to skimming messages like background noise. Every urgent favor, every “just confirming” link, every surprise invoice starts to feel like a shot you might want to pause and study before you let the story move on.

From reacting to reading the script

The shift isn’t about becoming paranoid; it’s about becoming deliberate. Instead of asking “Is this obviously fake?”, you’re asking “What role is this trying to cast me in, and does that make sense?” You’ve seen how attackers run recon, choose pretexts, and lean on authority and urgency. You’ve walked through email, SMS, QR codes, phone calls, video meetings, even tailgating at the office. You know the red flags and you have playbooks you can run without needing anyone’s permission. That’s what it means to move from first-time viewer (surprised by the twist) to someone who can feel the con building long before the vault door swings.

Keeping your own commentary track running

The threat landscape will keep evolving. Reports like Varonis’s 2026 cybercrime trends overview talk about attackers getting more creative with data theft, deepfakes, and hands-on-keyboard intrusions that look like normal user behavior. But the core of your job as a defender stays the same: slow down when money, access, or sensitive data are on the line; check context, not just cosmetics; verify through channels the attacker can’t see or control. Whether you’re an employee trying not to get burned or a new analyst in a SOC, that commentary track in your head - “What stage of the script is this? What’s the ask? How do I break the scene safely?” - is one of your best tools.

If you choose to turn this awareness into a cybersecurity career, you’re not signing up to become a Hollywood hacker. You’re signing up to be the person in the room who understands both the human story and the technical backdrop well enough to change the ending: to design better processes, tune better alerts, teach better habits, and insist on ethical, legal boundaries around every test you run. The scams won’t stop trying to write you in, but you don’t have to follow the script. You can pause, rewind, and decide, scene by scene, how the story plays out.

Frequently Asked Questions

How have social engineering scams changed in 2026, and what actually stops them?

Scams are far more personalized and AI-polished now - industry work shows over 80% of phishing campaigns use AI and AI-generated lures can drive click rates up to 54% versus ~12% for manual attempts. Effective defenses are simple: verify context (right request, right channel, usual process), add phishing-resistant MFA (FIDO2/WebAuthn), and use out-of-band checks and training that can cut phish-prone rates from ~33.1% to about 4.1% within a year.

What immediate steps can I take today to avoid getting scammed at work or on my phone?

Pause before acting, hover to inspect sender addresses and links, never update bank details from an email - always verify by calling a known number - and avoid tapping unknown short links or QR codes. Also enable strong MFA (prefer app-based or hardware keys) and use a password manager; Business Email Compromise incidents alone averaged roughly $150,000 per case in recent reports, so simple process checks matter.

How should I handle a phone or video call that feels urgent or like it could be a deepfake?

Never perform sensitive actions while still on the incoming call - politely end the call and call back on a corporate directory number or known contact; do not trust caller ID or on-call pressure alone. Vishing volumes have surged (some datasets report ~440% year-over-year increases) and high-profile deepfake incidents have led to multi-million dollar mistakes, so the ‘stop, verify, call back’ ritual is essential.

Aren’t email filters enough to stop modern, hyper-personalized phishing?

No - AI makes messages polymorphic and contextually accurate (reports cite ~76% polymorphic campaigns and >65% use of AI content), so filters alone won’t catch everything. Combine SPF/DKIM/DMARC and modern AI-driven gateways with human verification playbooks and reporting habits to close gaps that automated tools miss.

I want to move into cybersecurity - which entry-level roles focus on defending against social engineering?

Look at SOC analyst (triage phishing alerts), security awareness specialist (design simulations and training), junior ethical hacker (authorized phishing tests), and risk/compliance analyst (process controls); defending the human layer appears across many entry paths. Demand is high - there are roughly 3.5 million unfilled cybersecurity jobs worldwide - so short, practical training programs (e.g., 15-week bootcamps) can help you transition while emphasizing ethical, authorized practice.


Irene Holden

Operations Manager

Former Microsoft Education and Learning Futures Group team member, Irene now oversees instructors at Nucamp while writing about everything tech - from careers to coding bootcamps.