Building REST APIs and AI MCP Endpoints in 2026: The Complete Node.js Guide

By Irene Holden

Last Updated: January 18th 2026

Quick Summary

Yes - you can build both versioned REST APIs and AI-friendly MCP endpoints in Node.js by keeping one shared business-logic layer and exposing predictable versioned routes for human clients while offering a small set of task-oriented MCP tools for LLMs. Use Node.js 18+, TypeScript with @modelcontextprotocol/sdk, Zod for validation, JWTs for auth, and protect your REST surface with express-rate-limit (e.g., 100 requests per 15 minutes); exposing a single manage_tasks MCP tool (list/create/complete/delete) keeps agents robust as your backend evolves.

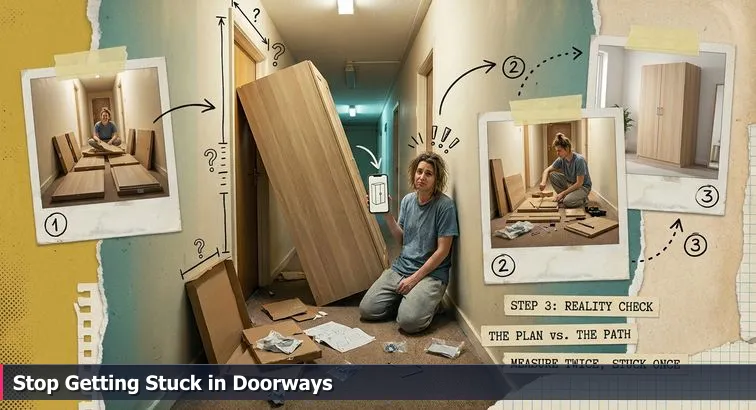

You’ve probably had that feeling where you’ve followed every step of a tutorial, wired up all the routes, hit “run”… and technically everything works. Then the first real mobile client or AI assistant tries to use your API, and suddenly you’re metaphorically wedging a finished wardrobe through a hallway that’s two inches too narrow. Nothing is wrong with the individual pieces - what’s off is the way you measured the space they have to move through.

In API terms, that hallway is the path between your backend and its callers: URLs, versions, auth headers, payload shapes, rate limits, and now, the way AI agents ask for work to be done. Traditional REST tutorials teach you how to assemble endpoints - the digital equivalent of screwing boards together. They rarely ask who will be carrying this thing: a React Native app that hates breaking URL changes, or an AI agent that doesn’t think in “GET /tasks” but in “plan my day and update my to-dos.” As more teams adopt the Model Context Protocol (MCP) - an open standard for connecting language models to tools and data via JSON-RPC, described in detail on the MCP overview on Wikipedia - that second doorway has become just as important as the first.

By now, it’s trivial to ask an AI assistant to “generate an Express API for tasks” and get something runnable. You can do the same for an MCP server, using the official TypeScript SDK, and end up with tools an LLM can technically call. But if you don’t design both doorways up front, you hit the classic stuck-in-the-doorway moment: mobile clients break when you rename routes, AI agents fail when a refactor changes response shapes, and different models struggle to pick the right one out of dozens of tiny, low-level tools. Engineers writing about this shift, like those in the MCP vs REST comparison from Nextaim, keep coming back to the same point: REST should expose predictable resources, while MCP should expose higher-level tasks that match how LLMs reason.

The good news is that you don’t need exotic new skills to avoid these traps. You need a measuring tape, not a fancier instruction manual: solid HTTP basics, comfort with Node.js and Express, and a bit of discipline around versioning, validation, and auth. Those are the humble Allen keys you still have to master, even if AI power tools can scaffold an entire project for you. When you use those fundamentals to design both a versioned REST API for human-centric clients and a focused MCP tool layer for AI agents, you end up with something very different from a copy-pasted tutorial: a wardrobe that slides cleanly through every doorway it has to pass, even as your backend evolves.

Steps Overview

- Measure the Hallway, Not Just the Wardrobe

- Prerequisites and Tools

- Design the Architecture: REST vs MCP

- Initialize Node.js + Express and Add Task Routes

- Harden the REST API (Validation, Auth, Rate Limits, Docs)

- Test the REST API Like a Client and an AI

- Understand MCP: The AI-Facing Doorway

- Build the MCP Server in Node/TypeScript

- Secure and Scale REST + MCP

- Verify Your REST and MCP

- Troubleshoot Common Issues

- Next Steps and Learning Path

- Common Questions

When you’re ready to ship, follow the deploying full stack apps with CI/CD and Docker section to anchor your projects in the cloud.

Prerequisites and Tools

Before you start cutting code, it helps to be very explicit about what you need both in your head and on your machine. In a world where an AI assistant can spin up an Express app or even an MCP server in seconds, your real advantage is knowing enough fundamentals to read that code, tweak it safely, and understand how it behaves when real clients and AI agents start calling it.

On the knowledge side, you’ll be in a good place if you’re comfortable with:

- Basic JavaScript: variables, functions, objects, arrays, and async/await.

- Node.js basics: running scripts with

node, usingnpm, and requiring/importing modules. - HTTP fundamentals: what a request/response is, status codes, headers, and JSON bodies. A quick pass through a hands-on guide like the Postman tutorial on building REST APIs with Node and Express is usually enough background for this project.

On the tooling side, make sure you have the basics installed and ready:

- Node.js 18+ (LTS) from the official downloads, so you get modern language features and stable async behavior.

- A REST client such as Postman, VS Code’s REST client, or even just

curlso you can hit your endpoints like a real consumer. - A code editor you’re comfortable in (VS Code is a solid default for JavaScript and TypeScript work).

For this specific REST + MCP stack, you’ll also install a small set of Node packages that cover the “plumbing” you’d normally have to hand-roll:

- express for the REST server and routing.

- cors to handle cross-origin requests safely.

- express-rate-limit for rate limiting and basic abuse protection.

- zod for runtime validation of incoming JSON payloads.

- jsonwebtoken for simple JWT-based auth.

- swagger-ui-express and swagger-jsdoc to generate minimal REST documentation.

- @modelcontextprotocol/sdk to build the MCP server that AI agents can talk to.

- dotenv to manage environment variables for things like secrets and ports.

It’s absolutely fine - and normal in this era - to lean on AI to generate boilerplate for these tools. The key is treating that AI like a power drill, not a magic wand. As one engineer put it in a Node.js best-practices writeup, “Node.js remains an excellent choice for APIs, but success comes less from novelty and more from discipline.” - wmdn9116, Software Engineer, DEV Community. The discipline here is learning enough JavaScript, HTTP, and Node to be the person holding the measuring tape, not just the one following whatever the autogenerated instructions say.

Design the Architecture: REST vs MCP

Before you touch a line of code, it’s worth stepping back and asking a deceptively simple question: who needs to get through this doorway? In practice, you’re designing two different “hallways” into the same Node.js backend. One is a REST API that web and mobile apps can call with predictable URLs and status codes. The other is an MCP server that AI agents use to perform tasks via JSON-RPC. If you treat both as an afterthought and just let an AI assistant scaffold whatever looks right, you end up with something that runs, but jams the moment a real client or agent tries to squeeze through.

REST: the resource-oriented doorway

For human-centric clients like React or React Native apps, REST is still the low-level assembly of parts: HTTP methods, paths, and JSON payloads. You’ll define a simple shared data model for tasks:

type Task = {

id: string;

title: string;

completed: boolean;

};- GET

/api/v1/tasks - POST

/api/v1/tasks - PUT

/api/v1/tasks/:id - DELETE

/api/v1/tasks/:id

That versioned prefix (/api/v1) is not decoration; guides on scalable Express APIs, like the best-practices writeup on building scalable APIs with Node.js and Express, treat explicit versioning as mandatory so you don’t break existing clients when the wardrobe inevitably gets redesigned.

MCP: the task-oriented doorway for AI agents

AI agents don’t think in “GET /tasks”; they think in “list my tasks” or “complete this task and summarize the result.” That’s where the Model Context Protocol (MCP) comes in: instead of dozens of tiny REST-style tools, you expose a smaller set of task-focused operations over JSON-RPC. For this guide, that’s a single manage_tasks tool with parameters like:

- action:

"list" | "create" | "complete" | "delete" - title?:

string(forcreate) - id?:

string(forcomplete/delete)

This follows what many practitioners call the Layered Tool Pattern: fewer, higher-level tools that map to complete tasks instead of mirroring every REST endpoint. As one analysis of AI integrations puts it, “MCP is designed for how AI models communicate, whereas traditional APIs are optimized for how people and applications communicate.” - Primacy, MCP vs API: How AI Models Communicate vs People.

| Aspect | REST Layer | MCP Layer |

|---|---|---|

| Primary client | Web/mobile apps, services | LLM-based AI agents |

| Design focus | Resources and HTTP verbs | Tasks and outcomes |

| Protocol | HTTP + JSON | JSON-RPC over stdio or HTTP/SSE |

| Change strategy | Versioned URLs (e.g. /api/v1) |

Stable tool names and schemas |

The architectural trick is to keep your core business logic separate from both doorways, then design REST routes for predictable resource access and MCP tools for higher-level tasks. Teams that blur this line - for example, by auto-generating one MCP tool per REST endpoint, as cautioned in comparisons like the one from ELEKS on MCP vs REST APIs - often discover later that their AI agents are just as brittle as their old clients. Taking time now to measure both hallways with solid Node.js and HTTP fundamentals gives you a stack that can evolve without getting stuck in the doorway every time something behind it changes.

Initialize Node.js + Express and Add Task Routes

To get from architecture sketches to something that actually runs, you need a clean starting point in Node.js and Express. Yes, an AI assistant can spit out a full CRUD API in one shot, but taking a few minutes to set up the project yourself makes the rest of this guide much easier to follow and debug. Think of this as cutting and labeling each board before you start assembling the wardrobe: a little care now prevents you from discovering, halfway down the hallway, that nothing lines up.

Step 4.1 - Create the project shell

Start by creating a fresh directory and initializing a Node.js project:

mkdir node-rest-mcp-demo

cd node-rest-mcp-demo

npm init -y

Then install the core dependencies you’ll use throughout the REST portion of the stack:

npm install express cors express-rate-limit zod jsonwebtoken dotenv swagger-ui-express swagger-jsdoc

npm install --save-dev nodemon

Update your package.json to add a dev script that restarts the server on changes:

"scripts": {

"dev": "nodemon server.js"

}

Step 4.2 - Spin up a minimal Express server

Next, create a basic Express server with a health check so you can verify that everything is wired correctly before you add any business logic. Create a file called server.js in the project root:

require('dotenv').config();

const express = require('express');

const cors = require('cors');

const app = express();

const PORT = process.env.PORT || 3000;

// Middlewares

app.use(cors());

app.use(express.json());

// Health check

app.get('/health', (req, res) => {

res.json({ status: 'ok' });

});

// Start server

app.listen(PORT, () => {

console.log(REST API listening on port ${PORT});

});

Run the server with:

npm run dev

Then visit http://localhost:3000/health in your browser or hit it with curl to confirm you get { "status": "ok" }. This kind of small, incremental verification is exactly what practical Node.js API guides, like the step-by-step REST tutorial from Appeak Technologies on building RESTful APIs with Node.js, recommend before layering on databases, auth, or AI integrations.

Step 4.3 - Add in-memory task routes under /api/v1

With the server running, you can add a simple in-memory data store and versioned CRUD routes. First, install uuid for generating task IDs:

npm install uuid

At the top of server.js, after the existing imports, add:

const { v4: uuidv4 } = require('uuid');

let tasks = [];

Now define your REST endpoints under a versioned prefix, /api/v1:

// GET /api/v1/tasks

app.get('/api/v1/tasks', (req, res) => {

res.json(tasks);

});

// POST /api/v1/tasks

app.post('/api/v1/tasks', (req, res) => {

const { title } = req.body;

if (!title || typeof title !== 'string') {

return res.status(400).json({ error: 'title is required' });

}

const task = { id: uuidv4(), title, completed: false };

tasks.push(task);

res.status(201).json(task);

});

// PUT /api/v1/tasks/:id

app.put('/api/v1/tasks/:id', (req, res) => {

const { id } = req.params;

const { title, completed } = req.body;

const task = tasks.find(t => t.id === id);

if (!task) return res.status(404).json({ error: 'Task not found' });

if (typeof title === 'string') task.title = title;

if (typeof completed === 'boolean') task.completed = completed;

res.json(task);

});

// DELETE /api/v1/tasks/:id

app.delete('/api/v1/tasks/:id', (req, res) => {

const { id } = req.params;

const index = tasks.findIndex(t => t.id === id);

if (index === -1) return res.status(404).json({ error: 'Task not found' });

const deleted = tasks.splice(index, 1)[0];

res.json(deleted);

});

Why this “boring” setup matters

This may look like yet another CRUD example, but you’ve quietly made a few decisions that will keep you from getting stuck in the doorway later: a clear project structure, a health check, JSON parsing, and a versioned path (/api/v1/tasks) that you can evolve without instantly breaking your clients. These same fundamentals show up in more advanced tutorials, like the full REST + MongoDB walkthrough from Djamware on building a RESTful API using Node.js, Express, and MongoDB, because they’re the foundations your AI tools and MCP layer will also rely on. Pro tip: resist the urge to let AI skip past this setup; understanding how this server boots and how each route behaves is the Allen key you’ll keep reaching for as your stack grows more sophisticated.

Harden the REST API (Validation, Auth, Rate Limits, Docs)

Once your basic routes are working, the real work begins: turning “it runs on my machine” into an API that won’t crumble the first time a buggy frontend, a noisy neighbor, or an AI agent pushes on it. This is where you harden the REST layer with validation, auth, rate limits, and documentation. Modern guides on secure Node backends - including those looking at how APIs feed AI tools, like Snyk’s discussion of safe Node.js servers for LLMs - all stress that input validation and tight contracts are mandatory to avoid everything from injection bugs to “bloated JSON” responses that leak too much context (Snyk’s article on building Node.js servers for LLMs is a good example of this mindset).

Step 5.1 - Add Zod validation and consistent errors

Create a validation.js file to centralize your schemas:

const { z } = require('zod');

const createTaskSchema = z.object({

title: z.string().min(1),

});

const updateTaskSchema = z.object({

title: z.string().min(1).optional(),

completed: z.boolean().optional(),

});

function validate(schema) {

return (req, res, next) => {

const result = schema.safeParse(req.body);

if (!result.success) {

return res.status(400).json({

error: 'Validation failed',

details: result.error.issues,

});

}

req.validatedBody = result.data;

next();

};

}

module.exports = { createTaskSchema, updateTaskSchema, validate };

Wire it into your routes in server.js so every create/update request is checked before it reaches your logic:

const { createTaskSchema, updateTaskSchema, validate } = require('./validation');

// POST /api/v1/tasks

app.post('/api/v1/tasks', validate(createTaskSchema), (req, res) => {

const { title } = req.validatedBody;

const task = { id: uuidv4(), title, completed: false };

tasks.push(task);

res.status(201).json(task);

});

// PUT /api/v1/tasks/:id

app.put('/api/v1/tasks/:id', validate(updateTaskSchema), (req, res) => {

const { id } = req.params;

const { title, completed } = req.validatedBody;

const task = tasks.find(t => t.id === id);

if (!task) return res.status(404).json({ error: 'Task not found' });

if (typeof title === 'string') task.title = title;

if (typeof completed === 'boolean') task.completed = completed;

res.json(task);

});

Pro tip: keep your error shape consistent (e.g., always { error, details } on failure). That makes life easier for frontend devs and AI agents that need to parse and react to errors programmatically.

Step 5.2 - Add rate limiting and JWT auth

To protect your API from abuse, add a rate limiter in server.js using express-rate-limit:

const rateLimit = require('express-rate-limit');

const apiLimiter = rateLimit({

windowMs: 15 * 60 * 1000, // 15 minutes

max: 100, // max 100 requests per window per IP

});

app.use('/api/', apiLimiter);

Then create auth.js for a simple JWT auth flow:

const jwt = require('jsonwebtoken');

const JWT_SECRET = process.env.JWT_SECRET || 'dev-secret'; // use env in real apps

function authMiddleware(req, res, next) {

const authHeader = req.headers['authorization'];

if (!authHeader?.startsWith('Bearer ')) {

return res.status(401).json({ error: 'Missing or invalid Authorization header' });

}

const token = authHeader.substring(7);

try {

const payload = jwt.verify(token, JWT_SECRET);

req.user = payload;

next();

} catch (err) {

return res.status(401).json({ error: 'Invalid token' });

}

}

function issueDevToken(userId = 'demo-user') {

return jwt.sign({ sub: userId }, JWT_SECRET, { expiresIn: '1h' });

}

module.exports = { authMiddleware, issueDevToken };

Expose a dev-only token route and protect your tasks in server.js:

const { authMiddleware, issueDevToken } = require('./auth');

// Dev-only route to get a token

app.get('/dev/token', (req, res) => {

const token = issueDevToken();

res.json({ token });

});

// Protect task routes

app.use('/api/v1/tasks', authMiddleware);

Warning: never ship JWT_SECRET hard-coded or leave /dev/token enabled in production. Move secrets into real environment variables and lock down any dev-only helpers before you let outside traffic anywhere near your API.

Step 5.3 - Wire up minimal Swagger docs

Finally, document your REST contract so both humans and tools can discover it. Create swagger.js:

const swaggerJsdoc = require('swagger-jsdoc');

const swaggerUi = require('swagger-ui-express');

const options = {

definition: {

openapi: '3.0.0',

info: {

title: 'Task API',

version: '1.0.0',

},

},

apis: ['./server.js'], // annotate routes here

};

const swaggerSpec = swaggerJsdoc(options);

function setupSwagger(app) {

app.use('/api-docs', swaggerUi.serve, swaggerUi.setup(swaggerSpec));

}

module.exports = { setupSwagger };

Then initialize it in server.js:

const { setupSwagger } = require('./swagger');

setupSwagger(app);

Annotate your routes with JSDoc-style comments and you’ll get interactive docs at /api-docs. That single page becomes the contract your React app, your mobile client, and eventually your MCP server can rely on. AI tools can help you generate the annotations and even the OpenAPI spec, but understanding why you validate inputs, limit requests to 100 per 15 minutes, require a Bearer token, and publish a stable spec is what keeps your API from behaving like a wardrobe that only fits through the hallway on a good day.

Test the REST API Like a Client and an AI

Now that your REST API is up and running, you need to stop thinking like the person who built the wardrobe and start thinking like the people trying to carry it through the hallway. That means hitting your endpoints the way a real mobile app, web frontend, or AI agent would, and checking not just that they respond, but that they respond predictably.

Step 6.1 - Manual tests with a REST client

Use Postman, VS Code’s REST client, or plain curl so you can see exactly what your API sends over the wire. Run through a basic flow:

- Get a development token:

Copy thecurl http://localhost:3000/dev/tokentokenfield from the JSON response. - Create a task:

curl -X POST http://localhost:3000/api/v1/tasks \ -H "Authorization: Bearer YOUR_TOKEN_HERE" \ -H "Content-Type: application/json" \ -d '{"title": "Learn MCP"}' - List tasks:

curl http://localhost:3000/api/v1/tasks \ -H "Authorization: Bearer YOUR_TOKEN_HERE" - Update and delete tasks with the

idfrom the create response.

While you do this, pay attention to three things: response bodies are valid JSON, the shape is consistent across calls, and you’re getting appropriate HTTP status codes like 201 for successful creates, 404 when a task doesn’t exist, and 400 when validation fails. Pro tip: save these requests as a Postman collection so you can quickly rerun them after any refactor.

Step 6.2 - Think like an AI consumer

Next, pretend you’re an AI agent that only sees JSON, not your code. From that perspective, your API contract needs to be boringly clear: stable URLs, straightforward field names, and error responses that are easy to parse. When a request fails, you should always get a machine-friendly structure (for example, { "error": "...", "details": [...] }) rather than a one-off message. This is the same principle highlighted in comparisons of AI and human APIs, where authors note that agents depend on structured, predictable responses to reason effectively over tools (Roo Code’s MCP vs API breakdown leans hard on this distinction).

Try a few “ugly” calls on purpose: send an empty body to your create route, omit the Authorization header, or use a random UUID for the task ID. Check that:

- Validation errors always use the same JSON layout.

- Auth failures are clearly distinguished from validation and not-found errors.

- No stack traces or internal details appear in the response.

"MCP is designed around the way models consume and act on structured context, which means the shape and stability of your responses matter just as much as the raw data itself." - tl;dv Team, The Comprehensive Guide to Model Context Protocol (tldv.io)

If your REST layer behaves well under these tests, you’ve effectively proven that your “hallway” is straight, well-lit, and clearly labeled. That’s what will let a future MCP server sit on top of it and let AI agents call into your system without getting jammed halfway through every time a detail of your backend changes.

Understand MCP: The AI-Facing Doorway

Up to this point, you’ve been focused on the “front door” your web and mobile clients use: HTTP, URLs, JSON. MCP is the side entrance built specifically for AI agents - a doorway where language models don’t have to guess which URL to call or what headers to send, they just invoke named tools with structured parameters and get back structured results. Instead of juggling dozens of endpoints, an agent sees a compact menu of capabilities it can reason about.

What MCP actually is

The Model Context Protocol is an open standard for connecting AI systems to external tools and data using JSON-RPC 2.0. Instead of inventing a different integration story for every model vendor, MCP defines a consistent way to describe what your backend can do and how to call it. According to an overview from Spacelift, MCP “provides a single, standardized way to plug tools and data sources into any compliant LLM runtime,” which replaces a lot of brittle, one-off glue code (Spacelift’s Model Context Protocol explainer dives into this in more detail).

"MCP acts as a standardized bridge between AI models, tools, and data sources, replacing brittle one-off integrations with a reusable contract."

- Spacelift Team, What Is MCP? Model Context Protocol Explained Simply

Tools and resources: MCP’s building blocks

In REST, your mental model is “resources + verbs” (tasks, users, projects, and GET/POST/PUT/DELETE). In MCP, the core pieces are tools and resources. A tool is a callable operation like manage_tasks with a defined input schema (for example, an action field and optional title or id). A resource is a named data source - like a log file or a task list - that an agent can browse or read. Both are described with JSON schemas so the AI runtime knows exactly what parameters are required and what shape the output will take, without scraping human-written docs.

| Concept | REST API | MCP Server |

|---|---|---|

| Primary client | Browsers, mobile apps, services | LLM-based agents and tools |

| Interface | URLs, HTTP methods, JSON bodies | Named tools & resources with schemas |

| Message format | Arbitrary JSON per endpoint | JSON-RPC 2.0 requests/responses |

| Discovery | OpenAPI / Swagger docs | Self-describing tool & resource manifests |

Transports: stdio vs HTTP + SSE

MCP doesn’t care whether your server lives locally or in the cloud; it defines behavior over a few simple transports. A local tool might expose MCP over stdio, which makes it easy to plug into editors or CLIs. A remote service might speak MCP over HTTP + Server-Sent Events (SSE), so an AI platform can connect to it much like any other web service. The underlying pattern is always the same: JSON-RPC messages flowing between a client (the AI runtime) and a server (your Node process), as described in Stytch’s introduction to using MCP with modern auth and identity systems (Stytch’s Model Context Protocol overview).

Why MCP design feels different from REST

The big mental shift is that MCP is task-oriented. Instead of one tool per endpoint, you design a small number of higher-level tools - like manage_tasks - that encapsulate whole flows (list, create, complete, delete) behind a single, well-typed interface. This “layered tool” approach gives AI agents a clean, well-sized doorway: fewer choices, clearer semantics, and less chance of getting stuck trying to guess which of 27 nearly identical operations to call. Your foundational skills in Node.js, HTTP, and JSON are what let you carve out that doorway intentionally, instead of letting auto-generated code dictate how AI has to squeeze into your system.

Build the MCP Server in Node/TypeScript

With your REST API solid, the next step is to give AI agents their own doorway into the same logic by building an MCP server in Node and TypeScript. Instead of reinventing your task features, you’ll wrap them in a single manage_tasks tool that LLMs can call via JSON-RPC. TypeScript helps here by catching schema mismatches at compile time, and it’s the same stack used in reference projects like Anthropic’s Node/TypeScript MCP server example in the official skills repository on GitHub.

"With just a few dozen lines of TypeScript, you can expose powerful capabilities to AI agents by defining clear MCP tools around your existing logic." - Christian Grech, Developer & Author, Level Up Coding

Step 8.1 - Add TypeScript and the MCP SDK

Start by installing TypeScript, the MCP SDK, and basic type definitions, then initializing a TypeScript config:

npm install --save-dev typescript ts-node @types/node

npm install @modelcontextprotocol/sdk zod

npx tsc --init

In tsconfig.json, ensure you’re targeting modern Node by setting options similar to:

{

"compilerOptions": {

"module": "commonjs",

"target": "ES2020",

"esModuleInterop": true,

"strict": true

}

}

Update package.json so you have a script to run the MCP server:

"scripts": {

"dev": "nodemon server.js",

"mcp": "ts-node mcp-server.ts"

}

Pro tip: keep the REST and MCP processes separate (different scripts) so you can iterate on one doorway without accidentally breaking the other while you’re still debugging.

Step 8.2 - Share task logic between REST and MCP

Next, extract your core task operations into a shared service that both the REST layer and the MCP server can use. Create taskService.js in the project root:

const { v4: uuidv4 } = require('uuid');

let tasks = [];

function listTasks() {

return tasks;

}

function createTask(title) {

const task = { id: uuidv4(), title, completed: false };

tasks.push(task);

return task;

}

function updateTask(id, updates) {

const task = tasks.find(t => t.id === id);

if (!task) return null;

if (typeof updates.title === 'string') task.title = updates.title;

if (typeof updates.completed === 'boolean') task.completed = updates.completed;

return task;

}

function deleteTask(id) {

const index = tasks.findIndex(t => t.id === id);

if (index === -1) return null;

const [deleted] = tasks.splice(index, 1);

return deleted;

}

module.exports = { listTasks, createTask, updateTask, deleteTask };

Refactor server.js to call this service instead of manipulating tasks directly:

const { listTasks, createTask, updateTask, deleteTask } = require('./taskService');

// GET /api/v1/tasks

app.get('/api/v1/tasks', (req, res) => {

res.json(listTasks());

});

// POST /api/v1/tasks

app.post('/api/v1/tasks', validate(createTaskSchema), (req, res) => {

const task = createTask(req.validatedBody.title);

res.status(201).json(task);

});

// PUT /api/v1/tasks/:id

app.put('/api/v1/tasks/:id', validate(updateTaskSchema), (req, res) => {

const updated = updateTask(req.params.id, req.validatedBody);

if (!updated) return res.status(404).json({ error: 'Task not found' });

res.json(updated);

});

// DELETE /api/v1/tasks/:id

app.delete('/api/v1/tasks/:id', (req, res) => {

const deleted = deleteTask(req.params.id);

if (!deleted) return res.status(404).json({ error: 'Task not found' });

res.json(deleted);

});

Step 8.3 - Implement the MCP server over stdio

Now you can build an MCP server that calls into the same taskService but exposes everything as a single manage_tasks tool. Create mcp-server.ts:

import { McpServer } from '@modelcontextprotocol/sdk/server';

import { Tool, z } from '@modelcontextprotocol/sdk/types';

import { StdioServerTransport } from '@modelcontextprotocol/sdk/server/stdio';

const taskService = require('./taskService');

// 1. Define the tool schema with Zod

const manageTasksParams = z.object({

action: z.enum(['list', 'create', 'complete', 'delete']),

title: z.string().min(1).optional(),

id: z.string().optional(),

});

// 2. Create MCP server instance

const server = new McpServer({

name: 'task-mcp-server',

version: '1.0.0',

});

// 3. Register the tool

server.tool('manage_tasks', {

description: 'List, create, complete, or delete tasks',

inputSchema: manageTasksParams,

async handler({ input }) {

const { action, title, id } = input;

switch (action) {

case 'list': {

const tasks = taskService.listTasks();

return { content: [{ type: 'text', text: JSON.stringify(tasks) }] };

}

case 'create': {

if (!title) {

throw new Error('title is required for create');

}

const task = taskService.createTask(title);

return { content: [{ type: 'text', text: JSON.stringify(task) }] };

}

case 'complete': {

if (!id) throw new Error('id is required for complete');

const updated = taskService.updateTask(id, { completed: true });

if (!updated) throw new Error('Task not found');

return { content: [{ type: 'text', text: JSON.stringify(updated) }] };

}

case 'delete': {

if (!id) throw new Error('id is required for delete');

const deleted = taskService.deleteTask(id);

if (!deleted) throw new Error('Task not found');

return { content: [{ type: 'text', text: JSON.stringify(deleted) }] };

}

default:

throw new Error('Unknown action');

}

},

} as Tool<typeof manageTasksParams>);

// 4. Start the MCP server over stdio

async function main() {

const transport = new StdioServerTransport();

await server.connect(transport);

}

main().catch(err => {

console.error('Failed to start MCP server', err);

process.exit(1);

});

Start the MCP server with:

npm run mcp

From there, any MCP-aware client or agent framework can connect over stdio, discover the manage_tasks tool, and call it with structured parameters. You’ve effectively added a second, AI-friendly doorway to the same Node.js logic without duplicating business rules - and because both REST and MCP sit on top of taskService, you can evolve the internals later without forcing every client or agent to squeeze through a different, awkwardly shaped opening each time you refactor.

Secure and Scale REST + MCP

Once both of your doorways are open - REST for apps, MCP for AI agents - the next challenge is making sure they don’t become easy entry points for abuse or fall over under load. This is where classic web API hygiene (auth, rate limits, TLS) meets newer AI-specific concerns like over-permissive tools and “context bloat,” where you send far more data to a model than it needs. The same Node.js fundamentals that made your first API work are what you’ll lean on here to keep both layers secure and scalable.

Secure the edges before anything else

At the REST layer, you already have a few important guardrails: JWT auth, rate limiting, and structured validation. In production, you’d put those behind HTTPS and often an API gateway that can enforce things like IP allowlists and per-client quotas. MCP adds another dimension: instead of exposing endpoints directly, you’re exposing tools over stdio or HTTP/SSE, and authentication is usually handled at the transport level (API keys, OAuth tokens, or environment-provided credentials). A practical approach is to terminate TLS and auth at an edge service or gateway, then only let authenticated, authorized connections reach your MCP server. As one identity provider notes in their MCP authorization design guide, “securing MCP is about treating tool access as an API in its own right, with the same rigor you would apply to any externally exposed service” - Curity Team, Design MCP Authorization to Securely Expose APIs (curity.io).

Control what AI is allowed to see and do

For MCP specifically, the principle of least privilege matters even more than in traditional REST. Each tool you expose is effectively a capability the AI can exercise, so you want to keep the surface area small and focused: don’t give an assistant that only needs to manage tasks access to billing or admin tools. Similarly, treat every response as potential “context” that might be carried forward into later prompts; strip out internal IDs, secrets, or verbose debug fields before returning data, and avoid streaming full database rows when a small summary will do. This helps with both security and cost: sending fewer, smaller payloads keeps you away from the “bloated JSON” anti-pattern and reduces how much token budget your agents burn processing unnecessary detail.

Scale Node.js for I/O and AI workloads

On the performance side, REST + MCP put you squarely in Node’s sweet spot - lots of concurrent, I/O-bound work shuttling JSON in and out - but you still need to avoid blocking the event loop with CPU-heavy tasks like complex report generation or large JSON transformations. Offload heavy computation to worker_threads or separate services, and scale horizontally with containers or the built-in cluster module. Observability (logs, metrics, traces) becomes critical for both layers, so you can see which tools, routes, or clients are causing hot spots. In a recent discussion of where Node fits into modern backends, members of the core team emphasized that it excels when you treat it as the orchestrator of I/O and push CPU-intensive work elsewhere (Software Engineering Daily’s Node.js in 2026 interview goes deep on this balance).

| Concern | REST Layer | MCP Layer |

|---|---|---|

| Authentication | JWT / OAuth on HTTP requests | API keys / OAuth on MCP transport |

| Authorization | Role-based checks per route | Per-tool access control, least privilege |

| Abuse protection | Rate limits, IP filters, WAF rules | Tool quotas, invocation logging, context limits |

| Scaling strategy | Horizontal scaling, caching, worker offload | Same Node scaling + careful payload sizing for LLMs |

Verify Your REST and MCP

By this stage, you’ve built two entrances into the same backend: a REST API for apps and an MCP server for AI agents. Verifying that everything really works is less about “does it respond?” and more about “does it behave consistently when stressed, refactored, and called by something that isn’t me?” This is where checklists help you prove that both doorways are wide enough and stable enough for real clients to walk through without getting wedged halfway.

REST verification checklist

First, exercise the REST API exactly like a front-end or mobile app would. Confirm that:

GET /healthreturns{ "status": "ok" }with a 200 status.GET /dev/tokenreturns a JWT, and using that token in anAuthorization: Bearer ...header lets you:- Create tasks via

POST /api/v1/tasks. - List tasks via

GET /api/v1/tasks. - Update and delete tasks via

PUT/DELETE /api/v1/tasks/:id.

- Create tasks via

/api-docsshows your routes in Swagger UI and matches the behavior you see withcurlor Postman.- Sending invalid bodies (like an empty

title) returns a 400 with a clearerroranddetailsarray from Zod, not a generic 500. - Repeatedly calling the API from one IP eventually hits your rate limit (e.g., more than 100 requests in 15 minutes under

/api/).

As a stretch test, change your path from /api/v1/tasks to /api/v2/tasks and leave /api/v1 as a thin compatibility layer that proxies to v2. If your existing clients keep working, you’ve proven that you can upgrade the wardrobe design without ripping out the hallway your users already depend on.

MCP verification checklist

With the MCP server running (npm run mcp), switch perspectives and test it like an AI runtime would. Use an MCP-compatible client or agent framework and:

- Connect to your

task-mcp-serverover stdio. - Ask the AI to “List my current tasks,” “Create a task called ‘Ship MCP demo’,” and “Mark that task as complete.”

- Watch your server logs to confirm that the

manage_taskstool is invoked withactionvalues like"list","create", and"complete", and that the JSON it returns lines up with the tasks you see from the REST API.

Then refactor something under the hood: change how tasks are stored or tweak the REST endpoints, but keep the MCP tool name and schema exactly the same. If your AI assistant continues to behave correctly, you’ve validated what MCP advocates call the “wrapper pattern” - using MCP as a stable layer that shields agents from backend churn. As one DZone article on upgrading Node backends for AI notes, “the simplest way to adopt MCP is to wrap existing RESTful services rather than rebuild them from scratch, preserving a stable contract for agents while the underlying APIs evolve” - DZone Editorial Team, Transform Your Node.js REST API into an AI-Ready MCP Server.

Career verification: skills that actually transfer

Finally, zoom out and verify your own skills. If you can confidently explain how your REST API works (routes, status codes, auth, validation), how your MCP server exposes tools (JSON-RPC, schemas, transports), and how both reuse the same business logic, you’re ahead of a lot of “it compiles” code that’s floating around. Analyses comparing MCP and REST, like the breakdown from Nextaim on MCP vs REST APIs, point out that teams who succeed combine solid HTTP and Node foundations with an understanding of task-oriented design for AI agents. That combination is what turns your project from a fragile, AI-generated demo into a wardrobe that slides smoothly through every doorway it needs to pass: browsers, mobile clients, and AI assistants alike.

Troubleshoot Common Issues

Even with a solid design, it’s normal for things to go sideways the first time a real client or AI agent hits your stack. Requests start coming back with mysterious 401s, Zod throws validation errors on bodies you thought were fine, the rate limiter suddenly answers everything with 429, or your MCP client insists it “can’t find any tools.” The goal here isn’t to memorize every possible failure, but to build a simple checklist you can run through whenever the wardrobe seems stuck in the doorway.

Debugging REST issues: auth, validation, and limits

Most REST problems come down to a handful of repeat offenders. When a request fails, check these in order:

- Authorization header: Confirm it’s present and correctly formatted. It must be exactly

Authorization: Bearer YOUR_JWT_HERE, with no extra spaces or missing “Bearer”. A missing or malformed header should trigger your401branch inauthMiddleware. - Token validity: If you see

Invalid token, regenerate one viaGET /dev/tokenand verify yourJWT_SECRETin.envmatches the one used to sign it. - Zod validation: A

400withValidation failedmeans the body doesn’t match your schema. Logresult.error.issuestemporarily to see which field is wrong and adjust either the client payload or the schema. - Rate limits: If responses suddenly switch to

429 Too Many Requests, you’ve hit the max 100 requests per 15 minutes threshold. For debugging, you can raisemaxor shortenwindowMs, but don’t forget to restore sane values.

Pro tip: log the method, path, status code, and a short error key (not the entire stack trace) for each failed request. That gives you enough signal to debug without dumping internal details into your logs or responses.

Tracking down MCP-specific problems

MCP adds its own class of “it runs, but the agent can’t do anything” bugs. When an AI client reports missing tools or fails to act on results, work through these checks:

- Transport and connection: Make sure the MCP server is actually running (

npm run mcp) and that your client is pointed at the right stdio/command. A misconfigured command path is a common cause of “no tools available.” - Tool registration: Confirm

server.tool('manage_tasks', ...)is executed and that there are no runtime errors during startup. Logging tool registration once at boot helps. - Schema mismatches: If an agent sends

{ action: "create" }withouttitle, the Zod schema inmanageTasksParamswill reject it. For debugging, loginputinside the handler to see what parameters the client actually sends. - Payload size and shape: Return only the fields the agent needs. Oversized responses increase token usage and can confuse planning logic; several MCP primers, including Airbyte’s overview of what Model Context Protocol is and how it works, warn about sending unnecessary detail into an LLM’s context window.

"Effective MCP debugging starts with the contract: make sure tool schemas and responses say exactly what the model can rely on, and nothing more." - Red Hat AI Engineering Team, Building Effective AI Agents with MCP, developers.redhat.com

Let AI help you debug - without giving up control

AI assistants can be genuinely useful when you’re stuck: paste in a failing request/response pair and ask for likely causes, or have an LLM generate curl commands that hit all your routes and tools. Just keep a tight grip on the measuring tape. Always cross-check suggestions against your actual schemas, auth rules, and logs, and never paste secrets like JWT_SECRET into public tools. As one guide comparing MCP to traditional APIs puts it, AI agents and humans rely on the same thing in the end: clear, stable contracts and predictable behavior (Tallyfy’s explainer on MCP, agents, and REST APIs walks through this parallel). When you treat AI as a power tool that helps you test and explore, not as an oracle that rewrites your stack, you’ll resolve issues faster without accidentally loosening the screws that keep your REST and MCP doorways secure.

Next Steps and Learning Path

Standing back from your finished REST + MCP stack, the most valuable next step isn’t adding a dozen more endpoints or tools - it’s deciding how you’ll keep sharpening the skills that made this possible in the first place. You’ve seen how far basic JavaScript, Node, HTTP, and a bit of architectural thinking can take you, especially when you use AI as a power tool instead of a crutch. The question now is how to turn this one project into a repeatable learning path that moves you toward real full stack roles, not just one-off demos.

Deepen your full stack fundamentals

If you’re early in your journey or switching careers, the best investment over the next few months is still a solid, end-to-end JavaScript foundation. That means getting more comfortable with React on the frontend, React Native for mobile, and Node + Express + MongoDB on the backend - the same stack you just used to build versioned REST routes and an MCP server. Nucamp’s Full Stack Web and Mobile Development bootcamp is built around exactly this path: a 22-week, 100% online program with weekly 4-hour live workshops (capped at 15 students) and a time commitment of about 10-20 hours per week. Early-bird tuition is $2,604, which is significantly lower than the $15,000+ price tags you see at many other bootcamps, and you get four dedicated weeks at the end to ship a full stack portfolio project that looks a lot like a more polished version of the API you just built.

Add AI and product thinking on top

Once you’re comfortable building and shipping full stack apps, the next lever is learning how to turn those skills into AI-powered products. Nucamp’s Solo AI Tech Entrepreneur bootcamp is a 25-week follow-on designed for exactly that: you come in with JavaScript and a modern framework under your belt, and you spend half a year learning prompt engineering, integrating LLMs like OpenAI and Claude, and wiring agents into real backends. Along the way you expand your toolkit with Svelte, Strapi, PostgreSQL, Docker, and GitHub Actions, and you graduate with a deployed SaaS product that includes authentication, payments through providers like Stripe or Lemon Squeezy, and global deployment. Tuition is $3,980 with early-bird options, and the format mirrors the full stack bootcamp: online, workshop-based, and capped class sizes so you can actually get questions answered.

Map out your next 12 months

To turn all of this into a concrete plan, think in stages rather than trying to learn everything at once. For many beginners, a 4-week Web Development Fundamentals course (around $458) is a gentle on-ramp, followed by the 22-week Full Stack Web and Mobile Development bootcamp, and then the 25-week Solo AI Tech Entrepreneur program - roughly an 11-month path that mirrors Nucamp’s Complete Software Engineering track in scope, but with a stronger focus on AI-era product skills. Along the way you can branch into focused options like Front End Web and Mobile Development (17 weeks), Back End, SQL and DevOps with Python (16 weeks), Cybersecurity Fundamentals (15 weeks), or AI Essentials for Work (15 weeks, $3,582) depending on where you want to specialize. Industry roadmaps, like a recent web development guide outlining skills and tools for modern full stack careers, keep underscoring the same message: developers who understand HTTP, JavaScript, and backend architecture - and know how to pair those with AI tools - are the ones who stay employable. If you keep using AI to accelerate your learning while you deliberately practice the “Allen key” fundamentals in structured programs like these, you’re building a career that can adapt no matter how much the tooling or buzzwords change.

Common Questions

Can I build both production-ready REST APIs and MCP endpoints with Node.js in 2026?

Yes - Node.js (18+) is well suited to both: use Express for a versioned REST layer and the @modelcontextprotocol/sdk for MCP tools, keeping shared business logic between them. In practice teams run REST and MCP as separate processes, protect REST with JWTs and rate limits (e.g., 100 requests per 15 minutes) and expose a small set of stable MCP tools so AI agents aren’t brittle.

Do I have to use TypeScript to build an MCP server, or is plain JavaScript acceptable?

Plain JavaScript will work, but TypeScript is recommended because it catches schema mismatches at compile time and makes MCP tool contracts safer. Many examples use TypeScript with Zod and the MCP SDK, but you can start in JS and migrate once your schemas stabilize.

How can I prevent AI agents from breaking when I refactor my REST routes or response shapes?

Keep a stable contract: version your REST URLs (e.g., /api/v1), keep MCP tool names and schemas unchanged, and share core business logic so changes are internal only. As a strategy, run a compatibility layer (leave /api/v1 in place while adding /api/v2) and return consistent machine-friendly errors like { error, details } so agents can parse failures reliably.

My MCP client reports 'no tools available' - what should I check first?

Verify the MCP server is running (npm run mcp), that your transport is correct (stdio or HTTP+SSE), and that server.tool('manage_tasks', ...) actually executed at startup; add a boot log for tool registration. Also inspect incoming inputs for schema mismatches (log the input inside the handler) and confirm the client invokes the right command path.

How is securing MCP different from securing a normal REST API?

Both need strong auth, rate limits, and validation, but MCP often handles authentication at the transport level (API keys or OAuth) and requires per-tool least-privilege access so agents can’t reach unrelated capabilities. Additionally, limit and sanitize MCP responses to avoid leaking secrets or sending oversized payloads (which also reduces token costs for LLMs).

Follow the hands-on walkthrough titled how to deploy a MERN app to Netlify, Railway, and MongoDB Atlas if you want a tested recipe.

Product teams compare the cross-platform framework comparison: Flutter and React Native to pick the right stack.

Use this comprehensive guide to top full stack interview topics as your pantry map before a live coding round.

Follow a focused step-by-step guide to adding LLM-powered summaries to your app that emphasizes safety and testing.

See the best frameworks for AI-ready full stack apps when you plan LLM integrations.

Irene Holden

Operations Manager

Former Microsoft Education and Learning Futures Group team member, Irene now oversees instructors at Nucamp while writing about everything tech - from careers to coding bootcamps.