Why AI Won't Replace Full Stack Developers in 2026 (But Will Change Your Job)

By Irene Holden

Last Updated: January 18th 2026

Key Takeaways

AI won’t replace full stack developers in 2026 because models accelerate scaffolding but can’t take on system architecture, product judgment, security, or incident ownership - the human skills that actually keep products running. Most developers already use AI daily (about 84%) yet trust in it is low (around 33%), and firms are compressing junior roles (entry-level hiring down roughly 25%), so your job will shift toward orchestrating AI, owning reliability, and making higher-order decisions.

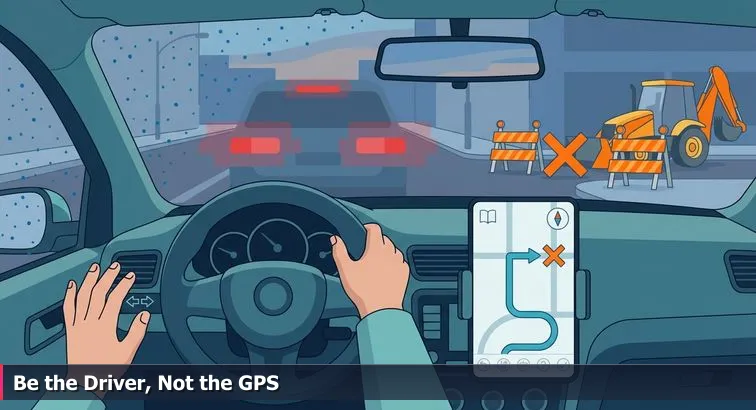

You’re sitting in your car, ten minutes from an interview that could change your career. The navigation calmly says, “Turn right,” but the street ahead is a mess of orange barricades and torn-up asphalt. Your turn signal ticks, wipers squeak, and the blue arrow insists on an impossible turn while traffic piles up behind you. That tiny spike of panic - that feeling of having instructions but no usable path - is exactly where a lot of aspiring and working developers find themselves with AI in 2026.

Almost everyone is now running some kind of AI coding assistant, but very few people fully trust it. The 2025 Stack Overflow Developer Survey reports that 84% of developers use AI tools daily, up from 76% the year before, yet trust in those tools dropped to 33% from 43%. The top frustration for 66% of respondents is AI code that’s “almost right but not quite,” because it creates extra debugging work instead of saving time. At the same time, around 69% of AI agent users say they do feel more productive overall, which matches what many devs describe: faster output, but more second-guessing and cleanup. Stack Overflow itself sums this up as developers being “willing but reluctant” in their expanded analysis of AI adoption and trust.

This is the AI trust gap: we’re all driving with the GPS on, but nobody’s sure it won’t send us into a blocked-off street.

- We use AI like a GPS we can’t turn off - every editor, every IDE, every tutorial seems to have it baked in.

- We don’t fully trust it, especially once the “road” (your codebase, your infrastructure, your product constraints) gets messy.

- The work quietly shifts from “type code” to “read the map, judge the route, spot bad turns, and improvise when there’s construction and no clear detour sign.”

That’s why the real career question right now isn’t “Will AI replace full stack developers?” so much as “Are you just following turn-by-turn directions, or do you actually understand the city?” In other words: when the AI says “turn right” and you see nothing but construction cones, can you zoom out, reason about the whole system, and still get your team where it needs to go?

This guide is about closing that gap. We’ll look at, in concrete terms:

- What AI is genuinely good at across the full stack - and where it falls apart on real projects.

- How teams are changing, including the uncomfortable compression of junior roles.

- Which skills make full stack developers hard to replace, even as AI writes more of the raw code.

- How to build a learning and career plan that treats AI as standard equipment on the dashboard, not magic or doom.

In This Guide

- Why the AI Panic Feels Like a Detour

- What AI Actually Does Today and Where It Fails

- Why AI Is Compressing Teams, Not Replacing Developers

- How AI Is Reshaping Entry-Level Jobs

- Core Skills AI Still Can’t Touch

- Task Map: What to Automate, What to Pair, What to Own

- The New Core Skill Stack for 2026 Full Stack Devs

- A Practical Day-to-Day Workflow for Working with AI

- Upskilling Path: From Beginner to AI-Ready Full Stack

- Level Up: Building AI-Powered Products and Agents

- A 12-Month Roadmap to Stay Employable in the AI Era

- Conclusion: Be the Driver, Not the GPS

- Frequently Asked Questions

When you’re ready to ship, follow the deploying full stack apps with CI/CD and Docker section to anchor your projects in the cloud.

What AI Actually Does Today and Where It Fails

Put simply, today’s AI coding tools are extremely good at patterns and extremely bad at reality. They’re like a GPS that can sketch beautiful routes on a clean, up-to-date map - but once you hit construction cones, weird back alleys, or a missing street sign, they start confidently sending you straight into a dead end. In code, that means AI excels at scaffolding components, wiring up CRUD endpoints, and churning out tests, but routinely falls apart on messy, interconnected logic and real production constraints.

Frontend: fast React scaffolding, clumsy real UX

- On the UI side, AI is strong at generating React components, Tailwind markup, and even turning design briefs into JSX. Frontend trend reports note that AI is already automating repetitive UI building and accessibility improvements like alt text and labels, especially in React-heavy stacks, as highlighted in The Software House’s overview of AI-driven frontend trends.

- However, it doesn’t understand why a layout should be that way, how to trade off performance on low-end Android devices, or how multi-step flows, offline states, and ugly edge cases actually play out. Developers using tools like Claude Code and Cursor frequently report that generated frontends “implode” when asked to refactor or maintain logic across more than about 30-50 lines in one go.

- In practice, AI can spit out a decent React signup form component, but it will not decide you need rate limiting, design password rules that balance UX and security, or handle a lost network connection in the middle of a request. Those are “read the road” decisions, not pattern-matching tasks.

Backend & APIs: boilerplate wizard, architecture amateur

On the server, AI shines at boilerplate: generating CRUD endpoints in Express or Django, mapping ORM models to tables, and creating basic validation and middleware. Guides on AI’s coding capabilities point out that pattern-based server code is exactly where large language models excel, because they can remix thousands of similar examples from open source and tutorials, as discussed in Graphite’s deep dive on what AI can and can’t code. Where it stumbles is exactly where real backends get interesting: deciding on microservices versus a monolith, designing multi-tenant data models, putting proper authorization in place, and keeping cloud bills under control. Research summarized by METR found that for complex tasks, experienced developers can actually be about 19% slower when leaning heavily on AI because verification, refactoring, and debugging overhead cancel out the raw speed of code generation.

“New technologies generally increase complexity rather than reducing it, so a significant portion of a software engineer’s job involves planning, coordinating, and system architecture.” - Jakob Nielsen, UX researcher, in a 2026 prediction on AI and software complexity

- AI will happily generate “discount = price * 0.9,” but it cannot mediate between finance, sales, and legal to decide how discounts must really work - or encode those rules safely across services.

- It can sketch a JWT auth middleware, but it won’t design a robust role-based access model, add audit logs, or think about data residency and compliance.

Testing & DevOps: high coverage, risky last mile

- On the testing side, AI is extremely good at brute-force coverage: some industry reports describe up to 85% higher test coverage and around 30% lower test-writing costs when teams aggressively use AI to generate unit tests. It can look at a function and spit out a dozen Jest cases in seconds.

- But AI doesn’t know which tests actually matter to your users or your business. Humans still have to decide which edge cases are high-risk, design realistic integration and end-to-end tests, and audit for security, privacy, and ethical concerns that a model cannot truly understand as real-world risk.

- For DevOps and deployment, most teams treat AI suggestions as “maybe useful, definitely untrusted.” AI can draft a Dockerfile or Terraform config, but it often misses subtle security settings, scaling behaviors, or cloud-provider quirks. Surveys cited by BetaNews show roughly 76% of developers do not plan to use AI for deployment or infrastructure anytime soon, citing security, reliability, and cost risks in their review of how AI is reshaping the SDLC pipeline (BetaNews’ look at AI in software development).

| Area | What AI is strong at | What humans must still own |

|---|---|---|

| Frontend | React component scaffolding, Tailwind markup, basic accessibility patterns | UX intent, performance under constraints, complex client-side flows |

| Backend & APIs | CRUD routes, ORM models, simple data transformations | System design, unique schemas, security and cost-aware architecture |

| Testing | Generating unit tests, boilerplate setups, regression test stubs | Choosing critical paths, realistic scenarios, security/privacy coverage |

| DevOps | Drafting Docker/Terraform templates, basic CI steps | Production deployments, rollbacks, incident response and SLOs |

The practical way to think about it is this: use AI for scaffolding, not for owning any part of your system. Let it write the first pass of components, routes, and tests, then review that output like you would a fast but naïve junior developer. You’re responsible for adding real error handling, logging, security checks, and the tests that truly matter. Treat AI as a very quick intern - useful, but never unsupervised - rather than an invisible senior engineer you hand the keys to.

Why AI Is Compressing Teams, Not Replacing Developers

Look at how teams actually feel on the ground: it’s not that all the “drivers” got fired, it’s that each driver suddenly has a far more powerful GPS, so companies try to cover the same (or bigger) city with fewer people. In practical terms, AI is compressing teams. You still need humans behind the wheel, but each one is expected to own more routes, more services, more tech surface area than before.

The compression effect: fewer seats, bigger surface area

Industry analyses describe a pattern where a company that used to hire 8-10 engineers for a product now tries to get similar output with maybe 4 highly skilled developers plus AI tools. That’s possible because some estimates suggest that nearly half of all code written in 2025 is AI-generated, and automation-related roles made up roughly 44% of all AI job postings in late 2025. The raw typing - the “turn left in 200 feet” part of the job - is no longer the bottleneck; oversight, integration, and long-term reliability are. A detailed breakdown of these automation trends from Netcorp notes that AI is taking over routine implementation work, but not the higher-order reasoning that keeps systems maintainable across years.

Speeding up code without eliminating engineers

Big tech isn’t using AI to get rid of engineers; it’s using AI to widen the road and cover more ground. At Google, roughly 25% of new code is now AI-assisted, and leadership estimates an overall engineering velocity boost of about 10%. Crucially, Google’s CEO Sundar Pichai has said they still “plan to hire more engineers” because the opportunity space has expanded with AI, not shrunk, a point highlighted in Baytech Consulting’s recap of AI-driven workforce changes. In other words, more can be built, faster - but only if there are enough people who understand how all the pieces fit together in production.

From coder to orchestrator: the new full stack seat

This is where the role of the full stack developer shifts. Instead of being valued for how many lines you can crank out per day, you’re judged on how well you can direct AI, enforce architecture boundaries, and keep the system healthy when it hits real-world detours. Builder.io captures this change bluntly: their analysis of the “AI software engineer” argues that orchestration is becoming the differentiator, not raw implementation speed.

“The most valuable developers won't be the fastest coders, but the best orchestrators - directing agents effectively while safeguarding system reliability.” - Builder.io, AI Software Engineer Report

Inside teams, AI is increasingly treated like a robotic intern: fast, tireless, sometimes brilliant, but never accountable. Someone still has to own the architecture diagrams, the on-call rotation, and the dash of logs and metrics when things go sideways. Compression means there are fewer of those seats, and the expectations sitting in each one are higher - but for developers who can think across the whole stack and manage AI instead of being managed by it, those seats are also more critical than ever.

| Phase | Team Shape | Main Human Value |

|---|---|---|

| Pre-AI-heavy | Larger teams, more narrow roles (frontend, backend, QA) | Implementation speed, framework expertise |

| AI-compressed | Smaller teams, full stack generalists with AI tools | System thinking, AI orchestration, reliability |

| AI-native | Engineers overseeing fleets of agents and services | Architecture, risk management, cross-functional judgment |

How AI Is Reshaping Entry-Level Jobs

If you’re early in your journey, the AI shift doesn’t feel abstract at all - it feels like you showed up for your first driving lesson and were told, “By the way, the car can mostly steer itself now, so we only hire people who can manage three cars at once.” The same tools that make seniors faster are quietly eating the exact “grunt work” that used to justify entry-level roles: wiring basic CRUD APIs, fixing small bugs, adding form validation, and writing boilerplate tests.

The junior pipeline is cracking

Across a lot of companies, the work that trained juniors is exactly what AI does well enough: simple endpoints, straightforward UI tweaks, and repetitive test cases. Hiring data reflects that shift. Analyses of 2025-2026 tech hiring show that entry-level tech roles dropped about 25% in many organizations, and at the very bottom levels (often labeled P1 and P2) some employers cut hiring by over 70%. A breakdown from ByteIota describes this as a move away from “broad generalists” and toward AI-fluent specialists who can deliver value immediately, rather than spending months learning on low-risk tasks that an AI can handle acceptably (ByteIota’s tech hiring 2026 report).

| Aspect | Pre-AI junior role | AI-era entry-level role |

|---|---|---|

| Core work | CRUD APIs, simple bug fixes, boilerplate tests | Reviewing AI output, gluing services together, handling edge cases |

| Expectation on day one | Learning stack and syntax slowly on real tickets | Productivity with AI tools plus solid fundamentals from the start |

| Primary value | Typing speed and willingness to take repetitive tasks | Judgment, product understanding, ability to own small features |

| Common title | “Junior Developer,” “Associate Engineer” | “Full Stack Engineer,” “AI Engineering Coordinator,” “Product Engineer I” |

New expectations for “entry level”

Because AI can write a lot of routine code, the bar for being considered “junior” has moved up. Many teams now expect new hires to be product-minded - to understand users and business goals, not just syntax - and to be productive with AI tools from day one. Some orgs are even renaming their first rung to things like “AI engineering coordinator,” where the job is to prompt tools, review suggestions, and connect pieces across the stack. Editorials on AI’s impact argue that this doesn’t eliminate developers; it raises the standard for which ones matter. As one DevOpsDigest piece on AI and developers puts it, the winners will be the people who become impossible to replace:

“AI won’t replace developers; it will make the best ones indispensable.” - DevOpsDigest editorial, AI and the Future of Developers

Why this doesn’t mean “give up”

Historically, every big abstraction jump - compilers, higher-level languages, cloud - has squeezed out some low-skill roles while creating demand for people who understand the system end to end. The same thing is happening now: the work that remains, even at entry level, looks more like understanding the whole map than memorizing turns. Analysts now estimate that around 80% of the engineering workforce will need to upskill by 2027 to work effectively with AI, which sounds scary until you realize it’s essentially an invitation to treat AI fluency as part of your core toolkit, not an optional add-on. Your move, as a beginner or career-switcher, is to plan for that reality:

- Learn solid HTML, CSS, and JavaScript, plus a modern framework like React and a backend like Node/Express - so you can understand what AI generates, not just copy it.

- Practice using AI tools early, but always rebuild key pieces by hand and refactor AI-written code so you can explain every line in a portfolio review.

- Aim for 2-3 portfolio projects that show end-to-end ownership: UX decisions, API design, basic security, and deployment.

- Focus your story around being the kind of entry-level dev who can supervise AI output, not the one who needs AI just to get anything working - because, as one DEV Community writer bluntly framed it, the real divide in 2026 is between AI users and everyone else (“AI Users vs The Unemployed” on DEV Community).

Core Skills AI Still Can’t Touch

AI can now follow directions frighteningly well, but it still can’t truly read the city. It can trace routes through your codebase, generate components and CRUD endpoints, even spit out tests, yet it doesn’t understand why your system is shaped the way it is, what your users are really trying to do, or what happens when everything hits a messy production detour. The skills that keep human full stack developers in the driver’s seat live above raw coding: system architecture, business logic, UX and brand, and security and ethics.

System architecture: drawing the map, not just following it

Real systems are more than files and functions; they’re moving traffic: requests flowing from browser to backend to database to third-party services, under latency, cost, and reliability constraints. New tools like AI agents and “vibe coding” pipelines are actually increasing complexity, not reducing it, because you’re now coordinating humans, services, and models all at once. Coverage in places like ITPro’s look at AI-native software delivery makes the same point: AI can help with local turns, but architects still have to design the whole route.

- Choosing between a monolith and microservices when traffic could spike overnight.

- Deciding where to cache, where to queue, and where to accept slower responses.

- Planning for failures: partial outages, degraded third-party APIs, data migrations gone wrong.

Business logic and product thinking

AI can easily implement “discount = price * 0.9”; it cannot negotiate the messy human context behind that rule. Full stack developers sit at the intersection of code and business reality: talking to stakeholders, translating fuzzy requirements into concrete data models, and explaining trade-offs in plain language. Analyses like the brutally honest forecast on JavaScript in Plain English’s 2026-2031 outlook stress that AI still can’t own architecture decisions, long-term trade-offs, or stakeholder conversations. That higher-level judgment - what to build, what to postpone, and what to never ship - is where human developers create most of their value.

- Reconciling conflicting asks from sales, support, and compliance into one cohesive feature.

- Designing data models that won’t trap the product when requirements inevitably change.

- Balancing “ship fast” pressure with the future technical debt you’re signing up for.

UX, brand, and the “special spark”

On paper, AI-driven design sounds like magic: generate layouts, components, and even color palettes from a prompt. In reality, teams quickly run into a ceiling. AI can produce “pretty” and “usable,” but not the kind of opinionated, brand-aligned experience that actually differentiates a product. A detailed analysis on Web Design Library’s review of AI web design puts it bluntly: current tools still lack the “special spark” that comes from human empathy, domain knowledge, and iterative testing.

- Understanding why your onboarding needs three steps instead of one if you want users to trust you with their data.

- Shaping copy, micro-interactions, and error states so the product feels like your brand, not a generic template.

- Designing for real devices, bandwidth, and assistive technologies your actual audience uses.

Security, ethics, and the risky last mile

No serious team hands the keys to security, privacy, or ethics over to an AI. Even when models help generate snippets or configs, human developers still own threat modeling, access control, data retention, and incident response. Universities and industry groups keep coming back to the same conclusion: AI tools are code amplifiers, not autonomous guardians. As the University of Louisiana’s School of Computing notes in its overview of AI and coding jobs, AI-driven software still ultimately depends on human engineers for safety and correctness.

“AI software is still written and maintained by human programmers… AI itself needs to be programmed and guided by human programmers to function.” - University of Louisiana at Lafayette, School of Computing & Informatics

- Designing and enforcing role-based access control, audit logs, and data minimization.

- Anticipating abuse cases - how features could be misused, not just used as intended.

- Making judgment calls when legal, ethical, and commercial incentives don’t perfectly align.

All of this lives above simple “knowing” what a function does. It’s about understanding how your whole “city” of services behaves when traffic patterns change, roads close, or someone ignores a stop sign. AI can help you move faster once you see the map; it can’t replace the part of your job where you decide what the map should even look like - or what happens when you’re forced to reroute in the middle of rush hour.

Task Map: What to Automate, What to Pair, What to Own

At this point, AI is like a navigation system built into every car: most developers are using it somewhere in their workflow, whether they like it or not. Surveys show around 82% of developers use AI coding tools at least weekly, and nearly 59% juggle three or more tools in parallel. That ubiquity makes one habit non-negotiable: for every task you touch, you have to decide deliberately whether to automate it with AI, pair with AI, or own it yourself. Without that kind of task-level map, it’s very easy to let AI “drive” right into a dead end and only notice when production alarms start flashing.

Frontend: copy-paste vs collaboration vs craftsmanship

On the frontend, a lot of work falls cleanly into those three buckets. AI is genuinely good at repeatable patterns, shaky on complex flows, and blind to the deeper UX and brand decisions that make an app feel “right.” A reality-check from Towards AI stresses that while models can generate impressive UI code, they still fail on context, nuance, and long-term maintainability, which is why developers who understand those higher layers remain essential (Towards AI’s data-backed look at AI and developer roles).

- Automate with AI: presentational React components from a clear Figma spec, Tailwind utility classes for a given layout, simple pure-function helpers, and unit tests for straightforward UI logic.

- Pair with AI: multi-step forms with conditional fields, client-side caching and optimistic updates, accessibility beyond basic ARIA (focus management, keyboard flows), and performance tuning for specific devices.

- Own yourself: information architecture across pages, onboarding and checkout flows, design decisions tied to your brand voice, and anything where a UX misstep directly hits activation, retention, or revenue.

Backend & APIs: boilerplate, glue code, and critical paths

On the backend, AI shines when the problem looks like the dozens of tutorials it’s trained on, and it falls apart when you introduce messy business rules, multi-tenant constraints, or strict security boundaries. That means you can safely offload a lot of the “turn left here” details, but you must keep tight human control over the map of services and data.

- Automate with AI: CRUD routes for well-understood resources, basic input validation schemas, simple data transformation helpers, and API documentation stubs.

- Pair with AI: payment and subscription flows, multi-tenant isolation (per-organization data), background jobs and schedulers, and rate limiting or quotas tied to business rules.

- Own yourself: service boundaries and module layout, data retention and migration strategies, authentication and authorization models, and anything that affects compliance, SLAs, or incident response.

Testing & QA: more coverage, same responsibility

Testing is where AI can feel like a superpower and a liability at the same time. It’s excellent at generating lots of tests quickly, but it has no intuition about what should actually break your build or block a release. Teams that thrive treat AI as a test generator, not a test strategist, a theme echoed in analyses of AI-augmented IT teams that warn against over-automating judgment-heavy work (Prosum’s look at AI-augmented developers).

- Automate with AI: unit tests for pure functions, snapshot tests for stable components, boilerplate Jest/Vitest/Cypress setup, and regression tests based directly on bug reports.

- Pair with AI: integration tests spanning multiple services, property-based tests for tricky algorithms, performance test scaffolding, and scenario outlines from user stories.

- Own yourself: deciding what’s “critical path,” picking realistic user journeys, setting coverage and reliability targets, and the final “ship/no-ship” call before a release.

| Layer | Automate with AI | Pair with AI | Human-owned |

|---|---|---|---|

| Frontend | Presentational components, Tailwind markup | Complex forms, client-side caching | UX flows, information architecture, brand feel |

| Backend | CRUD endpoints, basic validation | Payments, multi-tenant logic, rate limits | Architecture, auth models, data policies |

| Testing | Unit tests, snapshots, test boilerplate | Integration and performance tests | Test strategy, release criteria |

How to decide in practice

In day-to-day work, you can turn this into a simple habit. Before you start a task, ask: “What parts are boring and repeatable?” (good candidates to automate), “What parts touch money, security, or core UX?” (must be human-owned), and “Where could AI give me a fast draft that I’ll refine?” (pairing zone). For tasks in that middle category, write prompts like “Generate an Express router for this resource, but leave TODO comments where auth, rate limiting, or business rules should go” so you never forget which parts still need real thought.

Whatever you offload, finish with the same human checkpoint: review the diff like a code reviewer, scan for security, error handling, logging, and performance issues, and make sure tests cover the most painful edge cases you can imagine. AI can help you move faster along the road, but only if you stay the one reading the signs, watching the dashboard, and deciding when to hit the brakes.

The New Core Skill Stack for 2026 Full Stack Devs

The core stack for full stack devs has quietly changed. Knowing JavaScript, React, and Node is now the baseline; what separates “AI-dependent tourist” from “local who actually knows the city” is how well you can orchestrate AI, understand systems, and communicate product trade-offs. That means adding new layers to your skill set: context engineering, AI security, platform/DevOps literacy, and product thinking on top of your existing frontend and backend fundamentals.

Context engineering: feeding AI the right map

“Prompt engineering” is evolving into something more demanding: context engineering. It’s not just about clever prompts; it’s about deciding exactly what code, docs, and examples to show the model so it can work effectively without hallucinating. Developers who get the most from tools like Copilot, Cursor, and Claude are the ones who can curate a tight context window, structure their repos clearly, and constrain outputs to specific files and patterns. A widely shared LinkedIn piece on the 10 AI skills full-stack developers need in 2026 puts context engineering right alongside system design and debugging as a must-have, not a bonus skill.

AI security and safety: defending the system, not just the code

As soon as you let user data flow into prompts or let agents take actions (sending emails, calling APIs, modifying records), you own a new security surface. AI security means understanding prompt injection, data leakage through logs, over-permissive tools, and how to build guardrails around what your AI can and cannot do. That looks like validating and sanitizing prompt inputs, keeping secrets out of logs and training data, limiting the power of tools exposed to agents, and working with legal or compliance when you introduce models into regulated domains. These are judgment calls no model can make for you, and they’re fast becoming standard expectations for serious full stack roles.

Platform literacy and product communication

On top of that, you need enough DevOps literacy to understand how your app runs, scales, and fails - Docker images, CI pipelines, cloud services - and enough product thinking to explain trade-offs in plain language. Full stack devs are increasingly the glue between AI tools, infrastructure, and non-technical stakeholders: the person who can look at logs and metrics, then sit in a meeting and say, “If we let AI auto-generate this workflow, here’s what we gain, here’s what we risk, and here’s how we’ll monitor it.” As one AI skill guide for full stack engineers puts it:

“The next generation of full-stack developers will not be measured by how much code they write, but by how effectively they combine AI tools with deep system understanding and clear communication.” - Rahul Kumar Gaddam, AI Skills for Full-Stack Developers, LinkedIn

The 30% rule: where automation should stop

Teams that are getting real value from AI are quietly following a pattern sometimes called the 30% rule: automate roughly a third of the work - the boring, repeatable parts - while keeping humans firmly in charge of creativity, architecture, and final decisions. A practical guide on the 30% rule in AI points out that trying to automate everything usually backfires in complexity and bugs; targeted automation is where the ROI lives. For you as a developer, that means treating AI as muscle, not brain: let it draft code, tests, and docs, but reserve design, risk assessment, and communication for yourself.

| Skill area | What AI can help with (~30%) | Human edge | How to practice |

|---|---|---|---|

| Context engineering | Summarizing files, proposing refactors | Choosing relevant context, setting constraints | Give AI small, specific repo slices and refine prompts |

| AI security | Suggesting validation or auth snippets | Threat modeling, policy decisions | Review prompts and flows like an attacker would |

| Platform & DevOps | Drafting Dockerfiles and CI configs | Understanding runtime behavior and failure modes | Deploy small apps, read logs/metrics, debug failures |

| Product thinking | Generating user stories or copy variants | Prioritization, trade-offs, stakeholder alignment | Write short design docs and explain decisions to others |

A Practical Day-to-Day Workflow for Working with AI

Dropping an AI assistant into your editor without a workflow is like turning on navigation in a city you don’t know and blindly following every “turn right now” prompt. It feels fast at first, then you realize you’ve looped the same block three times and still haven’t parked. To actually get value, you need a repeatable way to use AI on code tasks: when to ask for help, how to constrain it, and how to review its suggestions so you don’t spend more time untangling messes than you saved.

Step 1: Start with a human spec, not a prompt

Before you even open an AI tab, write a short spec in your own words. For a feature or bugfix, capture the problem, inputs and outputs, constraints (performance, security, UX), and any known edge cases. This doesn’t have to be a formal document; a few bullet points in a Markdown file or ticket is enough. The point is to think like an engineer first, not a prompt typist. A clear spec makes your prompts sharper and your review work easier, because you already decided what “correct” should look like.

- Define the goal: what user problem are you solving, in one or two sentences.

- List inputs/outputs and any external systems involved (APIs, queues, databases).

- Note obvious risks: security, data loss, UX regressions, performance hotspots.

Step 2-3: Use AI for scaffolding, then review like a senior

Once you know what you’re building, ask AI for the boring parts: initial React components, Express routes, Prisma models, or test file stubs. Be explicit about boundaries (“only touch this file,” “don’t change existing exports”) and patterns (“use async/await,” “follow our existing error-handling middleware”). Then treat the output exactly like a junior teammate’s pull request: you are responsible for every line that lands. Engineers who skip this step often discover that their “productivity boost” is an illusion; as The Pragmatic Engineer’s deep dive on AI-written code shows, teams still spend most of their time on design, debugging, and integration even when AI writes nearly all of the first draft.

“The hard part of software engineering is not writing code, but figuring out what to write and how to verify it.” - Gergely Orosz, Founder, The Pragmatic Engineer

- Read the diff top to bottom; if you can’t explain a block, rewrite or delete it.

- Check for missing error handling, logging, and edge-case branches.

- Scan for security issues: input validation, auth checks, secrets handling.

- Make sure the style and architecture match the rest of the codebase.

Step 4-5: Let AI draft tests and logs, then observe in a real environment

Next, ask AI to generate unit tests, basic integration tests, and suggested logging or metrics. You still decide what’s on the critical path - auth flows, payments, data deletion - and which edge cases must be covered before you ship. Finally, run everything in a realistic environment (local containers, staging, feature branches), watch logs and metrics, and do targeted manual QA on the riskiest flows. Publications like DevOpsDigest’s analysis of AI-augmented teams emphasize this human-in-the-loop stage as non-negotiable: AI can suggest changes, but only you can judge reliability in the messy reality of production traffic and incidents.

- Write a human spec for the change.

- Use AI to scaffold code in small, well-defined chunks.

- Review and refactor AI output as if it came from a junior dev.

- Have AI draft tests and instrumentation; you choose what’s critical.

- Deploy to a safe environment, observe behavior, and iterate.

Over time, this workflow becomes muscle memory: you drive, AI helps with directions. Instead of bouncing between copy-pasted suggestions and random errors, you move through a predictable loop that keeps you in control of design, risk, and quality - exactly the parts of the job AI still can’t do for you.

Upskilling Path: From Beginner to AI-Ready Full Stack

Starting from scratch right now can feel like trying to learn to drive in the middle of a construction zone: every YouTube tutorial says “just learn React” while Twitter screams that AI will write all your code anyway. The path isn’t closed, but it is steeper. To get into the lane of AI-era full stack work, you need two intertwined stacks: solid, old-school web fundamentals so you actually understand the car and the city, and AI collaboration skills so you can use the new tools without letting them drive you into a ditch.

Lay down a classic full stack foundation

The first layer is still the “boring” stuff: HTML, CSS, and modern JavaScript, then a frontend framework like React, and a backend with Node.js, Express, and a database such as MongoDB. You also need Git, basic testing, and at least one way to deploy a real app. That’s not just bootcamp marketing; it matches what independent roadmaps recommend. A popular 2026 guide to becoming a full stack developer lays out almost exactly this progression - web basics, React, Node, a database, Git, and deployment - before you even worry about AI, because without that foundation you can’t reliably review or fix what AI generates in your codebase. You can see this stack laid out in a widely shared full stack developer roadmap that many juniors and hiring managers refer to.

Use structure to compress the learning curve

For a lot of beginners and career-switchers, trying to piece all of that together from random tutorials is where they stall out. A structured program gives you a route, deadlines, and feedback. Nucamp’s Full Stack Web and Mobile Development Bootcamp is a concrete example of how to package those fundamentals into something you can realistically finish while working or caring for a family: 22 weeks, about 10-20 hours per week, with weekly 4-hour live workshops capped at 15 students. Tuition comes in at around $2,604 with early-bird pricing - far below the $15,000+ many in-person bootcamps charge - which is a big deal if you’re self-funding. The curriculum walks you through web fundamentals, React, React Native for mobile, Node/Express, MongoDB, and a dedicated 4-week portfolio project where you ship a full stack app end to end. That’s the kind of project you can point to in interviews and, just as importantly, use as a sandbox for AI tools later.

Layer AI collaboration on top of your fundamentals

Once you’re comfortable building small apps without help, start deliberately weaving AI into your practice. Use it as a tutor to explain errors, as a scaffolding engine for boilerplate components and routes, and as a reviewer that suggests refactors and tests. The key is to keep yourself in the driver’s seat: for each feature, build at least part of it by hand, then ask AI to refactor or extend your code, and finally review what it did line by line until you can explain every change. This is also the right stage to learn “context engineering” - feeding AI the right slices of your codebase and constraints - so you’re preparing for real-world workflows instead of just copying answers.

Turn full stack skills into AI product skills

After you’ve got a couple of deployed full stack projects and you’re comfortable using AI as a day-to-day coding partner, you can choose to stay on the traditional engineer track or lean into AI products. Nucamp’s Solo AI Tech Entrepreneur Bootcamp is built as that second step: a 25-week program (about six months) that assumes you already know JavaScript and a modern framework, then teaches you to integrate LLMs, design prompts and agents, and ship a production SaaS with authentication, payments, and deployment. Tuition is around $3,980 with early-bird options, and the stack stretches you beyond JavaScript-only work into tools like Svelte, Strapi, PostgreSQL, Docker, and GitHub Actions. The idea is simple: first learn to be a solid full stack “local” who understands the city; then learn to build AI-powered services that run through that city on your terms.

If you’re earlier than that - still deciding if tech is for you - shorter on-ramps like Nucamp’s 4-week Web Development Fundamentals (around $458) or the 11-month Complete Software Engineering Path (about $5,644) can help you test the waters or commit to a longer journey. Whatever route you pick, the pattern is the same: foundations first, then full stack, then AI collaboration, and finally AI product skills. The road has more detours than it used to, but if you build your skills in that order, you’re not just following directions - you’re learning the map well enough to reroute your own career when traffic patterns change.

Level Up: Building AI-Powered Products and Agents

Once you can build a full stack app end to end, the real jump isn’t just “learn another framework” - it’s learning how to turn that app into an AI-powered product. That means going from “I use AI as a coding assistant” to “my product itself calls models, uses retrieval, and runs agents that actually do work for users.” Hiring data reflects this shift: AI specialist roles have grown by roughly 49% year over year while many generic IT roles are flat, and postings for forward-deployed engineers who customize AI solutions for clients have surged by around 800%. The work is moving toward people who can both ship software and embed AI into it.

From full stack features to AI-native experiences

AI-powered products aren’t just chatbots bolted onto an app; they’re systems where large language models, data stores, and traditional services work together. As a full stack dev, your advantage is that you already know how to wire frontends, backends, and databases - now you’re adding LLM APIs, vector search, and agents into that mix. Web dev trend reports call out AI-driven experiences - personalized interfaces, AI search, and intelligent assistants - as one of the defining forces in modern products, with pieces like LogRocket’s 2026 web development trends highlighting AI as a core pillar, not a side feature.

- Turn a React app into an AI support portal where a Node.js backend calls OpenAI or Claude, uses retrieval-augmented generation (RAG) against your own docs, and streams answers back to the UI.

- Add an AI “copilot” to a project management tool that reads tasks from PostgreSQL, suggests next steps, and opens tickets via an agent workflow.

- Build a SaaS that summarizes customer feedback from multiple channels, tagging and grouping issues for non-technical teams to act on.

Specialist roles and the edge of entrepreneurship

This is exactly where new roles are forming. Companies are hiring LLM developers, AI product engineers, and forward-deployed engineers who can sit with a customer, understand their workflows, and implement an AI-powered solution, not just a feature. At the same time, the barrier to launching your own AI SaaS has dropped dramatically: with a solid full stack base, one person can now prototype, integrate LLMs, and ship a paid product. Legal and industry commentary describes the current moment as “a breakthrough AI year, and one of reckoning” for vendors and teams alike - a phase where those who adapt their skills to AI-native products accelerate, while others get squeezed.

“2026 is a breakthrough AI year, and one of reckoning.” - Editorial analysis, Legal IT Insider

A structured path: from full stack to AI tech entrepreneur

If you want a guided route into this world, Nucamp’s stack of programs is intentionally sequenced. The Full Stack Web and Mobile Development Bootcamp gives you a JavaScript-first foundation over 22 weeks (React, React Native, Node.js, MongoDB, plus a 4-week portfolio project) for about $2,604 with early-bird pricing. From there, the Solo AI Tech Entrepreneur Bootcamp is a 25-week follow-on that assumes you already know modern web development and focuses on turning you into an AI product builder: prompt engineering, integrating LLMs like OpenAI and Claude, building agents, and shipping a real SaaS with authentication, payments (Stripe or Lemon Squeezy), and global deployment. Tuition runs around $3,980 with early-bird options, and workshops stay capped at 15 students so you get feedback while you design, build, and launch.

| Stage | Primary focus | Key stack | Outcome |

|---|---|---|---|

| Full stack developer | Web & mobile fundamentals | HTML, CSS, JS, React, React Native, Node, MongoDB | Deployed full stack portfolio projects |

| AI-integrated engineer | LLM & API integration | Existing stack + LLM APIs, vector search, basic agents | Apps with AI-powered features (chat, search, summarization) |

| Solo AI tech entrepreneur | Product + business ownership | Svelte, Strapi, PostgreSQL, Docker, GitHub Actions + LLMs | Production AI SaaS with auth, payments, and real users |

Seen this way, “leveling up” isn’t a mysterious leap - it’s a sequence. First, become the kind of full stack dev who can ship features without AI. Next, learn to embed models and agents into those features in safe, reliable ways. Finally, if entrepreneurship appeals to you, use programs and projects that push you to own the whole loop: problem, product, AI system, and business. That’s where AI stops being something that might replace you and starts being the engine under products you control.

A 12-Month Roadmap to Stay Employable in the AI Era

Trying to stay employable in this AI moment without a plan is like driving through a city you don’t know, at night, with a glitchy GPS. You might get there eventually, but you’ll burn a lot of time and stress on wrong turns. A 12-month roadmap gives you something better than vibes: a sequence of skills and projects that move you from “I kind of know JavaScript” to “I can ship full stack apps and use AI responsibly to move faster.”

Months 1-3: Web foundations you can trust without AI

The first three months are about learning to drive without autopilot. That means getting comfortable with HTML, CSS, and modern JavaScript, plus basic Git and the browser dev tools. Focus on building small, boring things by hand: static pages, simple forms, basic DOM interactions. During this phase, you can use AI like a tutor (to explain an error message or a concept), but avoid letting it write entire files. You want manual reps so that, later, you can actually review and fix AI-generated code instead of just hoping it works. Short, focused programs like a 4-week web fundamentals course (Nucamp’s is around $458) can fit cleanly into this window and give you structure without locking you in long term.

- Build 2-3 tiny projects (landing page, todo list, responsive layout).

- Practice Git every day: init, commit, push, pull requests on your own repos.

- Use AI only to clarify, not to deliver full solutions.

Months 4-6: Full stack basics and your first real app

Next, you add the engine and the other half of the city: a frontend framework and a backend. This is where you learn React for the client, plus Node.js, Express, and a database like MongoDB for the server. Your goal by the end of month six is to have at least one deployed full stack app you can show to another human. A structured path like Nucamp’s 22-week Full Stack Web and Mobile Development Bootcamp (about $2,604 with early-bird pricing, 10-20 hours a week, small live workshops) effectively compresses this phase: it covers web basics, React, React Native, Node/Express, MongoDB, and gives you a dedicated 4-week portfolio project to glue it all together. At this point, start letting AI scaffold boring pieces - CRUD routes, form components, basic tests - while you stay fully responsible for wiring, logic, and debugging.

- Deploy 1-2 full stack apps (auth, CRUD, simple dashboards or feeds).

- Use AI to generate boilerplate, but always refactor and review it.

- Write a short README for each project explaining what you built and why.

Months 7-12: Systems, AI collaboration, and AI-powered features

The back half of the year is about moving from “I can follow tutorials” to “I understand how the system behaves” and “I know how to plug AI into it safely.” Months 7-9: deepen your understanding of system design, testing, and observability. Add better logging, metrics, and tests to your existing projects; practice using AI daily to suggest refactors, tests, and docs, but keep design and review in your hands. Months 10-12: start building AI-augmented features. Call an LLM API from your Node backend, add an AI-powered search or summarization feature, or build a simple in-app assistant that knows about your app’s data. Web trends reports, like Testmu’s analysis of web development trends and automation, keep hammering the same point: the people who thrive are those who can combine solid engineering with AI integration, not those who only know prompts.

- Refactor one existing project with better structure, tests, and logging.

- Build at least one feature that uses an LLM API with real app data.

- Write a 1-2 page “architecture + AI” doc for your best project: what services exist, how data flows, where AI is used, and how you handle errors and abuse cases.

| Months | Main focus | Concrete output | Optional support |

|---|---|---|---|

| 1-3 | HTML, CSS, JS, Git basics | 2-3 small static/interactive sites | Short web fundamentals course (~4 weeks) |

| 4-6 | React + Node/Express + MongoDB | 1-2 deployed full stack apps | 22-week full stack bootcamp with a 4-week capstone |

| 7-9 | System design, testing, observability | Refactored project with tests and logs | Intermediate resources on system design and DevOps |

| 10-12 | LLM integration and AI features | AI-augmented app in your portfolio | Advanced track like a 25-week AI product/entrepreneur bootcamp |

By the end of this year, you’re not just someone who can ask AI for snippets; you’re someone who can build and understand an entire app, then decide where AI belongs in it and how to keep it safe. That’s what “staying employable” really looks like in the AI era: not outrunning the tools, but learning the map well enough that, when the road ahead closes, you know exactly how to reroute.

Conclusion: Be the Driver, Not the GPS

By now, the pattern should feel clear: AI is everywhere in the car. It’s in your editor, in your docs search, in your design tools. It will happily suggest turns all day long. But when a production bug hits, an integration fails, or the business suddenly changes direction, everyone still turns to a human and asks, “So… what do we do?” Being employable in this era isn’t about out-coding the models; it’s about being the person who can answer that question without panicking.

Industry reports from places like the World Economic Forum point out that a huge share of job skills - on the order of a third or more - are shifting this decade as AI becomes standard infrastructure. At the same time, analyses compiling dozens of AI growth and adoption statistics show usage curves that are still rocketing up, not flattening out. Put those together and the message is blunt: AI isn’t a temporary fad you can wait out. It’s part of the road now. Your safety margin comes from understanding how the whole system works - from frontend to backend to deployment to AI integration - so you can adapt as tools and frameworks change.

That’s why this guide has hammered on foundations and judgment rather than secret hacks. If you learn the web basics, build real full stack projects, practice a disciplined AI workflow, and then start embedding AI features into your own apps, you’re not just “keeping up with the latest thing.” You’re training yourself to read maps, watch the dash, and reroute under pressure. That’s what hiring managers, founders, and clients are betting on when they choose people in a compressed, AI-heavy job market.

The road ahead absolutely has more construction cones than it did a few years ago. Junior titles are thinner on the ground. Expectations are higher. But there is still plenty of work for people who can ship, think, and learn. If you commit to the path - one year of focused effort on full stack fundamentals, AI collaboration, and at least one AI-augmented product in your portfolio - you’re not asking the GPS to save you. You’re using it as one more tool while you drive, fully awake, fully responsible, and capable of getting where you and your team need to go, even when the route keeps changing.

Frequently Asked Questions

Will AI actually replace full stack developers in 2026?

No - AI is changing how developers work but not replacing them wholesale; most teams still need human judgment for architecture, security, and messy edge cases. For example, while 84% of developers reported using AI daily in 2025, trust in those tools fell to about 33%, so humans remain essential for verification and system-level decisions.

How will AI change a full stack developer’s day-to-day work?

Expect to spend more time orchestrating AI, reviewing generated code, and owning reliability rather than just typing boilerplate - AI handles scaffolding but not system design. The result is team compression: companies often try to cover more surface area with fewer senior engineers as roughly half of new code in 2025 was AI-generated.

What skills should I prioritize to stay employable in the AI era?

Keep strong web fundamentals (HTML, CSS, JS, React, Node) and add system architecture, context engineering (prompting with curated repo context), AI security, platform/DevOps literacy, and product thinking. Analysts estimate about 80% of engineers will need to upskill by 2027 to work effectively with AI, so combine coding chops with judgment and communication.

Are entry-level developer jobs gone, and how can a beginner break in?

Entry-level hiring has tightened - some orgs cut junior roles by over 70% and entry-level openings dropped ~25% in places - but beginners can still break in by showing end-to-end ownership. Build 2-3 deployed portfolio projects that demonstrate UX decisions, API design, basic security, and the ability to review AI-generated code; being AI-fluent from day one is increasingly expected.

How should I use AI when coding so it helps rather than creates more work?

Use a human-written spec first, ask AI to scaffold small, well-scoped pieces, then review and refactor the output like a senior engineer before deploying to staging. This human-in-the-loop workflow matters because roughly 82% of developers use AI weekly and treating AI as a fast intern (not an owner) prevents the “almost right but not quite” debugging overhead many report.

Here’s a long-form learn to integrate Jest and Playwright into CI/CD pipelines walkthrough for real projects.

Use this practical tutorial for building a React + Node + PostgreSQL app that covers deployment and observability.

If you need an actionable roadmap, consult this complete guide to getting your first full stack job.

Want a low-cost alternative to a four-year program? Explore the CS degree alternative to bootcamp section.

The complete blueprint for full-stack Next.js apps shows folder structure, auth, and deployment choices you can use in a portfolio project.

Irene Holden

Operations Manager

Former Microsoft Education and Learning Futures Group team member, Irene now oversees instructors at Nucamp while writing about everything tech - from careers to coding bootcamps.